IXmongodb

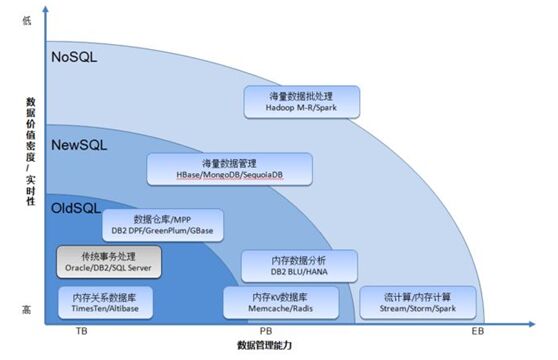

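NoSQL ("not only SQL");

The big-data storage problem:

Parallel databases (horizontal partitioning; partitioned queries);

NoSQL database management systems:

Non-relational data model;

Distributed;

No ACID guarantees and no adherence to relational normalization rules;

Simple data model;

Metadata separated from data;

Weak consistency;

High throughput;

Strong horizontal scalability on clusters of low-end hardware;

Representative products (clustrix; GenieDB; ScaleBase; NimbusDB; Drizzle);

Cloud data management (DBaaS);

Big-data analytics (MapReduce);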

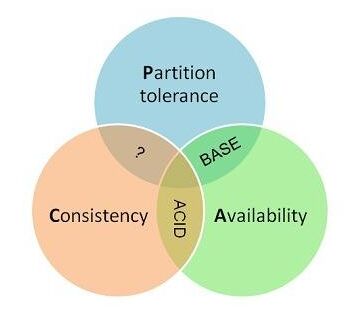

CAP:

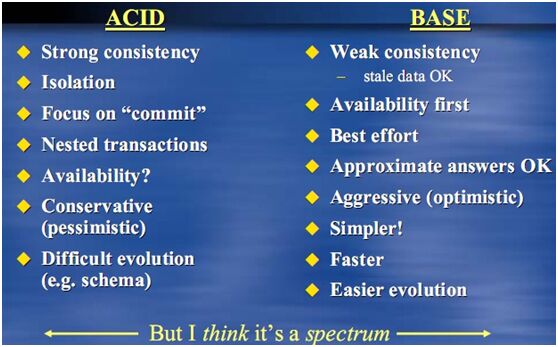

ACID vs BASE:

| ACID | BASE |
| --- | --- |
| atomicity | basically available |
| consistency | soft state |
| isolation | eventually consistent |
| durability | |

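Note:

Eventual consistency comes in several variants:

Causal consistency;

Read-your-writes consistency;

Session consistency;

Monotonic read consistency;

Timeline consistency;

Note:

ACID (strong consistency; isolation; a pessimistic, conservative approach that is hard to change);

BASE (weak consistency; availability first; an optimistic approach that adapts to change and is simpler and faster);

Techniques for implementing data consistency:

NRW strategy (

N (number): total number of replicas;

R (read): number of replicas that must respond for a read to complete;

W (write): number of replicas that must acknowledge for a write to complete;

R+W>N (strong consistency: every read quorum overlaps every write quorum);

R+W<=N (weak consistency: a read may miss the latest write);

);

2PC;

Paxos;

Vector clock;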
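
The NRW rule above can be checked mechanically. The following is a minimal sketch in plain JavaScript; the function name `classifyQuorum` is made up for illustration and is not part of any database API. It only encodes the overlap argument: when R+W>N, every read set shares at least one replica with every write set.

```javascript
// Classify an N/R/W replica configuration using the quorum-overlap rule.
// n: total replicas, r: replicas consulted per read, w: replicas acknowledging a write.
function classifyQuorum(n, r, w) {
  const valid =
    [n, r, w].every(Number.isInteger) && r >= 1 && w >= 1 && r <= n && w <= n;
  if (!valid) {
    throw new RangeError("expected integers with 1 <= r, w <= n");
  }
  // If r + w > n, any read set and any write set must share a replica.
  return r + w > n ? "strong" : "weak";
}

console.log(classifyQuorum(3, 2, 2)); // strong
console.log(classifyQuorum(3, 1, 1)); // weak
console.log(classifyQuorum(3, 1, 2)); // weak (r + w equals n, so the sets may not overlap)
```

With N=3, choosing R=2 and W=2 is the usual way to get overlapping quorums while still tolerating one unavailable replica.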

http://www.nosql-database.org/

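Data storage models (NoSQL families are classified by these models):

Column-family model (

Use case: distributed data storage with random read/write support on top of a distributed file system;

Typical products: HBase, Hypertable, Cassandra;

Data model: column-oriented storage; values of the same column are stored together;

Strengths: fast queries, high scalability, easy to scale out across nodes;

);

Document model (

Use case: web applications without strong transactional requirements;

Typical products: MongoDB, ElasticSearch, CouchDB, Couchbase Server;

Data model: key-value pairs stored as documents;

Strengths: the data model does not have to be defined in advance;

);

Key-value model (

Use case: content caching; high-load scenarios with heavy concurrent data access;

Typical products: redis, DynamoDB (amazon), Azure Table Storage, Riak;

Data model: key-value pairs implemented on a hash table;

Strengths: fast lookups;

);

Graph model (

Use case: social networks, recommendation systems, relationship graphs;

Typical products: Neo4j, Infinite Graph;

Data model: graph structure;

Strengths: well suited to graph computation;

);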

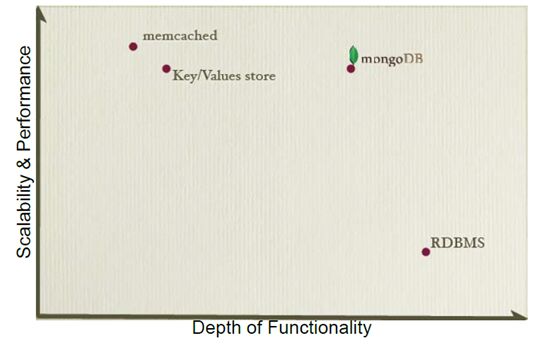

introduction to mongodb:

MongoDB (from "humongous") is a scalable, high-performance, open source, schema-free, document-oriented NoSQL database;

what is MongoDB?

humongous (huge + monstrous);

document database (document-oriented database: uses JSON, stored as BSON);

schema free;

written in C++;

open source;

GNU AGPL V3.0 License;

OS X, Linux, Windows, Solaris | 32-bit, 64-bit;

developed and supported by 10gen; first released in February 2009;

NoSQL;

performance (written in C++, full index support, no multi-document transactions but atomic operations on single documents, memory-mapped files (delayed writes));

scalable (replication, auto-sharding);

commercially supported (10gen): lots of documentation;

document-based queries:

flexible document queries expressed in JSON/JavaScript;

Map/Reduce (flexible aggregation and data processing; queries run in parallel on all shards);

GridFS (store files of any size easily);

geospatial indexing (find objects based on location, e.g. find the closest n items to x);

many production deployments;

features:

collection-oriented storage: easy storage of object/JSON-style data;

dynamic queries;

full index support, including on inner objects and embedded arrays;

query profiling;

replication and fail-over support;

efficient storage of binary data including large objects (e.g. photos and videos);

auto-sharding for cloud-level scalability (currently in alpha);

great for (websites; caching; high-volume, low-value data; high scalability; storage of program objects and JSON);

not as great for (highly transactional workloads; ad-hoc business intelligence; problems requiring SQL);

Note:

JSON (JavaScript Object Notation) is built on two structures: a collection of name/value pairs, and an ordered list of values;

DDL;

DML

https://www.mongodb.com/

http://downloads-distro.mongodb.org/repo/redhat/os/x86_64/RPMS/

mongodb-org-mongos-2.6.4-1.x86_64.rpm

mongodb-org-server-2.6.4-1.x86_64.rpm

mongodb-org-shell-2.6.4-1.x86_64.rpm

mongodb-org-tools-2.6.4-1.x86_64.rpm

]# yum -y install mongodb-org-server-2.6.4-1.x86_64.rpm mongodb-org-shell-2.6.4-1.x86_64.rpm mongodb-org-tools-2.6.4-1.x86_64.rpm

]# rpm -qi mongodb-org-server #(This package contains the MongoDB server software, default configuration files, and init.d scripts.)

<!--Description :

MongoDB is built for scalability, performance and high availability, scaling from single server deployments to large, complex multi-site architectures. By leveraging in-memory computing, MongoDB provides high performance for both reads and writes. MongoDB’s native replication and automated failover enable enterprise-grade reliability and operational flexibility.

MongoDB is an open-source database used by companies of all sizes, across all industries and for a wide variety of applications. It is an agile database that allows schemas to change quickly as applications evolve, while still providing the functionality developers expect from traditional databases, such as secondary indexes, a full query language and strict consistency.

MongoDB has a rich client ecosystem including hadoop integration, officially supported drivers for 10 programming languages and environments, as well as 40 drivers supported by the user community.

MongoDB features:

* JSON Data Model with Dynamic Schemas

* Auto-Sharding for Horizontal Scalability

* Built-In Replication for High Availability

* Rich Secondary Indexes, including geospatial

* TTL indexes

* Text Search

* Aggregation Framework & Native MapReduce

-->

]# rpm -qi mongodb-org-shell #(This package contains the mongo shell.)

]# rpm -qi mongodb-org-tools #(This package contains standard utilities for interacting with MongoDB.)

]# mkdir -pv /mongodb/data

mkdir: created directory `/mongodb'

mkdir: created directory `/mongodb/data'

]# id mongod

uid=497(mongod) gid=497(mongod) groups=497(mongod)

]# chown -R mongod.mongod /mongodb/

]# vim /etc/mongod.conf

#dbpath=/var/lib/mongo

dbpath=/mongodb/data

bind_ip=172.168.101.239

#httpinterface=true

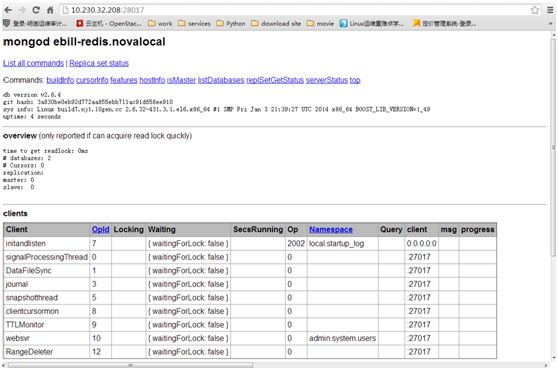

httpinterface=true #28017

]# /etc/init.d/mongod start

Starting mongod: /usr/bin/dirname: extra operand `2>&1.pid'

Try `/usr/bin/dirname --help' for more information.

[ OK ]

]# vim /etc/init.d/mongod #(fixes the "2>&1.pid" error above)

#daemon --user "$MONGO_USER" "$NUMACTL $mongod $OPTIONS>/dev/null 2>&1"

daemon --user "$MONGO_USER" "$NUMACTL $mongod $OPTIONS>/dev/null"

]# /etc/init.d/mongod restart

Stopping mongod: [ OK ]

Starting mongod: [ OK ]

]# ss -tnl | egrep "27017|28017"

LISTEN 0 128 127.0.0.1:27017 *:*

LISTEN 0 128 127.0.0.1:28017 *:*

]# du -sh /mongodb/data

3.1G /mongodb/data

]# ll -h !$

ll -h /mongodb/data

total 81M

drwxr-xr-x 2 mongod mongod 4.0K Jun 29 16:23 journal

-rw------- 1 mongod mongod 64M Jun 29 16:23 local.0

-rw------- 1 mongod mongod 16M Jun 29 16:23 local.ns

-rwxr-xr-x 1 mongod mongod 6 Jun 29 16:23 mongod.lock

]# mongo --host 172.168.101.239 #(connects to the test database by default)

MongoDB shell version: 2.6.4

connecting to: 172.168.101.239:27017/test

Welcome to the MongoDB shell.

……

> show dbs

admin (empty)

local 0.078GB

> show collections

> show users

> show logs

global

> help

db.help() help on db methods

db.mycoll.help() help on collection methods

sh.help() sharding helpers

rs.help() replica set helpers

……

> db.help()

……

> db.stats() #(database statistics)

{

"db": "test",

"collections": 0,

"objects": 0,

"avgObjSize": 0,

"dataSize": 0,

"storageSize": 0,

"numExtents": 0,

"indexes": 0,

"indexSize": 0,

"fileSize": 0,

"dataFileVersion": {

},

"ok": 1

}

> db.version()

2.6.4

> db.serverStatus() #(status of the mongodb server)

……

> db.getCollectionNames()

[ ]

> db.mycoll.help()

……

> use testdb

switched to db testdb

> show dbs

admin (empty)

local 0.078GB

test (empty)

> db.stats()

{

"db": "testdb",

"collections": 0,

"objects": 0,

"avgObjSize": 0,

"dataSize": 0,

"storageSize": 0,

"numExtents": 0,

"indexes": 0,

"indexSize": 0,

"fileSize": 0,

"dataFileVersion": {

},

"ok": 1

}

> db.students.insert({name:"tom",age: 22})

WriteResult({ "nInserted" : 1 })

> show collections

students

system.indexes

> show dbs

admin (empty)

local 0.078GB

test (empty)

testdb 0.078GB

> db.students.stats()

{

"ns": "testdb.students",

"count": 1,

"size": 112,

"avgObjSize": 112,

"storageSize": 8192,

"numExtents": 1,

"nindexes": 1,

"lastExtentSize": 8192,

"paddingFactor": 1,

"systemFlags": 1,

"userFlags": 1,

"totalIndexSize": 8176,

"indexSizes": {

"_id_": 8176

},

"ok": 1

}

> db.getCollectionNames()

[ "students","system.indexes" ]

> db.students.insert({name:"jerry",age: 40,gender: "M"})

WriteResult({ "nInserted" : 1 })

> db.students.insert({name:"ouyang",age:80,course: "hamagong"})

WriteResult({ "nInserted" : 1 })

> db.students.insert({name: "yangguo",age:20,course: "meivnquan"})

WriteResult({ "nInserted" : 1 })

> db.students.insert({name:"guojing",age: 30,course: "xianglong"})

WriteResult({ "nInserted" : 1 })

> db.students.find()

{ "_id" :ObjectId("5955b505853f9091c4bf4056"), "name" :"tom", "age" : 22 }

{ "_id" :ObjectId("595b3816853f9091c4bf4057"), "name" :"jerry", "age" : 40, "gender" : "M" }

{ "_id" :ObjectId("595b3835853f9091c4bf4058"), "name" :"ouyang", "age" : 80, "course" :"hamagong" }

{ "_id" :ObjectId("595b384f853f9091c4bf4059"), "name" :"yangguo", "age" : 20, "course" :"meivnquan" }

{ "_id" :ObjectId("595b3873853f9091c4bf405a"), "name" :"guojing", "age" : 30, "course" :"xianglong" }

> db.students.count()

5

mongodb documents:

mongodb stores data in the form of documents, which are JSON-like field and value pairs;

documents are analogous to structures in programming languages that associate keys with values, where keys may hold other pairs of keys and values (e.g. dictionaries, hashes, maps, and associative arrays);

formally, mongodb documents are BSON documents; BSON is a binary representation of JSON with additional type information;

field:value;

document:

stored in a collection; think record or row;

has an _id key that works like a primary key in MySQL;

two options for relationships: subdocument or db reference;

mongodb collections:

mongodb stores all documents in collections;

a collection is a group of related documents that have a set of shared common indexes;

collections are analogous to tables in relational databases;

collection:

think table, but with no schema;

for grouping into smaller query sets (speed);

each top-level entity in your app would have its own collection (users, articles, etc.);

full index support;

database operations: query

in mongodb a query targets a specific collection of documents;

queries specify criteria, or conditions, that identify the documents that mongodb returns to the clients;

a query may include a projection that specifies the fields from the matching documents to return;

you can optionally modify queries to impose limits, skips, and sort orders;

Example:

db.users.find({age: {$gt: 18}}).sort({age: 1})

db.COLLECTION.find({FIELD: {QUERYCRITERIA}}).MODIFIER
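
To make the shape of such a query concrete, here is a minimal in-memory sketch in plain JavaScript. It is not MongoDB code: the `users` array and the `findGtSorted` helper are hypothetical and only mimic what `find({age: {$gt: 18}}).sort({age: 1})` asks for, namely filter by a criterion and then order the result.

```javascript
// Hypothetical sample data standing in for a "users" collection.
const users = [
  { name: "ann", age: 17 },
  { name: "bob", age: 42 },
  { name: "cid", age: 19 },
];

// Mimics find({[field]: {$gt: bound}}).sort({[field]: 1}) on a plain array.
function findGtSorted(docs, field, bound) {
  return docs
    .filter((doc) => doc[field] > bound)   // query criteria
    .sort((a, b) => a[field] - b[field]);  // ascending sort modifier
}

const adults = findGtSorted(users, "age", 18);
console.log(adults.map((u) => u.name)); // [ 'cid', 'bob' ]
```

Because `filter` returns a new array, the sort does not reorder the original `users` data, which loosely mirrors how a query leaves the stored documents untouched.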

query interface:

for query operations, mongodb provides a db.collection.find() method;

the method accepts both the query criteria and projections and returns a cursor to the matching documents;

query behavior:

all queries in mongodb address a single collection;

you can modify the query to impose limits, skips, and sort orders;

the order of documents returned by a query is not defined and is not necessarily consistent unless you specify a sort();

operations that modify existing documents (i.e. updates) use the same query syntax as queries to select documents to update;

in the aggregation pipeline, the $match pipeline stage provides access to mongodb queries;

data modification:

data modification refers to operations that create, update, or delete data;

in mongodb, these operations modify the data of a single collection;

all write operations in mongodb are atomic on the level of a single document;

for the update and delete operations, you can specify the criteria to select the documents to update or remove;

RDBMS vs mongodb

| RDBMS | mongodb |
| --- | --- |
| table, view | collection |
| row | JSON document |
| index | index |
| join | embedded document |
| partition | shard |
| partition key | shard key |

db.collection.find(<query>,<projection>)

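This is similar to a SELECT statement in SQL: <query> corresponds to the WHERE clause, and <projection> corresponds to the list of selected fields;

If find() is called without <query>, all documents in the collection are returned;

db.collection.count() returns the number of documents in the given collection;

Example:

> use testdb

> for (i=1;i<=100;i++)

{ db.testColl.insert({hostname:"www"+i+".magedu.com"})}

> db.testColl.find({hostname: {$gt:"www95.magedu.com"}})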
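
Note that $gt on strings compares them as strings, not as numbers. The sketch below replays the comparison in plain JavaScript, assuming the default byte-wise string ordering with no collation configured; the variable names are illustrative only.

```javascript
// Rebuild the 100 hostnames inserted by the loop above.
const hostnames = [];
for (let i = 1; i <= 100; i++) {
  hostnames.push("www" + i + ".magedu.com");
}

// JavaScript's > on strings compares code units left to right, which for
// these ASCII names matches a plain byte-wise string comparison.
const matches = hostnames.filter((h) => h > "www95.magedu.com");
console.log(matches);
// [ 'www96.magedu.com', 'www97.magedu.com', 'www98.magedu.com', 'www99.magedu.com' ]
```

"www100.magedu.com" is not in the result because the character "1" sorts before "9", and "www9.magedu.com" is excluded because "." sorts before "5".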

Advanced use of find:

mongodb query selection supports several classes of operators: comparison, logical, element, javascript, and others;

Comparison operators:

$gt; $gte; $lt; $lte; $ne. Syntax: {field: {$gt: VALUE}}

Example:

> db.students.find({age: {$gt: 30}})

{ "_id" :ObjectId("595b3816853f9091c4bf4057"), "name" :"jerry", "age" : 40, "gender" : "M" }

{ "_id" :ObjectId("595b3835853f9091c4bf4058"), "name" :"ouyang", "age" : 80, "course" :"hamagong" }

$in; $nin. Syntax: {field: {$in: [VALUE1,VALUE2]}}

Example:

> db.students.find({age: {$in:[20,80]}})

{ "_id" :ObjectId("595b3835853f9091c4bf4058"), "name" :"ouyang", "age" : 80, "course" :"hamagong" }

{ "_id" :ObjectId("595b384f853f9091c4bf4059"), "name" :"yangguo", "age" : 20, "course" :"meivnquan" }

> db.students.find({age: {$in:[20,80]}}).limit(1)

{ "_id" :ObjectId("595b3835853f9091c4bf4058"), "name" :"ouyang", "age" : 80, "course" :"hamagong" }

> db.students.find({age: {$in:[20,80]}}).count()

2

> db.students.find({age: {$in: [20,80]}}).skip(1)

{ "_id" :ObjectId("595b384f853f9091c4bf4059"), "name" :"yangguo", "age" : 20, "course" :"meivnquan" }

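Combining conditions:

Logical operators: $or (logical OR); $and (logical AND); $not (negation); $nor (none of the conditions hold). Syntax: {$or: [{<expression1>},{<expression2>}]}

Example: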

> db.students.find({$or: [{age: {$nin:[20,40]}},{age: {$nin: [22,30]}}]})

{ "_id" :ObjectId("5955b505853f9091c4bf4056"), "name" :"tom", "age" : 22 }

{ "_id" : ObjectId("595b3816853f9091c4bf4057"),"name" : "jerry", "age" : 40, "gender": "M" }

{ "_id" :ObjectId("595b3835853f9091c4bf4058"), "name" :"ouyang", "age" : 80, "course" :"hamagong" }

{ "_id" :ObjectId("595b384f853f9091c4bf4059"), "name" :"yangguo", "age" : 20, "course" :"meivnquan" }

{ "_id" :ObjectId("595b3873853f9091c4bf405a"), "name" :"guojing", "age" : 30, "course" :"xianglong" }

> db.students.find({$and: [{age: {$nin:[20,40]}},{age: {$nin: [22,30]}}]})

{ "_id" :ObjectId("595b3835853f9091c4bf4058"), "name" :"ouyang", "age" : 80, "course" :"hamagong" }
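
The two results above follow directly from the boolean logic. As a cross-check, here is a small in-memory sketch in plain JavaScript, not MongoDB code; `ageNotIn` is a hypothetical helper that plays the role of `{age: {$nin: [...]}}`.

```javascript
// The five documents from the students collection above (ids omitted).
const students = [
  { name: "tom", age: 22 },
  { name: "jerry", age: 40, gender: "M" },
  { name: "ouyang", age: 80, course: "hamagong" },
  { name: "yangguo", age: 20, course: "meivnquan" },
  { name: "guojing", age: 30, course: "xianglong" },
];

// {age: {$nin: values}} expressed as a predicate.
const ageNotIn = (values) => (doc) => !values.includes(doc.age);

const first = ageNotIn([20, 40]);
const second = ageNotIn([22, 30]);

// $or keeps a document if either predicate holds, $and only if both hold.
const orNames = students.filter((d) => first(d) || second(d)).map((d) => d.name);
const andNames = students.filter((d) => first(d) && second(d)).map((d) => d.name);

console.log(orNames);  // all five names
console.log(andNames); // [ 'ouyang' ]
```

No age appears in both exclusion lists, so every document passes at least one $nin test and $or returns everything; only age 80 is absent from both lists, so $and returns a single document.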

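Element queries:

These select documents by properties of a field, such as whether it exists or what type it has: $exists; $mod; $type;

$exists: selects documents by the presence of a field. Syntax: {field: {$exists: <boolean>}}. true returns documents that contain the field; false returns documents that do not

$mod: divides the field's value by a divisor and returns the documents whose remainder equals the given value. Syntax: {field: {$mod: [divisor,remainder]}}

$type: returns documents in which the field's value has the given type. Syntax: {field: {$type: <BSON type>}}

Note: BSON types include double, string, object, array, binary data, undefined, boolean, date, null, regular expression, javascript, timestamp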
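
The semantics of $exists and $mod can be illustrated with plain JavaScript predicates. This is a sketch over an in-memory array, not MongoDB code; the helper names `exists` and `mod` are made up for the example.

```javascript
// Three sample documents with different sets of fields.
const docs = [
  { name: "jerry", age: 40, gender: "M" },
  { name: "ouyang", age: 80, course: "hamagong" },
  { name: "tom", age: 22 },
];

// {field: {$exists: flag}}: test whether the document has the field at all.
const exists = (field, flag) => (doc) =>
  Object.prototype.hasOwnProperty.call(doc, field) === flag;

// {field: {$mod: [divisor, remainder]}}: numeric fields with the given remainder.
const mod = (field, divisor, remainder) => (doc) =>
  typeof doc[field] === "number" && doc[field] % divisor === remainder;

console.log(docs.filter(exists("course", true)).map((d) => d.name));  // [ 'ouyang' ]
console.log(docs.filter(exists("gender", false)).map((d) => d.name)); // [ 'ouyang', 'tom' ]
console.log(docs.filter(mod("age", 20, 0)).map((d) => d.name));       // [ 'jerry', 'ouyang' ]
```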

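Update operations:

The update() method modifies data in a collection. By default it updates a single document; to update every document that matches the criteria, pass the multi option;

Syntax: db.collection.update(<query>,<update>,<options>). <query> works like the WHERE clause in SQL, and <update> works like a SET clause with LIMIT 1 attached. With the multi option in <options>, the statement behaves like an UPDATE without LIMIT;

The <update> argument has its own format: when it modifies fields rather than replacing the whole document, it may contain only expressions built from update operators. These fall roughly into field, array, and bitwise classes. Commonly used field operators:

$set: sets a field to a new value. Syntax: ({field: value},{$set: {field: new_value}})

$unset: removes the given fields. Syntax: ({field: value},{$unset: {field1: "", field2: "", ...}})

$rename: renames fields. Syntax: ({field: value},{$rename: {oldname: newname, oldname2: newname2}})

$inc: increments a field. Syntax: ({field: value},{$inc: {field1: amount}}), where {field: value} is the selection criteria and {$inc: {field1: amount}} names the field to increment and the amount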
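
What these field operators do to a single document can be sketched in plain JavaScript. The `applyUpdate` function below is hypothetical and far simpler than the server's behavior: it handles only $set, $unset, $inc and $rename on top-level fields, and the order in which it applies them is a simplification chosen for the example.

```javascript
// Applies a small subset of update operators to a copy of one document.
function applyUpdate(doc, update) {
  const out = { ...doc };
  for (const [field, value] of Object.entries(update.$set || {})) {
    out[field] = value;
  }
  for (const field of Object.keys(update.$unset || {})) {
    delete out[field]; // the value given to $unset is ignored
  }
  for (const [field, amount] of Object.entries(update.$inc || {})) {
    out[field] = (out[field] || 0) + amount; // a missing field starts at 0
  }
  for (const [oldName, newName] of Object.entries(update.$rename || {})) {
    if (oldName in out) {
      out[newName] = out[oldName];
      delete out[oldName];
    }
  }
  return out;
}

const before = { name: "jerry", age: 40, gender: "M" };
const after = applyUpdate(before, {
  $set: { course: "mage" },
  $unset: { gender: "" },
  $inc: { age: 1 },
});
console.log(after); // { name: 'jerry', age: 41, course: 'mage' }
```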

Delete operations:

> db.students.remove({name:"tom"}) #(db.mycoll.remove(query))

WriteResult({ "nRemoved" : 1 })

> db.collections

testdb.collections

> show dbs

admin (empty)

local 0.078GB

test (empty)

testdb 0.078GB

> db.students.drop() #(db.mycoll.drop(), drops the collection)

true

> db.dropDatabase() #(drops the current database)

{ "dropped" : "testdb","ok" : 1 }

> show dbs

admin (empty)

local 0.078GB

test (empty)

> quit()

Indexes:

Index types:

B+Tree;

hash;

spatial index;

full-text index;

indexes are special data structures that store a small portion of the collection's data set in an easy-to-traverse form: the index stores the value of a specific field or set of fields, ordered by the value of the field;

mongodb defines indexes at the collection level and supports indexes on any field or sub-field of the documents in a mongodb collection;
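
Why an ordered structure helps can be shown with a small sketch in plain JavaScript. It uses a sorted array with binary search as a stand-in for a B-tree, so it illustrates the idea only and is not how the server stores indexes; `collection`, `nameIndex` and `findByName` are hypothetical names.

```javascript
// Hypothetical collection: 1000 documents with unique names.
const collection = [];
for (let i = 1; i <= 1000; i++) {
  collection.push({ name: "student" + i, age: i % 120 });
}

// The "index": (key, position) entries ordered by key.
const nameIndex = collection
  .map((doc, pos) => ({ key: doc.name, pos }))
  .sort((a, b) => (a.key < b.key ? -1 : a.key > b.key ? 1 : 0));

// Binary search over the ordered entries; reports how many entries were probed.
function findByName(name) {
  let lo = 0;
  let hi = nameIndex.length - 1;
  let steps = 0;
  while (lo <= hi) {
    steps++;
    const mid = (lo + hi) >> 1;
    const key = nameIndex[mid].key;
    if (key === name) return { doc: collection[nameIndex[mid].pos], steps };
    if (key < name) lo = mid + 1;
    else hi = mid - 1;
  }
  return { doc: null, steps };
}

const hit = findByName("student500");
console.log(hit.doc, "found after probing", hit.steps, "index entries");
```

A scan without the index may have to look at all 1000 documents, while the ordered index needs at most about ten probes for this collection size.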

mongodb index types:

single field indexes:

a single field index only includes data from a single field of the documents in a collection;

mongodb supports single field indexes on fields at the top level of a document and on fields in sub-documents;

compound indexes:

a compound index includes more than one field of the documents in a collection;

multikey indexes:

a multikey index references an array and records a match if a query includes any value in the array;

geospatial indexes and queries:

geospatial indexes support location-based searches on data that is stored as either GeoJSON objects or legacy coordinate pairs;

text indexes:

text indexes support search of string content in documents;

hashed indexes:

hashed indexes maintain entries with hashes of the values of the indexed field;

index creation:

mongodb provides several options that only affect the creation of the index;

specify these options in a document as the second argument to the db.collection.ensureIndex() method:

unique index: db.addresses.ensureIndex({"user_id": 1},{unique: true})

sparse index: db.addresses.ensureIndex({"xmpp_id": 1},{sparse: true})

mongodb index-related methods:

db.mycoll.ensureIndex(field[,options]) #(creates an index; options include name, unique, dropDups, sparse)

db.mycoll.dropIndex(INDEX_NAME) #(drops an index)

db.mycoll.dropIndexes()

db.mycoll.getIndexes()

db.mycoll.reIndex() #(rebuilds indexes)

> show dbs;

admin (empty)

local 0.078GB

test (empty)

> use testdb;

switched to db testdb

> for(i=1;i<=1000;i++){db.students.insert({name: "student"+i,age:(i%120),address: "#85 LeAi Road,ShangHai,China"})}

WriteResult({ "nInserted" : 1 })

> db.students.count()

1000

> db.students.find() #(shows 20 documents at a time; type "it" to see the next 20)

Type "it" for more

> db.students.ensureIndex({name: 1})

{

"createdCollectionAutomatically": false,

"numIndexesBefore": 1,

"numIndexesAfter": 2,

"ok": 1

}

> db.students.getIndexes() #(lists the indexes on the collection; the new index is named name_1)

[

{

"v": 1,

"key": {

"_id": 1

},

"name": "_id_",

"ns": "test.students"

},

{

"v": 1,

"key": {

"name": 1

},

"name": "name_1",

"ns": "test.students"

}

]

> db.students.dropIndex("name_1") #(drops the index; the index name must be quoted)

{ "nIndexesWas" : 2,"ok" : 1 }

> db.students.ensureIndex({name:1},{unique: true}) #(unique index)

{

"createdCollectionAutomatically": false,

"numIndexesBefore": 1,

"numIndexesAfter": 2,

"ok": 1

}

> db.students.getIndexes()

[

{

"v": 1,

"key": {

"_id": 1

},

"name": "_id_",

"ns": "test.students"

},

{

"v": 1,

"unique": true,

"key": {

"name": 1

},

"name": "name_1",

"ns": "test.students"

}

]

> db.students.insert({name:"student20",age: 20}) #(with the unique index in place, inserting a duplicate value for the indexed field fails)

WriteResult({

"nInserted": 0,

"writeError": {

"code": 11000,

"errmsg": "insertDocument :: caused by :: 11000 E11000 duplicate key error index:test.students.$name_1 dup key: { :\"student20\" }"

}

})

> db.students.find({name:"student500"})

{ "_id" : ObjectId("595c3a9bcf42a9afdbede7c0"),"name" : "student500", "age" : 20,"address" : "#85 LeAi Road,ShangHai,China" }

> db.students.find({name:"student500"}).explain() #(shows the query execution plan)

{

"cursor": "BtreeCursor name_1",

"isMultiKey": false,

"n": 1,

"nscannedObjects": 1,

"nscanned": 1,

"nscannedObjectsAllPlans": 1,

"nscannedAllPlans": 1,

"scanAndOrder": false,

"indexOnly": false,

"nYields": 0,

"nChunkSkips": 0,

"millis": 0,

"indexBounds": {

"name": [

[

"student500",

"student500"

]

]

},

"server": "ebill-redis.novalocal:27017",

"filterSet": false

}

> db.students.find({name: {$gt:"student500"}}).explain()

{

"cursor": "BtreeCursor name_1",

"isMultiKey": false,

"n": 552,

"nscannedObjects": 552,

"nscanned": 552,

"nscannedObjectsAllPlans": 552,

"nscannedAllPlans": 552,

"scanAndOrder": false,

"indexOnly": false,

"nYields": 4,

"nChunkSkips": 0,

"millis": 1,

"indexBounds": {

"name": [

[

"student500",

{

}

]

]

},

"server": "ebill-redis.novalocal:27017",

"filterSet": false

}

> db.getCollectionNames()

[ "students","system.indexes" ]

]# mongod -h #(options are grouped into General options; Replication options; Master/slave options; Replica set options; Sharding options)

--bind_ip arg comma separated list of ip addresses to listen on

- all local ips by default

--port arg specify port number - 27017 by default

--maxConns arg max number of simultaneous connections - 1000000

by default (maximum number of concurrent connections)

--logpath arg log file to send write to instead of stdout - has

to be a file, not directory

--httpinterface enable http interface (web page for monitoring mongodb statistics)

--fork fork server process (run mongod in the background)

--auth run with security (clients must authenticate; see > db.createUser(Userdocument) and > db.auth(user,password))

--repair run repair on all dbs (repairs data on the next start after an unclean shutdown)

--journal enable journaling (changes are first appended sequentially to the journal and flushed to the data files at intervals, which turns random IO into sequential IO and improves performance; always enable it on a standalone mongodb instance, since it is what guarantees durability)

--journalOptions arg journal diagnostic options

--journalCommitInterval arg how often to group/batch commit (ms)

Debugging options:

--cpu periodically show cpu and iowait utilization

--sysinfo print some diagnostic system information

--slowms arg (=100) value of slow for profile and console log (queries slower than this are logged as slow queries)

--profile arg 0=off 1=slow, 2=all (profiling level)

Replication options:

--oplogSize arg size to use (in MB) for replication op log. default is

5% of disk space (i.e. large is good)

Master/slave options (old; use replica sets instead):

--master master mode

--slave slave mode

--source arg when slave: specify master as <server:port>

--only arg when slave: specify a single database to replicate

--slavedelay arg specify delay (in seconds) to be used when applying

master ops to slave

--autoresync automatically resync if slave data is stale

Replica set options:

--replSet arg arg is <setname>[/<optionalseedhostlist>]

--replIndexPrefetch arg specify index prefetching behavior (if secondary)

[none|_id_only|all]

Sharding options:

--configsvr declare this is a config db of a cluster; default port

27019; default dir /data/configdb

--shardsvr declare this is a shard db of a cluster; default port

27018

mongodb的複製:

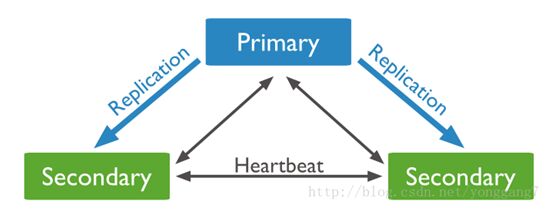

兩種類型(master/slave;replica sets):

master/slave,與mysql近似,此方式已很少用;

replica sets:

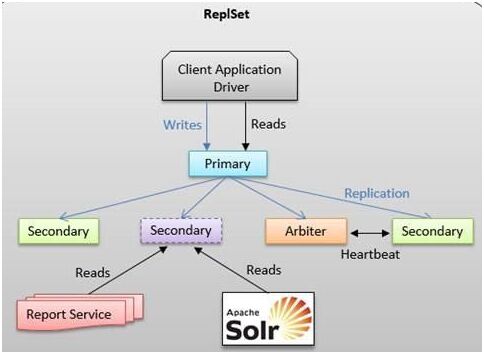

一般爲奇數個節點(至少3個節點),可使用arbiter參與選舉;

heartbeat2S,通過選舉方式實現自動失效轉移;

複製集(副本集),可實現自動故障轉移,易於實現更高級的部署操作,服務於同一數據集的多個mongodb實例,一個複製集只能有一個主節點(rw)其它都是從節點(r),主節點將數據修改操作保存在oplog中;

arbiter仲裁者,一般至少3個節點,一主兩從,從每隔2s與主節點傳遞一次heartbeat信息,若從在10s內監測不到主節點心跳,會自動在兩從節點上重新選舉一個爲主節點;

replica sets中的節點分類:

0優先級的節點,不會被選舉爲主節點,但可參與選舉過程,冷備節點,可被client讀);

被隱藏的從節點,首先是一個0優先級的從節點,且對client不可見;

延遲複製的從節點,首先是一個0優先級的從節點,且複製時間落後於主節點一個固定時長;

arbiter仲裁節點;

mongodb的複製架構:

oplog,大小固定的文件,存儲在local數據庫中,複製集中,僅主可在本地寫oplog,即使從有oplog也不會被使用,只有在從被選舉爲主時纔可在本地寫自己的oplog,mysql的二進制日誌隨着使用會越來越大,而oplog大小固定,在首次啓動mongodb時會劃分oplog大小(oplog.rs),一般爲disk空間的5%,若5%的結果小於1G,則至少被指定爲1G;

從節點有如下類型對應主節點的操作:

initial sync初始同步;

post-rollback catch-up回滾後追趕;

sharding chunk migrations切分塊遷移;

注:

local庫存放了複製集的所有元數據和oplog,local庫不參與複製過程,除此之外其它庫都被複制到從節點,用於存儲oplog的是一個名爲oplog.rs的collection,當一個實例加入某複製集後,在第一次啓動時被自動創建oplog.rs,oplog.rs的大小依賴於OS及FS,可用選項--oplogSize自定義大小;
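
The sizing rule described in these notes (5% of disk space, but at least 1G) can be written down as a tiny function. This is a sketch of the rule as stated here, in plain JavaScript; the name `defaultOplogBytes` is hypothetical, and the real default also depends on the OS, file system and server version.

```javascript
const GIB = 1024 ** 3;

// Default oplog size per the rule in these notes: 5% of disk, at least 1 GiB.
function defaultOplogBytes(diskBytes) {
  if (!Number.isFinite(diskBytes) || diskBytes <= 0) {
    throw new RangeError("diskBytes must be a positive number");
  }
  const fivePercent = Math.floor(diskBytes / 20); // integer math avoids float drift
  return Math.max(fivePercent, GIB);
}

console.log(defaultOplogBytes(100 * GIB) / GIB); // 5
console.log(defaultOplogBytes(10 * GIB) / GIB);  // 1
```

When the default is too small for the write volume, --oplogSize (in MB) overrides it at first start.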

> show dbs

admin (empty)

local 0.078GB

test 0.078GB

testdb (empty)

> use local

switched to db local

> show collections

startup_log

system.indexes

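mongodb data synchronization types:

Initial sync: used when a node has no data, or has lost its copy or its replication history. Steps: clone all databases; apply all changes to the data set (copy the oplog and apply it locally); build indexes for all collections;

Replication: after the initial sync, every write on the primary is continuously propagated to the secondaries;

Note:

Consistency (only the primary accepts writes);

--journal guarantees durability; always enable it on a standalone mongodb instance;

Replication is multi-threaded, but operations on a single collection are applied by one thread (within one namespace it is still single-threaded);

Index prefetching (data prefetching) is available to improve performance;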

purpose of replication:

replication provides redundancy and increases data availability;

with multiple copies of data on different database servers, replication protects a database from the loss of a single server;

replication also allows you to recover from hardware failure and service interruptions;

with additional copies of the data, you can dedicate one to disaster recovery, reporting, or backup;

replication in mongodb:

a replica set is a group of mongod instances that host the same data set:

one mongod, the primary, receives all write operations;

all other instances, secondaries, apply operations from the primary so that they have the same data set;

the primary accepts all write operations from clients; a replica set can have only one primary:

because only one member can accept write operations, replica sets provide strict consistency;

to support replication, the primary logs all changes to its data sets in its oplog;

the secondaries replicate the primary's oplog and apply the operations to their data sets;

secondaries' data sets reflect the primary's data set;

if the primary is unavailable, the replica set will elect a secondary to be primary;

by default, clients read from the primary; however, clients can specify a read preference to send read operations to secondaries;

arbiter:

you may add an extra mongod instance to a replica set as an arbiter;

arbiters do not maintain a data set; arbiters only exist to vote in elections;

if your replica set has an even number of members, add an arbiter to obtain a majority of votes in an election for primary;

arbiters do not require dedicated hardware;

automatic failover:

when a primary does not communicate with the other members of the set for more than 10 seconds, the replica set will attempt to select another member to become the new primary;

the first secondary that receives a majority of the votes becomes primary;
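
The advice to keep an odd number of voting members follows from simple arithmetic on majorities. The sketch below is plain JavaScript; `majority` and `faultTolerance` are illustrative helper names, not replica set commands.

```javascript
// Votes needed to win an election among `voters` voting members.
function majority(voters) {
  if (!Number.isInteger(voters) || voters < 1) {
    throw new RangeError("voters must be a positive integer");
  }
  return Math.floor(voters / 2) + 1;
}

// Members that can fail while a majority can still be formed.
function faultTolerance(voters) {
  return voters - majority(voters);
}

for (const n of [2, 3, 4, 5]) {
  console.log(n, "voters: majority", majority(n), "tolerates", faultTolerance(n));
}
// 2 voters: majority 2 tolerates 0
// 3 voters: majority 2 tolerates 1
// 4 voters: majority 3 tolerates 1
// 5 voters: majority 3 tolerates 2
```

Going from 3 to 4 voters raises the required majority without tolerating any additional failure, which is why an arbiter is added to an even-sized set rather than another full data-bearing member being strictly required.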

priority 0 replica set members:

a priority 0 member is a secondary that cannot become primary;

priority 0 members cannot trigger elections:

otherwise these members function as normal secondaries;

a priority 0 member maintains a copy of the data set, accepts read operations, and votes in elections;

configure a priority 0 member to prevent secondaries from becoming primary, which is particularly useful in multi-datacenter deployments;

in a three-member replica set, one data center hosts the primary and a secondary;

a second data center hosts one priority 0 member that cannot become primary;

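Factors that affect replica set elections:

heartbeat;

priority (default 1; a priority 0 member cannot trigger an election);

optime;

network connectivity;

network partitions;

Election mechanism:

Events that trigger an election:

a new replica set is initialized;

a secondary loses contact with the primary;

the primary steps down or becomes unavailable: > rs.stepDown() is run; a secondary has a higher priority and meets every other condition for becoming primary; or the primary can no longer reach a majority of the set;

Procedure: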

172.168.101.239 #master

172.168.101.233 #secondary

172.168.101.221 #secondary

]# vim /etc/mongod.conf #(use this configuration on all three nodes, with bind_ip set to each node's own address)

fork=true

dbpath=/mongodb/data

bind_ip=172.168.101.239

# in replicated mongo databases, specify the replica set name here

#replSet=setname

replSet=testSet #(replica set name, i.e. the cluster name)

replIndexPrefetch=_id_only #(specify index prefetching behavior (if secondary) [none|_id_only|all]; prefetching indexes makes replication more efficient and only takes effect on secondaries)

]# mongo --host 172.168.101.239 #(run on the primary)

> show dbs

admin (empty)

local 0.078GB

test 0.078GB

> use local

switched to db local

> show collections

startup_log

system.indexes

> rs.status()

{

"startupStatus": 3,

"info": "run rs.initiate(...) if not yet done for the set",

"ok": 0,

"errmsg": "can't get local.system.replset config from self or any seed(EMPTYCONFIG)"

}

> rs.initiate()

{

"info2": "no configuration explicitly specified -- making one",

"me": "172.168.101.239:27017",

"info": "Config now saved locally. Should come online in about a minute.",

"ok": 1

}

testSet:PRIMARY> rs.add("172.168.101.233:27017")

{ "ok" : 1 }

testSet:PRIMARY> rs.add("172.168.101.221:27017") #(rs.addArb(hostportstr) adds an arbiter instead)

{ "ok" : 1 }

testSet:PRIMARY> rs.status()

{

"set": "testSet",

"date": ISODate("2017-07-05T07:22:59Z"),

"myState": 1,

"members": [

{

"_id": 0,

"name": "172.168.101.239:27017",

"health": 1,

"state": 1,

"stateStr": "PRIMARY",

"uptime": 970,

"optime": Timestamp(1499238843, 1),

"optimeDate": ISODate("2017-07-05T07:14:03Z"),

"electionTime": Timestamp(1499238673, 2),

"electionDate": ISODate("2017-07-05T07:11:13Z"),

"self": true

},

{

"_id": 1,

"name": "172.168.101.233:27017",

"health": 1,

"state": 2,

"stateStr": "SECONDARY",

"uptime": 557,

"optime": Timestamp(1499238843, 1),

"optimeDate": ISODate("2017-07-05T07:14:03Z"),

"lastHeartbeat": ISODate("2017-07-05T07:22:57Z"),

"lastHeartbeatRecv": ISODate("2017-07-05T07:22:57Z"),

"pingMs": 1,

"syncingTo": "172.168.101.239:27017"

},

{

"_id": 2,

"name": "172.168.101.221:27017",

"health": 1,

"state": 2,

"stateStr": "SECONDARY",

"uptime": 536,

"optime": Timestamp(1499238843, 1),

"optimeDate": ISODate("2017-07-05T07:14:03Z"),

"lastHeartbeat": ISODate("2017-07-05T07:22:57Z"),

"lastHeartbeatRecv": ISODate("2017-07-05T07:22:57Z"),

"pingMs": 0,

"syncingTo": "172.168.101.239:27017"

}

],

"ok": 1

}

testSet:PRIMARY> use testdb

switched to db testdb

testSet:PRIMARY> db.students.insert({name: "tom",age: 22,course: "hehe"})

WriteResult({ "nInserted" : 1 })

]# mongo --host 172.168.101.233 #(run on a secondary)

testSet:SECONDARY> rs.slaveOk() #(reads on a secondary are allowed only after running this)

testSet:SECONDARY> rs.status()

{

"set": "testSet",

"date": ISODate("2017-07-05T07:24:54Z"),

"myState": 2,

"syncingTo": "172.168.101.239:27017",

"members": [

{

"_id": 0,

"name": "172.168.101.239:27017",

"health": 1,

"state": 1,

"stateStr": "PRIMARY",

"uptime": 669,

"optime": Timestamp(1499238843, 1),

"optimeDate": ISODate("2017-07-05T07:14:03Z"),

"lastHeartbeat": ISODate("2017-07-05T07:24:53Z"),

"lastHeartbeatRecv" :ISODate("2017-07-05T07:24:53Z"),

"pingMs": 0,

"electionTime": Timestamp(1499238673, 2),

"electionDate": ISODate("2017-07-05T07:11:13Z")

},

{

"_id": 1,

"name": "172.168.101.233:27017",

"health": 1,

"state": 2,

"stateStr": "SECONDARY",

"uptime": 1292,

"optime": Timestamp(1499238843, 1),

"optimeDate": ISODate("2017-07-05T07:14:03Z"),

"self": true

},

{

"_id": 2,

"name": "172.168.101.221:27017",

"health": 1,

"state": 2,

"stateStr": "SECONDARY",

"uptime": 649,

"optime": Timestamp(1499238843, 1),

"optimeDate": ISODate("2017-07-05T07:14:03Z"),

"lastHeartbeat": ISODate("2017-07-05T07:24:53Z"),

"lastHeartbeatRecv": ISODate("2017-07-05T07:24:53Z"),

"pingMs": 5,

"syncingTo": "172.168.101.239:27017"

}

],

"ok": 1

}

testSet:SECONDARY> use testdb

switched to db testdb

testSet:SECONDARY> db.students.find()

{ "_id" :ObjectId("595c95095800d50614819643"), "name" :"tom", "age" : 22, "course" : "hehe" }

]# mongo --host 172.168.101.221 #(run on the other secondary)

testSet:SECONDARY> use testdb

switched to db testdb

testSet:SECONDARY> db.students.find() #(rs.slaveOk() has not been run yet)

error: { "$err" : "not master and slaveOk=false", "code" : 13435 }

testSet:SECONDARY> rs.slaveOk()

testSet:SECONDARY> use testdb

switched to db testdb

testSet:SECONDARY> db.students.find()

{ "_id" :ObjectId("595c95095800d50614819643"), "name" :"tom", "age" : 22, "course" : "hehe" }

testSet:SECONDARY> db.students.insert({name: jowin,age: 30,course: "mage"}) #(writes are accepted only on the primary; the error shown here, however, comes from the shell itself because jowin is not quoted)

2017-07-05T15:37:05.069+0800 ReferenceError: jowin is not defined

testSet:PRIMARY> rs.stepDown() #(run on the primary: step down as primary (momentarily) (disconnects); simulates the primary going offline)

2017-07-05T15:38:46.819+0800 DBClientCursor::init call() failed

2017-07-05T15:38:46.820+0800 Error: error doing query: failed at src/mongo/shell/query.js:81

2017-07-05T15:38:46.821+0800 trying reconnect to 172.168.101.239:27017 (172.168.101.239) failed

2017-07-05T15:38:46.822+0800 reconnect 172.168.101.239:27017 (172.168.101.239) ok

testSet:SECONDARY> rs.status()

{

"set": "testSet",

"date": ISODate("2017-07-05T07:39:46Z"),

"myState": 2,

"syncingTo": "172.168.101.221:27017",

"members": [

{

"_id": 0,

"name": "172.168.101.239:27017",

"health": 1,

"state": 2,

"stateStr" :"SECONDARY",

"uptime": 1977,

"optime": Timestamp(1499239689, 1),

"optimeDate": ISODate("2017-07-05T07:28:09Z"),

"infoMessage": "syncing to: 172.168.101.221:27017",

"self": true

},

{

"_id": 1,

"name": "172.168.101.233:27017",

"health": 1,

"state": 2,

"stateStr": "SECONDARY",

"uptime": 1564,

"optime": Timestamp(1499239689, 1),

"optimeDate": ISODate("2017-07-05T07:28:09Z"),

"lastHeartbeat": ISODate("2017-07-05T07:39:45Z"),

"lastHeartbeatRecv": ISODate("2017-07-05T07:39:45Z"),

"pingMs": 0,

"lastHeartbeatMessage": "syncing to: 172.168.101.239:27017",

"syncingTo": "172.168.101.239:27017"

},

{

"_id": 2,

"name": "172.168.101.221:27017",

"health": 1,

"state": 1,

"stateStr" :"PRIMARY",

"uptime": 1543,

"optime": Timestamp(1499239689, 1),

"optimeDate": ISODate("2017-07-05T07:28:09Z"),

"lastHeartbeat": ISODate("2017-07-05T07:39:46Z"),

"lastHeartbeatRecv": ISODate("2017-07-05T07:39:46Z"),

"pingMs": 0,

"electionTime": Timestamp(1499240328, 1),

"electionDate": ISODate("2017-07-05T07:38:48Z")

}

],

"ok": 1

}

testSet:SECONDARY> #(在新選舉的primary上操作)

testSet:PRIMARY>

testSet:PRIMARY> rs.status()

……

testSet:PRIMARY>db.printReplicationInfo() #(查看oplog大小及時間)

configured oplog size: 1180.919921875MB

log length start to end: 846secs (0.24hrs)

oplog first event time: Wed Jul 05 2017 15:14:03 GMT+0800 (CST)

oplog last event time: Wed Jul 05 2017 15:28:09 GMT+0800 (CST)

now: Wed Jul 05 2017 15:42:57 GMT+0800 (CST)

testSet:SECONDARY> quit() #(stop the mongod service on 172.168.101.239; if the current primary cannot reach it within 10 s, it marks that member's health as 0)

[root@ebill-redis ~]# /etc/init.d/mongod stop

Stopping mongod: [ OK ]

testSet:PRIMARY> rs.status()

{

"set": "testSet",

"date": ISODate("2017-07-05T07:51:58Z"),

"myState": 1,

"members": [

{

"_id": 0,

"name": "172.168.101.239:27017",

"health" : 0,

"state": 8,

"stateStr": "(not reachable/healthy)",

"uptime": 0,

"optime": Timestamp(1499239689, 1),

"optimeDate": ISODate("2017-07-05T07:28:09Z"),

"lastHeartbeat": ISODate("2017-07-05T07:51:57Z"),

"lastHeartbeatRecv": ISODate("2017-07-05T07:44:24Z"),

"pingMs": 0,

"syncingTo": "172.168.101.221:27017"

},

……

testSet:PRIMARY> cfg=rs.conf() #(raise the priority of the secondary 172.168.101.233 to 2 so that it becomes primary when an election is triggered; takes effect immediately)

{

"_id": "testSet",

"version": 3,

"members": [

{

"_id": 0,

"host": "172.168.101.239:27017"

},

{

"_id": 1,

"host": "172.168.101.233:27017"

},

{

"_id": 2,

"host": "172.168.101.221:27017"

}

]

}

testSet:PRIMARY> cfg.members[1].priority=2

2

testSet:PRIMARY> rs.reconfig(cfg) #(cfg is only a local object in the shell, so the new priority reaches the replica set when rs.reconfig(cfg) runs; the member with the highest priority then becomes primary)

2017-07-05T16:04:10.101+0800 DBClientCursor::init call() failed

2017-07-05T16:04:10.103+0800 trying reconnect to 172.168.101.221:27017 (172.168.101.221) failed

2017-07-05T16:04:10.104+0800 reconnect 172.168.101.221:27017 (172.168.101.221) ok

reconnected to server after rs command (which is normal)
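The effect of member priority on who ends up as primary can be sketched in plain JavaScript. This is a simplified model, not the real election protocol: `preferredPrimary` and `prio` are hypothetical helpers, and the model assumes a missing `priority` field means 1 and that every healthy member is fully caught up.

```javascript
// Hypothetical helpers (not part of the mongo shell).
// Assumption: a member without an explicit priority has priority 1.
function prio(member) {
  return member.priority === undefined ? 1 : member.priority;
}

// Return the host that a priority-driven election would favour,
// or null when no healthy, electable member exists.
function preferredPrimary(members) {
  const eligible = members.filter(
    (m) => m.health === 1 && !m.arbiterOnly && prio(m) > 0
  );
  if (eligible.length === 0) return null;
  return eligible.reduce((best, m) => (prio(m) > prio(best) ? m : best)).host;
}

// Member list modelled on the rs.conf() output above, after priority=2 is set.
const members = [
  { host: "172.168.101.239:27017", health: 1 },
  { host: "172.168.101.233:27017", health: 1, priority: 2 },
  { host: "172.168.101.221:27017", health: 1 },
];

console.log(preferredPrimary(members));
```

In a real set the outcome also depends on how current each member's oplog is and on majority votes, which this sketch leaves out.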

testSet:PRIMARY> cfg=rs.conf() #(add an arbiter; arbiterOnly cannot be changed on a member that has already replicated data as a secondary, so first run rs.remove(hostportstr) and then rs.addArb(hostportstr))

{

"_id": "testSet",

"version": 6,

"members": [

{

"_id": 0,

"host": "172.168.101.239:27017",

"priority": 3

},

{

"_id": 1,

"host": "172.168.101.233:27017",

"priority": 2

},

{

"_id": 2,

"host": "172.168.101.221:27017"

}

]

}

testSet:PRIMARY> cfg.members[2].arbiterOnly=true

true

testSet:PRIMARY> rs.reconfig(cfg)

{

"errmsg": "exception: arbiterOnly may not change for members",

"code": 13510,

"ok": 0

}

testSet:PRIMARY> rs.printSlaveReplicationInfo()

source: 172.168.101.233:27017

syncedTo: Wed Jul 05 2017 16:08:07 GMT+0800 (CST)

0 secs (0 hrs) behind the primary

source: 172.168.101.221:27017

syncedTo: Wed Jul 05 2017 16:08:07 GMT+0800 (CST)

0 secs (0 hrs) behind the primary
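The "behind the primary" figure is the gap between the primary's last oplog time and each secondary's. A minimal sketch of that arithmetic follows, assuming member objects shaped like the `rs.status()` output earlier in these notes. `replicationLagSeconds` is a hypothetical helper, and the 5-second lag on the second secondary is invented sample data.

```javascript
// Hypothetical helper: compute per-secondary lag (in seconds) from
// rs.status()-like member objects. Not the shell's own implementation.
function replicationLagSeconds(members) {
  const primary = members.find((m) => m.stateStr === "PRIMARY");
  if (!primary) return null; // no primary, lag is undefined
  const lag = {};
  for (const m of members) {
    if (m.stateStr === "SECONDARY") {
      // Subtracting two Date objects yields milliseconds.
      lag[m.name] = (primary.optimeDate - m.optimeDate) / 1000;
    }
  }
  return lag;
}

// Sample data: the second secondary is artificially 5 s behind.
const statusMembers = [
  { name: "172.168.101.239:27017", stateStr: "PRIMARY", optimeDate: new Date("2017-07-05T08:08:07Z") },
  { name: "172.168.101.233:27017", stateStr: "SECONDARY", optimeDate: new Date("2017-07-05T08:08:07Z") },
  { name: "172.168.101.221:27017", stateStr: "SECONDARY", optimeDate: new Date("2017-07-05T08:08:02Z") },
];

console.log(replicationLagSeconds(statusMembers));
```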

mongodb sharding:

數據量大導致單機性能不足;

注:

mysql的分片藉助Gizzard(twitter)、HiveDB、MySQLproxy+HSCALE、Hibernate shard(google)、Pyshard;

寫離散、讀要集中;

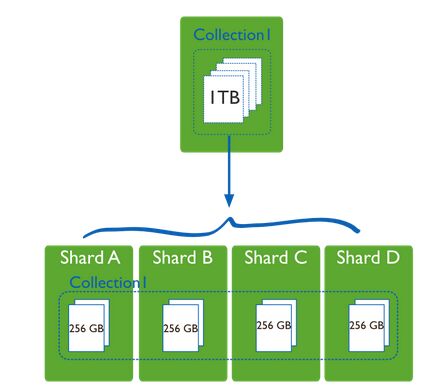

只要是集羣,各node間時間要同步;

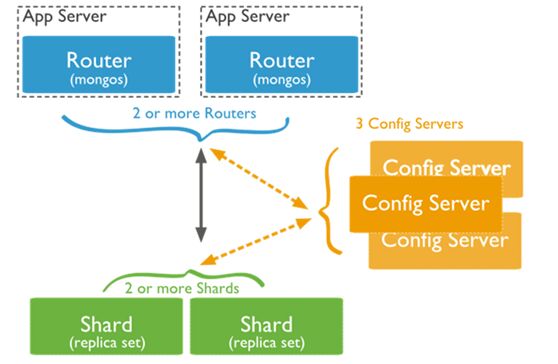

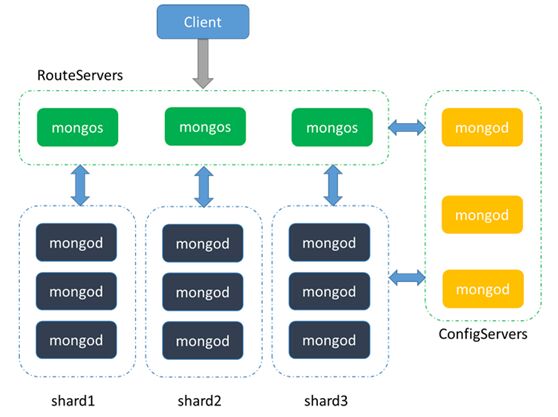

分片架構中的角色:

mongos #router or proxy,生產中mongos要作HA;

config server #元數據服務器,元數據實際是collection的index,生產中configserver3臺,能實現內部選舉;

shard #數據節點,即mongod實例節點,生產中至少3臺;

range #基於範圍切片

list #基於列表分片

hash #基於hash分片

sharding is the process of storing data records across multiple machines and is mongodb's approach to meeting the demands of data growth;

as the size of the data increases, a single machine may not be sufficient to store the data or to provide acceptable read and write throughput;

sharding solves the problem with horizontal scaling;

with sharding, you add more machines to support data growth and the demands of read and write operations;

purpose of sharding:

database systems with large data sets and high-throughput applications can challenge the capacity of a single server;

high query rates can exhaust the cpu capacity of the server;

larger data sets exceed the storage capacity of a single machine;

finally, working set sizes larger than the system's ram stress the IO capacity of disk drives;

sharding in mongodb:

a sharded cluster has the following components: shards, query routers and config servers:

shards store the data. to provide high availability and data consistency, in a production sharded cluster each shard is a replica set;

query routers, or mongos instances, interface with client applications and direct operations to the appropriate shard or shards;

the query router processes and targets operations to shards and then returns results to the clients;

a sharded cluster can contain more than one query router to divide the client request load;

a client sends requests to one query router;

most sharded clusters have many query routers;

config servers store the cluster's metadata:

this data contains a mapping of the cluster's data set to the shards;

the query router uses this metadata to target operations to specific shards;

production sharded clusters have exactly 3 config servers;

data partitioning:

shard keys:

to shard a collection, you need to select a shard key;

a shard key is either an indexed field or an indexed compound field that exists in every document in the collection;

mongodb divides the shard key values into chunks and distributes the chunks evenly across the shards;

to divide the shard key values into chunks, mongodb uses either range based partitioning or hash based partitioning;

range based sharding:

for range-based sharding, mongodb divides the data set into ranges determined by the shard key values;

hash based sharding:

for hash based partitioning, mongodb computes a hash of a field's value, and then uses these hashes to create chunks;

performance distinctions between range and hash based partitioning:

range based partitioning supports more efficient range queries;

however, range based partitioning can result in an uneven distribution of data, which may negate some of the benefits of sharding;

hash based partitioning, by contrast, ensures an even distribution of data at the expense of efficient range queries;

the random distribution makes it unlikely that a range query on the shard key can target only a few shards; it will more likely have to query every shard to return a result;
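The trade-off between the two partitioning schemes can be illustrated with a toy model in plain JavaScript. All of it is an assumption made for illustration: four shards, an `age` shard key in 0..119 cut into equal-width ranges, and a multiplicative hash that merely stands in for MongoDB's real hash function.

```javascript
const SHARD_COUNT = 4; // assumed cluster size
const MAX_AGE = 120;   // ages 0..119, as in the students example

// Range partitioning: contiguous, equal-width key ranges per shard.
function rangeShard(age) {
  const width = MAX_AGE / SHARD_COUNT; // 30 key values per shard
  return Math.min(SHARD_COUNT - 1, Math.floor(age / width));
}

// Hash partitioning: a toy multiplicative hash (NOT MongoDB's hash).
// The top two bits of the 32-bit product select one of four shards.
function hashShard(age) {
  const h = (age * 2654435761) >>> 0; // wrap to unsigned 32 bits
  return h >>> 30;
}

// Which shards must a range query low <= age <= high contact?
function shardsTouched(low, high, shardOf) {
  const touched = new Set();
  for (let age = low; age <= high; age++) touched.add(shardOf(age));
  return touched;
}

const rangeTouched = shardsTouched(30, 39, rangeShard);
const hashTouched = shardsTouched(30, 39, hashShard);
console.log("range partitioning touches", rangeTouched.size, "shard(s)");
console.log("hash partitioning touches", hashTouched.size, "shard(s)");
```

Under these assumptions the range scheme answers the query from a single shard, while the hash scheme scatters neighbouring keys and has to contact several.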

maintaining a balanced data distribution:

mongodb keeps the cluster balanced using two background processes: splitting and the balancer;

splitting:

a background process that keeps chunks from growing too large;

when a chunk grows beyond a specified chunk size, mongodb splits the chunk in half;

inserts and updates trigger splits;

splits are an efficient metadata change;

to create splits, mongodb does not migrate any data or affect the shards;
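A split can be pictured as rewriting one chunk's bounds into two, without moving data between shards. The sketch below is a simplified model with assumptions of its own: `splitIfTooLarge` is a hypothetical function, chunk size is counted in documents rather than bytes, and shard-key values within a chunk are distinct and sorted.

```javascript
// A chunk is modelled as { min, max, keys } covering the range [min, max).
// Assumption: size is measured in documents, not megabytes.
function splitIfTooLarge(chunk, maxDocs) {
  if (chunk.keys.length <= maxDocs) return [chunk]; // small enough, no split
  const mid = Math.floor(chunk.keys.length / 2);
  const splitPoint = chunk.keys[mid]; // median key becomes the new boundary
  return [
    { min: chunk.min, max: splitPoint, keys: chunk.keys.slice(0, mid) },
    { min: splitPoint, max: chunk.max, keys: chunk.keys.slice(mid) },
  ];
}

const bigChunk = { min: 1, max: 7, keys: [1, 2, 3, 4, 5, 6] };
const pieces = splitIfTooLarge(bigChunk, 4);
console.log(pieces.map((c) => "[" + c.min + ", " + c.max + ") holds " + c.keys.length + " docs"));
```

Both halves stay on the same shard in this model; only the boundary metadata changes, which is why a split is cheap compared with a migration.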

balancing:

the balancer is a background process that manages chunk migrations;

the balancer can run from any of the query routers in a cluster;

when the distribution of a sharded collection in a cluster is uneven, the balancer process migrates chunks from the shard that has the largest number of chunks to the shard with the least number of chunks until the collection balances;

the shards manage chunk migrations as a background operation;

during migration, all requests for a chunk's data address the origin shard;
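The balancing loop described above can be sketched as repeatedly moving one chunk from the fullest shard to the emptiest. This is an illustrative model only: `balance` is a hypothetical function, the threshold value is an assumption, and real migrations copy documents between shards rather than adjusting counters.

```javascript
// chunkCounts maps shard name -> number of chunks it holds.
// Chunks move one at a time until the spread drops below the threshold.
function balance(chunkCounts, threshold) {
  const limit = Math.max(2, threshold); // a limit below 2 would never settle
  const counts = { ...chunkCounts };    // do not mutate the caller's object
  const migrations = [];
  while (true) {
    const shards = Object.keys(counts);
    const most = shards.reduce((a, b) => (counts[b] > counts[a] ? b : a));
    const least = shards.reduce((a, b) => (counts[b] < counts[a] ? b : a));
    if (counts[most] - counts[least] < limit) break; // balanced enough
    counts[most] -= 1;
    counts[least] += 1;
    migrations.push({ from: most, to: least });
  }
  return { counts, migrations };
}

const balanced = balance({ shard0000: 10, shard0001: 2 }, 2);
console.log(balanced.counts, balanced.migrations.length + " migrations");
```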

primary shard:

every database has a primary shard that holds all the un-sharded collections in that database;

broadcast operations:

mongos instances broadcast queries to all shards for the collection unless the mongos can determine which shard or subset of shards stores this data;

targeted operations:

all insert() operations target one shard;

all single update() operations (including upserts) and remove() operations must target one shard;

sharded cluster metadata:

config servers store the metadata for a sharded cluster:

the metadata reflects the state and organization of the sharded data sets and system;

the metadata includes the list of chunks on every shard and the ranges that define the chunks;

the mongos instances cache this data and use it to route read and write operations to shards;

config servers store the metadata in the config database;
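How a router might use the cached chunk map to decide between a targeted and a broadcast operation can be sketched as follows. This is a minimal model under stated assumptions: `targetShards` is a hypothetical function, the chunk boundaries are invented, and only equality conditions on the shard key are handled.

```javascript
// Invented chunk map for a collection sharded on "age": range [min, max) -> shard.
const chunkMap = [
  { min: -Infinity, max: 40, shard: "shard0000" },
  { min: 40, max: 80, shard: "shard0001" },
  { min: 80, max: Infinity, shard: "shard0000" },
];
const allShards = ["shard0000", "shard0001"];

// Return the shards a query must be sent to.
function targetShards(query, shardKey) {
  // Without the shard key the router cannot narrow the target: broadcast.
  if (!(shardKey in query)) return allShards.slice();
  const value = query[shardKey];
  const chunk = chunkMap.find((c) => value >= c.min && value < c.max);
  return [chunk.shard]; // targeted operation
}

console.log(targetShards({ age: 50 }, "age"));          // targeted
console.log(targetShards({ name: "student1" }, "age")); // broadcast
```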

Procedure:

172.168.101.228 #mongos

172.168.101.239 #config server

172.168.101.233 & 172.168.101.221 #shard{1,2}

On the config server:

]# vim /etc/mongod.conf

dbpath=/mongodb/data

configsvr=true

bind_ip=172.168.101.239

httpinterface=true

]# /etc/init.d/mongod start

Starting mongod: [ OK ]

]# ss -tnl | egrep "27019|28019"

LISTEN 0 128 172.168.101.239:27019 *:*

LISTEN 0 128 172.168.101.239:28019 *:*

On shard{1,2}:

]# vim /etc/mongod.conf

dbpath=/mongodb/data

bind_ip=172.168.101.233

httpinterface=true

]# /etc/init.d/mongod start

Starting mongod: [ OK ]

]# ss -tnl | egrep "27017|28017"

LISTEN 0 128 172.168.101.233:27017 *:*

LISTEN 0 128 172.168.101.233:28017 *:*

On the mongos node:

]# yum -y localinstall mongodb-org-mongos-2.6.4-1.x86_64.rpm mongodb-org-server-2.6.4-1.x86_64.rpm mongodb-org-shell-2.6.4-1.x86_64.rpm mongodb-org-tools-2.6.4-1.x86_64.rpm #(only the mongos node needs mongodb-org-mongos-2.6.4-1.x86_64.rpm; the other nodes only install server, shell and tools)

]# mongos --configdb=172.168.101.239:27019 --logpath=/var/log/mongodb/mongos.log --httpinterface --fork

2017-07-06T15:00:32.926+0800 warning: running with 1 config server should be done only for testing purposes and is not recommended for production

about to fork child process, waiting until server is ready for connections.

forked process: 5349

child process started successfully, parent exiting

]# ss -tnl | egrep "27017|28017"

LISTEN 0 128 *:27017 *:*

LISTEN 0 128 *:28017 *:*

]# mongo --host 172.168.101.228

MongoDB shell version: 2.6.4

connecting to: 172.168.101.228:27017/test

Welcome to the MongoDB shell.

For interactive help, type "help".

For more comprehensive documentation, see

http://docs.mongodb.org/

Questions? Try the support group

http://groups.google.com/group/mongodb-user

mongos> sh.help()

……

mongos> sh.status()

--- Sharding Status ---

sharding version: {

"_id": 1,

"version": 4,

"minCompatibleVersion": 4,

"currentVersion": 5,

"clusterId": ObjectId("595ddf9b52f218b1b6f9fcf2")

}

shards:

databases:

{ "_id" : "admin", "partitioned" : false, "primary" : "config" }

mongos> sh.addShard("172.168.101.233")

{ "shardAdded" : "shard0000", "ok" : 1 }

mongos> sh.addShard("172.168.101.221")

{ "shardAdded" : "shard0001", "ok" : 1 }

mongos> sh.status()

--- Sharding Status ---

sharding version: {

"_id": 1,

"version": 4,

"minCompatibleVersion": 4,

"currentVersion": 5,

"clusterId": ObjectId("595ddf9b52f218b1b6f9fcf2")

}

shards:

{ "_id" : "shard0000", "host" :"172.168.101.233:27017" }

{ "_id" : "shard0001", "host" :"172.168.101.221:27017" }

databases:

{ "_id" : "admin", "partitioned" : false, "primary" : "config" }

mongos> sh.enableSharding("testdb") #(enable sharding on the testdb database)

{ "ok" : 1 }

mongos> sh.status()

--- Sharding Status ---

sharding version: {

"_id": 1,

"version": 4,

"minCompatibleVersion": 4,

"currentVersion": 5,

"clusterId": ObjectId("595ddf9b52f218b1b6f9fcf2")

}

shards:

{ "_id" : "shard0000", "host" :"172.168.101.233:27017" }

{ "_id" : "shard0001", "host" : "172.168.101.221:27017"}

databases:

{ "_id" : "admin", "partitioned" : false, "primary" : "config" }

{ "_id" : "test", "partitioned" : false, "primary" : "shard0001" }

{ "_id" : "testdb", "partitioned" : true, "primary" : "shard0001" }

mongos> for (i=1;i<=10000;i++){db.students.insert({name: "student"+i,age: (i%120),classes:"class"+(i%10),address: "www.magedu.com"})}

WriteResult({ "nInserted" : 1 })

mongos> db.students.find().count()

10000

mongos> sh.shardCollection("testdb.students",{age: 1})

{

"proposedKey": {

"age": 1

},

"curIndexes": [

{

"v": 1,

"key": {

"_id": 1

},

"name": "_id_",

"ns": "testdb.students"

}

],

"ok": 0,

"errmsg": "please create an index that starts with the shard key before sharding."

}
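The error above says the collection already holds data but has no index whose leading fields are the shard key. In the shell the usual remedy is to create such an index first, for example with db.students.ensureIndex({age: 1}) (ensureIndex appears in the db.mycoll.help() output of this 2.6.4 shell), and then retry sh.shardCollection. The prefix rule itself can be sketched in plain JavaScript; `indexStartsWithShardKey` is a hypothetical helper that compares field names only and ignores sort direction.

```javascript
// Hypothetical check: does the index key pattern start with the shard key fields?
// Relies on JavaScript preserving insertion order of (non-numeric) object keys.
function indexStartsWithShardKey(indexKey, shardKey) {
  const indexFields = Object.keys(indexKey);
  const shardFields = Object.keys(shardKey);
  if (shardFields.length > indexFields.length) return false;
  return shardFields.every((field, i) => indexFields[i] === field);
}

const proposedKey = { age: 1 };
console.log(indexStartsWithShardKey({ _id: 1 }, proposedKey));          // only index present in the error output
console.log(indexStartsWithShardKey({ age: 1 }, proposedKey));          // index on the shard key itself
console.log(indexStartsWithShardKey({ age: 1, name: 1 }, proposedKey)); // compound index with the key as prefix
```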

sh.moveChunk(fullName,find,to) move the chunk where 'find' is to 'to' (name of shard); this is manual balancing and is not recommended

mongos> use admin

switched to db admin

mongos> db.runCommand("listShards")

{

"shards": [

{

"_id": "shard0000",

"host": "172.168.101.233:27017"

},

{

"_id": "shard0001",

"host": "172.168.101.221:27017"

}

],

"ok": 1

}

mongos> sh.isBalancerRunning() #(returns true only while the balancer is actually working)

false

mongos> sh.getBalancerState()

true

mongos> db.printShardingStatus() #(same as sh.status())

--- Sharding Status ---

……

Note:

> help

db.help() help on db methods

db.mycoll.help() help on collection methods

sh.help() sharding helpers

rs.help() replica set helpers

help admin administrative help

help connect connecting to a db help

help keys key shortcuts

help misc misc things to know

help mr mapreduce

show dbs show database names

show collections show collections in current database

show users show users in current database

show profile show most recent system.profile entries with time >= 1ms

show logs show the accessible logger names

show log [name] prints out the last segment of log in memory, 'global' is default

use <db_name> set current database

db.foo.find() list objects in collection foo

db.foo.find( { a : 1 } ) list objects in foo where a == 1

it result of the last line evaluated; use to further iterate

DBQuery.shellBatchSize = x set default number of items to display on shell

exit quit the mongo shell

> db.help()

DB methods:

db.adminCommand(nameOrDocument) - switches to 'admin' db, and runs command [ just calls db.runCommand(...) ]

db.auth(username, password)

db.cloneDatabase(fromhost)

db.commandHelp(name) returns the help for the command

db.copyDatabase(fromdb, todb, fromhost)

db.createCollection(name, { size : ..., capped : ..., max : ... } )

db.createUser(userDocument)

db.currentOp() displays currently executing operations in the db

db.dropDatabase()

db.eval(func, args) run code server-side

db.fsyncLock() flush data to disk and lock server for backups

db.fsyncUnlock() unlocks server following a db.fsyncLock()

db.getCollection(cname) same as db['cname'] or db.cname

db.getCollectionNames()

db.getLastError() - just returns the err msg string

db.getLastErrorObj() - return full status object

db.getMongo() get the server connection object

db.getMongo().setSlaveOk() allow queries on a replication slave server

db.getName()

db.getPrevError()

db.getProfilingLevel() - deprecated

db.getProfilingStatus() - returns if profiling is on and slow threshold

db.getReplicationInfo()

db.getSiblingDB(name) get the db at the same server as this one

db.getWriteConcern() - returns the write concern used for any operations on this db, inherited from server object if set

db.hostInfo() get details about the server's host

db.isMaster() check replica primary status

db.killOp(opid) kills the current operation in the db

db.listCommands() lists all the db commands

db.loadServerScripts() loads all the scripts in db.system.js

db.logout()

db.printCollectionStats()

db.printReplicationInfo()

db.printShardingStatus()

db.printSlaveReplicationInfo()

db.dropUser(username)

db.repairDatabase()

db.resetError()

db.runCommand(cmdObj) run a database command. if cmdObj is a string, turns it into { cmdObj : 1 }

db.serverStatus()

db.setProfilingLevel(level,<slowms>) 0=off 1=slow 2=all

db.setWriteConcern( <write concern doc> ) - sets the write concern for writes to the db

db.unsetWriteConcern( <write concern doc> ) - unsets the write concern for writes to the db

db.setVerboseShell(flag) display extra information in shell output

db.shutdownServer()

db.stats()

db.version() current version of the server

> db.mycoll.help()

DBCollection help

db.mycoll.find().help() - show DBCursor help

db.mycoll.count()

db.mycoll.copyTo(newColl) - duplicates collection by copying all documents to newColl; no indexes are copied.

db.mycoll.convertToCapped(maxBytes) - calls {convertToCapped:'mycoll', size:maxBytes}} command

db.mycoll.dataSize()

db.mycoll.distinct( key ) - e.g. db.mycoll.distinct( 'x' )

db.mycoll.drop() drop the collection

db.mycoll.dropIndex(index) - e.g. db.mycoll.dropIndex( "indexName" ) or db.mycoll.dropIndex( { "indexKey" : 1 } )

db.mycoll.dropIndexes()

db.mycoll.ensureIndex(keypattern[,options]) - options is an object with these possible fields: name, unique, dropDups

db.mycoll.reIndex()

db.mycoll.find([query],[fields]) - query is an optional query filter. fields is optional set of fields to return.

e.g. db.mycoll.find( {x:77} , {name:1, x:1} )

db.mycoll.find(...).count()

db.mycoll.find(...).limit(n)

db.mycoll.find(...).skip(n)

db.mycoll.find(...).sort(...)

db.mycoll.findOne([query])

db.mycoll.findAndModify( { update : ... , remove : bool [, query: {}, sort: {}, 'new': false] } )

db.mycoll.getDB() get DB object associated with collection

db.mycoll.getPlanCache() get query plan cache associated with collection

db.mycoll.getIndexes()

db.mycoll.group( { key : ..., initial: ..., reduce : ...[, cond: ...] } )

db.mycoll.insert(obj)

db.mycoll.mapReduce( mapFunction , reduceFunction , <optional params> )

db.mycoll.aggregate( [pipeline], <optional params> ) - performs an aggregation on a collection; returns a cursor

db.mycoll.remove(query)

db.mycoll.renameCollection( newName , <dropTarget> ) renames the collection.

db.mycoll.runCommand( name , <options> ) runs a db command with the given name where the first param is the collection name

db.mycoll.save(obj)

db.mycoll.stats()

db.mycoll.storageSize() - includes free space allocated to this collection

db.mycoll.totalIndexSize() - size in bytes of all the indexes

db.mycoll.totalSize() - storage allocated for all data and indexes

db.mycoll.update(query, object[, upsert_bool, multi_bool]) - instead of two flags, you can pass an object with fields: upsert, multi

db.mycoll.validate( <full> ) - SLOW

db.mycoll.getShardVersion() - only for use with sharding

db.mycoll.getShardDistribution() - prints statistics about data distribution in the cluster

db.mycoll.getSplitKeysForChunks( <maxChunkSize> ) - calculates split points over all chunks and returns splitter function

db.mycoll.getWriteConcern() - returns the write concern used for any operations on this collection, inherited from server/db if set

db.mycoll.setWriteConcern( <write concern doc> ) - sets the write concern for writes to the collection

db.mycoll.unsetWriteConcern( <write concern doc> ) - unsets the write concern for writes to the collection

> db.mycoll.find().help()

find() modifiers

.sort({...} )

.limit(n )

.skip(n )

.count(applySkipLimit) - total # of objects matching query. by default ignores skip,limit

.size() - total # of objects cursor would return, honors skip,limit

.explain([verbose])

.hint(...)

.addOption(n) - adds op_query options -- see wire protocol

._addSpecial(name, value) - http://dochub.mongodb.org/core/advancedqueries#AdvancedQueries-Metaqueryoperators

.batchSize(n) - sets the number of docs to return per getMore

.showDiskLoc() - adds a $diskLoc field to each returned object

.min(idxDoc)

.max(idxDoc)

.comment(comment)

.snapshot()

.readPref(mode, tagset)

Cursor methods

.toArray() - iterates through docs and returns an array of the results

.forEach( func )

.map( func )

.hasNext()

.next()

.objsLeftInBatch() - returns count of docs left in current batch (when exhausted, a new getMore will be issued)

.itcount() - iterates through documents and counts them

.pretty() - pretty print each document, possibly over multiple lines

> rs.help()

rs.status() { replSetGetStatus : 1 } checks repl set status

rs.initiate() { replSetInitiate : null } initiates set with default settings

rs.initiate(cfg) { replSetInitiate : cfg } initiates set with configuration cfg

rs.conf() get the current configuration object from local.system.replset

rs.reconfig(cfg) updates the configuration of a running replica set with cfg (disconnects)

rs.add(hostportstr) add a new member to the set with default attributes (disconnects)

rs.add(membercfgobj) add a new member to the set with extra attributes (disconnects)

rs.addArb(hostportstr) add a new member which is arbiterOnly:true (disconnects)

rs.stepDown([secs]) step down as primary (momentarily) (disconnects)

rs.syncFrom(hostportstr) make a secondary to sync from the given member

rs.freeze(secs) make a node ineligible to become primary for the time specified

rs.remove(hostportstr) remove a host from the replica set (disconnects)

rs.slaveOk() shorthand for db.getMongo().setSlaveOk()

rs.printReplicationInfo() check oplog size and time range

rs.printSlaveReplicationInfo() check replica set members and replication lag

db.isMaster() check who is primary

reconfiguration helpers disconnect from the database so the shell will display

an error, even if the command succeeds.

see also http://<mongod_host>:28017/_replSet for additional diagnostic info

mongos> sh.help()

sh.addShard( host ) server:port OR setname/server:port

sh.enableSharding(dbname) enables sharding on the database dbname

sh.shardCollection(fullName,key,unique) shards the collection

sh.splitFind(fullName,find) splits the chunk that find is in at the median

sh.splitAt(fullName,middle) splits the chunk that middle is in at middle

sh.moveChunk(fullName,find,to) move the chunk where 'find' is to 'to' (name of shard)

sh.setBalancerState( <bool on or not> ) turns the balancer on or off true=on, false=off

sh.getBalancerState() return true if enabled

sh.isBalancerRunning() return true if the balancer has work in progress on any mongos

sh.addShardTag(shard,tag) adds the tag to the shard

sh.removeShardTag(shard,tag) removes the tag from the shard

sh.addTagRange(fullName,min,max,tag) tags the specified range of the given collection

sh.status() prints a general overview of the cluster

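《NoSQL數據庫入門》 (Introduction to NoSQL Databases)

《MongoDB權威指南》 (MongoDB: The Definitive Guide)

《深入學習MongoDB》 (In-Depth MongoDB)

Learning path:

basic concepts;

installation;

data types and data model;

integration with applications;

shell commands;

master-slave replication;

sharding;

administration and maintenance;

application case studies;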

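mongodb:

A document-oriented NoSQL database;

The name derives from humongous (enormous);

Written in C++; supports linux/windows (32bit/64bit), solaris 64bit, OS X 64bit;

A document-oriented database developed by 10gen (a cloud platform company): the 10gen team began developing mongodb in October 2007 and first released it in February 2009; the latest version at the time of writing is 3.4.2;

Open source software under the GNU AGPL v3.0 license; 10gen also sells a commercial license (enterprise-grade support, training and consulting services);

https://repo.mongodb.com/yum/redhat/

mongodb is one of the most successful NoSQL products, the one closest to a relational DB, and the one most likely to replace it;

mongodb needs little tuning in most cases: a good grasp of indexes is usually enough, and its built-in auto-sharding cluster absorbs much of the load;

The mongodb query language grew out of JavaScript;

LAMP-->LNMP (nginx, mongodb, python)

Note:

hadoop and redis were started by individuals, whereas mongodb was developed by a company;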

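Technical features:

Document-oriented storage engine that conveniently supports unstructured data;

Full index support: an index can be built on any attribute;

Replication and high availability built into the database;

Automatic sharded clustering supported by the database itself;

Rich document-based query capabilities;

Atomic operations;

map/reduce support;

GridFS;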

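Unstructured data (examples: survey forms, company equipment management):

Data whose column structure cannot be fixed in advance (whereas SQL, Structured Query Language, requires the table structure, i.e. the columns, to be defined first);

Common misconception: that multimedia data and big data are unstructured data;

Pain points of unstructured data: the schema/model cannot be fixed; the data structure keeps changing; DBAs and developers come under unnecessary extra pressure;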

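Document (row)

Think of a document as a survey form: one row is one form, made up of several "key:value" ("attribute name: value") pairs; across rows, keys (attributes) that are not fixed can still be indexed, and values are typed;

Example:

{"foo":3,"greeting":"Hello,world!"}

Number:

{"foo":3}

String:

{"foo":"3"}

Keys are case-sensitive:

{"foo":3}

{"Foo":3}

Keys must not be duplicated (the following is not a valid document):

{"greeting":"Hello,world!","greeting":"Hello,MongoDB!"}

Documents can embed other documents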
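The same points can be checked with plain JavaScript objects, which is what the mongo shell uses to express documents. This sketch only shows JavaScript's behaviour, not the server's: value types are distinct, key names are case-sensitive, and a duplicated key in a JSON text silently keeps the last value, which is one reason to avoid duplicates. The `address` sub-document is an invented example.

```javascript
// Values are typed: the number 3 and the string "3" are different.
const asNumber = { foo: 3 };
const asString = { foo: "3" };
console.log(typeof asNumber.foo, typeof asString.foo);

// Keys are case-sensitive: "foo" and "Foo" are different keys.
const lower = { foo: 3 };
console.log("foo" in lower, "Foo" in lower);

// A duplicated key does not give two values; JSON.parse keeps the last one.
const dup = JSON.parse('{"greeting":"Hello,world!","greeting":"Hello,MongoDB!"}');
console.log(dup.greeting);

// A document can embed another document (invented example fields).
const nested = { name: "student1", address: { site: "www.magedu.com" } };
console.log(nested.address.site);
```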

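Collection:

A collection is a group of documents;

A document is analogous to a row in a relational database;

A collection is analogous to a table in a relational database;

Collections are schema-free: the documents in one collection can take all sorts of shapes and need no fixed structure;

Collection naming;

Database:

A database is made up of multiple collections;

For database naming and namespace rules, see 《MongoDB the definitive guide.pdf》;

Special databases;

Note:

Collection naming: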

a collection is identified by its name. collection names can be any utf-8 string, with a few restrictions:

1.the empty string ("") is not a valid collection name;

2.collection names may not contain the character \0 (the null character) because this delineates the end of a collection name;

3.you should not create any collections that start with system., a prefix reserved for system collections. for example, the system.users collection contains the database's users, and the system.namespaces collection contains information about all of the database's collections.

4.user-created collections should not contain the reserved character $ in the name. the various drivers available for the database do support using $ in collection names because some system-generated collections contain it. you should not use $ in a name unless you are accessing one of these collections.

Database naming:

1.the empty string ("") is not a valid database name;

2.a database name cannot contain any of these characters: ' ' (single space), ., $, /, \, \0 (the null character);

3.database names should be all lowercase;

4.database names are limited to a maximum of 64 bytes;

Default databases:

admin (this is the "root" database, in terms of authentication. if a user is added to the admin database, the user automatically inherits permissions for all databases. there are also certain server-wide commands that can be run only from the admin database, such as listing all of the databases or shutting down the server);

local (this database will never be replicated and can be used to store any collections that should be local to a single server (see chapter 9 for more information about replication and the local database));

config (when mongo is being used in a sharded setup (see chapter 10), the config database is used internally to store information about the shards);

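Note:

Condor Cluster, 2010-12-07: the US Air Force Research Laboratory recently built a supercomputer billed as the fastest in the US Department of Defense, the "Condor Cluster". It is made up of 1,760 Sony PS3 game consoles, reaches 500 trillion floating-point operations per second, and is the 33rd largest supercomputer in the world. The project began four years earlier, when each PS3 console cost about 400 US dollars; the PS3 consoles that form the core of the machine cost about 2 million US dollars in total. Mark Barnell, director of high performance computing at the Air Force Research Laboratory, said this is only 5% to 10% of the cost of an equivalent system assembled from off-the-shelf computer parts. Another advantage of the PS3-based supercomputer is its energy efficiency: it consumes only about 10% of the energy of comparable supercomputers. Besides the PS3 consoles, the machine also includes 168 separate graphics processing units and 84 coordinating servers;

The "Tianhe-1" (天河一號) supercomputer was developed by China's National University of Defense Technology and is deployed at the National Supercomputing Center in Tianjin; its measured speed reaches 2,570 trillion operations per second. On 2009-10-29 Tianhe-1 was unveiled in Changsha, Hunan, as the first Chinese-built petaflop-class supercomputer; its peak performance was 1,206 trillion double-precision floating-point operations per second and its measured LINPACK performance was 563.1 trillion per second; cost: 1 billion RMB;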

mongodb queries:

| Operation | SQL | mongodb |
| --- | --- | --- |
| All records | SELECT * FROM users; | db.users.find(); |
| Records with age=33 | SELECT * FROM users WHERE age=33; | db.users.find({age:33}); |
| Field (sub-key) projection | SELECT a,b FROM users WHERE age=33; | db.users.find({age:33},{a:1,b:1}); |
| Sorting | SELECT * FROM users WHERE age=33 ORDER BY name; | db.users.find({age:33}).sort({name:1}); |
| Comparison | SELECT * FROM users WHERE age>33; | db.users.find({age:{$gt:33}}); |
| Regex (fuzzy match) | SELECT * FROM users WHERE name LIKE "Joe%"; | db.users.find({name:/^Joe/}); |
| Skip and limit | SELECT * FROM users LIMIT 10 OFFSET 20; | db.users.find().limit(10).skip(20); |
| OR | SELECT * FROM users WHERE a=1 OR b=2; | db.users.find({$or:[{a:1},{b:2}]}); |
| Return only one record | SELECT * FROM users LIMIT 1; | db.users.findOne(); |
| distinct aggregation | SELECT DISTINCT last_name FROM users; | db.users.distinct("last_name"); |
| count aggregation | SELECT COUNT(age) FROM users; | db.users.find({age:{"$exists":true}}).count(); |
| Query plan | EXPLAIN SELECT * FROM users WHERE z=3; | db.users.find({z:3}).explain(); |
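To make the meaning of a few of these query documents concrete, here is a tiny in-memory matcher in plain JavaScript. It is an illustration only, not how MongoDB evaluates queries: `matches` and `find` are hypothetical functions, the `users` array is invented sample data, and only equality, `$gt`, `$exists`, `$or` and regular expressions are handled.

```javascript
// Hypothetical matcher: does one document satisfy a query document?
function matches(doc, query) {
  return Object.entries(query).every(([field, cond]) => {
    if (field === "$or") return cond.some((sub) => matches(doc, sub));
    if (cond instanceof RegExp) {
      return typeof doc[field] === "string" && cond.test(doc[field]);
    }
    if (cond !== null && typeof cond === "object") {
      // Operator document such as { $gt: 33 } or { $exists: true }.
      return Object.entries(cond).every(([op, arg]) => {
        if (op === "$gt") return doc[field] > arg;
        if (op === "$exists") return (field in doc) === arg;
        throw new Error("unsupported operator: " + op);
      });
    }
    return doc[field] === cond; // plain equality, e.g. { age: 33 }
  });
}

// Invented sample data standing in for the users collection.
const users = [
  { name: "Joe", age: 33, a: 1, b: 0 },
  { name: "Joey", age: 40, a: 0, b: 2 },
  { name: "Ann", age: 25, a: 0, b: 0 },
];
const find = (query) => users.filter((u) => matches(u, query));

console.log(find({ age: 33 }).map((u) => u.name));
console.log(find({ age: { $gt: 33 } }).map((u) => u.name));
console.log(find({ name: /^Joe/ }).map((u) => u.name));
console.log(find({ $or: [{ a: 1 }, { b: 2 }] }).map((u) => u.name));
```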