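Introduction to LVS:

An LVS cluster can be configured in one of three modes: DR, TUN, or NAT. It can load-balance WWW, FTP, mail, and other services. This article builds a load-balanced WWW service as a worked example of configuring an LVS cluster in DR mode.

Director Server: the core server of LVS. It acts much like a router: it holds the routing table that implements the LVS function and uses it to distribute client requests to the application servers (Real Servers) in the server pool. It also monitors the Real Servers, removing one from the LVS routing table when it becomes unavailable and adding it back once it recovers.

Real Server: one or more web, mail, FTP, DNS, or video servers. The Real Servers are connected to one another over a LAN or a WAN. In practice, the Director can also double as a Real Server.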

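The three LVS load-balancing modes:

NAT: the Director rewrites the destination address and port of the request to those of the selected Real Server and forwards it there. When the Real Server returns data to the client, the reply must pass through the Director again, which rewrites the source address and port back to the virtual IP and port before sending it on to the client. This completes the load-balancing cycle.

Drawback: the Director carries a heavy load, because traffic in both directions passes through it.

TUN: IP tunneling. The Director forwards the request to a Real Server through an IP tunnel, and the Real Server replies to the client directly, bypassing the Director. The Director and the Real Servers may sit on different networks. Because the Director handles only inbound request packets, throughput improves.

Drawback: IP tunneling adds encapsulation overhead.

DR: the virtual server is implemented with direct routing. The Director rewrites the destination MAC address of the request frame and sends it to a Real Server, which replies to the client directly, so there is no tunneling overhead. Of the three modes, this one generally performs best.

Drawback: the Director and the Real Servers must be on the same physical network segment.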
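To make the difference between NAT and DR forwarding concrete, here is a minimal Python sketch of which header fields each mode rewrites on an incoming request. The `Packet` class, its field names, the client address, and the MAC addresses are illustrative assumptions, not LVS data structures.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class Packet:
    """Toy model of a request; only the fields relevant to LVS forwarding."""
    src_ip: str
    dst_ip: str
    dst_port: int
    dst_mac: str


def forward_nat(pkt, rs_ip, rs_port):
    # NAT mode: the destination IP and port are rewritten to the Real Server's.
    return replace(pkt, dst_ip=rs_ip, dst_port=rs_port)


def forward_dr(pkt, rs_mac):
    # DR mode: only the destination MAC changes; the IP header still carries the VIP.
    return replace(pkt, dst_mac=rs_mac)


# Hypothetical client request addressed to the VIP.
request = Packet("203.0.113.7", "10.2.16.252", 80, "aa:aa:aa:aa:aa:01")

nat_pkt = forward_nat(request, "10.2.16.253", 80)
dr_pkt = forward_dr(request, "00:0c:29:a2:c4:9f")

print(nat_pkt.dst_ip)                 # Real Server address
print(dr_pkt.dst_ip, dr_pkt.dst_mac)  # VIP unchanged, new MAC
```

Because the DR packet still carries the VIP as its destination address, the Real Server must own the VIP locally to accept it.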

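LVS scheduling algorithms:

LVS selects a Real Server for each request according to the scheduling algorithm in use, and some algorithms take the current load of the Real Servers into account. IPVS implements eight scheduling algorithms; four of them are described here:

rr (round-robin scheduling):

Requests are distributed to the Real Servers in turn, without regard to load.

wrr (weighted round-robin scheduling):

Each Real Server is given a weight, and servers with higher weights receive proportionally more requests.

lc (least-connection scheduling):

New requests go to the Real Server with the fewest established connections.

wlc (weighted least-connection scheduling):

Each Real Server has a weight, and new connections are assigned so that the number of established connections on each server stays, as far as possible, proportional to its weight.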
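The four algorithms can be sketched in a few lines of Python. This is a simplified illustration, not the IPVS kernel code: the `Server` class is hypothetical, the weighted round robin simply repeats each server according to its weight (the kernel interleaves more evenly), and the connection counts are set by hand.

```python
from itertools import cycle


class Server:
    def __init__(self, name, weight=1):
        self.name = name
        self.weight = weight  # static weight set by the administrator
        self.conns = 0        # currently established connections


def rr(servers):
    """Round robin: hand out the servers in turn, ignoring load."""
    return cycle(servers)


def wrr(servers):
    """Weighted round robin (naive): a server with weight w appears w times per cycle."""
    return cycle([s for s in servers for _ in range(s.weight)])


def lc(servers):
    """Least connection: pick the server with the fewest connections."""
    return min(servers, key=lambda s: s.conns)


def wlc(servers):
    """Weighted least connection: pick the lowest connections-to-weight ratio."""
    return min((s for s in servers if s.weight > 0),
               key=lambda s: s.conns / s.weight)


servers = [Server("10.2.16.253", weight=1), Server("10.2.16.254", weight=2)]

picker = rr(servers)
rr_order = [next(picker).name for _ in range(4)]

weighted = wrr(servers)
wrr_order = [next(weighted).name for _ in range(6)]

servers[0].conns, servers[1].conns = 3, 4
lc_choice = lc(servers).name    # 3 < 4, so 10.2.16.253
wlc_choice = wlc(servers).name  # 3/1 > 4/2, so 10.2.16.254

print(rr_order, wrr_order, lc_choice, wlc_choice)
```

With equal weights, as in the configuration used in this article, wrr behaves like rr and wlc behaves like lc.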

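Environment:

This example uses three hosts: one Director Server (the scheduler) and two web Real Servers.

Director real IP: 10.2.16.250

VIP: 10.2.16.252

Real Server 1 real IP: 10.2.16.253

Real Server 2 real IP: 10.2.16.254

Note: this example uses LVS in DR mode with rr (round-robin) scheduling.

Installing and configuring LVS with keepalived

1. Install keepalived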

[root@proxy ~]# tar -zxvf keepalived-1.2.13.tar.gz -C ./

[root@proxy ~]# cd keepalived-1.2.13

[root@proxy keepalived-1.2.13]# ./configure --sysconfdir=/etc --with-kernel-dir=/usr/src/kernels/2.6.32-358.el6.x86_64/

[root@proxy keepalived-1.2.13]# make && make install

[root@proxy keepalived-1.2.13]# ln /usr/local/sbin/keepalived /sbin/

2. Install LVS

[root@proxy ~]# yum -y install ipvsadm*

Enable IP forwarding:

[root@proxy ~]# vim /etc/sysctl.conf

net.ipv4.ip_forward = 1

[root@proxy ~]# sysctl -p

3. Configure keepalived and LVS on the Director

[root@proxy ~]# cat /etc/keepalived/keepalived.conf

! Configuration File for keepalived

global_defs {

notification_email {

}

notification_email_from [email protected]

smtp_server 127.0.0.1

smtp_connect_timeout 30

router_id LVS_DEVEL

}

vrrp_instance VI_1 {

state MASTER

interface eth0 # physical network interface of the LVS Director

virtual_router_id 51

priority 100

advert_int 1

authentication {

auth_type PASS

auth_pass 1111

}

virtual_ipaddress { # the LVS VIP

10.2.16.252

}

}

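virtual_server 10.2.16.252 80 { # VIP and port on which LVS serves clients

delay_loop 6 # health-check interval, in seconds

lb_algo rr # scheduling algorithm; rr is round robin

lb_kind DR # forwarding mode: NAT, TUN, or DR

nat_mask 255.255.255.0

# persistence_timeout 50 # session persistence time in seconds; useful for session-dependent dynamic pages

protocol TCP # protocol to forward

real_server 10.2.16.253 80 { # real IP and port of the Real Server

weight 1 # weight; a higher value receives a larger share of requests

TCP_CHECK { # health check for this Real Server

connect_timeout 3 # time out after 3 seconds without a response

nb_get_retry 3 # number of retries

delay_before_retry 3 # delay between retries, in seconds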

}

}

real_server 10.2.16.254 80 { # second service node

weight 1

TCP_CHECK {

connect_timeout 3

nb_get_retry 3

delay_before_retry 3

}

}

}
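The idea behind the TCP_CHECK blocks can be sketched in Python as follows: try to open a TCP connection, retry a few times, and keep only the servers that answer in the active pool. The parameter names loosely mirror `connect_timeout`, `nb_get_retry`, and `delay_before_retry` from the configuration above; how keepalived counts retries internally is not reproduced here, and `refresh_pool` is a hypothetical helper.

```python
import socket
import time


def tcp_check(host, port, connect_timeout=3.0, retries=3, delay_before_retry=3.0):
    """Return True if a TCP connection succeeds within the allowed attempts."""
    for attempt in range(retries + 1):
        try:
            with socket.create_connection((host, port), timeout=connect_timeout):
                return True
        except OSError:
            # Refused, unreachable, or timed out: wait, then try again.
            if attempt < retries:
                time.sleep(delay_before_retry)
    return False


def refresh_pool(pool, candidates, check=tcp_check):
    """Drop servers that fail the check and re-add servers that pass it."""
    for server in candidates:
        if check(*server):
            pool.add(server)
        else:
            pool.discard(server)
    return pool


candidates = [("10.2.16.253", 80), ("10.2.16.254", 80)]

# Simulated check so the example runs without the lab network:
# pretend that only 10.2.16.253 is answering.
pool = refresh_pool(set(candidates), candidates,
                    check=lambda host, port: host == "10.2.16.253")
print(pool)
```

Passing the check function as a parameter keeps the pool logic testable; in a real run the default `tcp_check` would be used.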

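4. Configure the Real Servers

Because DR mode is used, each Real Server replies to the client directly with the LVS VIP as the source address, so the VIP must be brought up on the Real Server's lo interface for it to accept and answer these requests.

1. A script is used here to set up the VIP: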

[root@web-1 ~]# cat /etc/init.d/lvsrs

#!/bin/bash

#description : start Real Server

VIP=10.2.16.252

. /etc/rc.d/init.d/functions

case "$1" in

start)

echo " Start LVS of Real Server "

/sbin/ifconfig lo:0 $VIP broadcast $VIP netmask 255.255.255.255 up

/sbin/route add -host $VIP dev lo:0

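echo "1" >/proc/sys/net/ipv4/conf/lo/arp_ignore # These four settings stop the Real Server from answering or announcing ARP for the VIP, so that only the Director responds to ARP requests for it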

echo "2" >/proc/sys/net/ipv4/conf/lo/arp_announce

echo "1" >/proc/sys/net/ipv4/conf/all/arp_ignore

echo "2" >/proc/sys/net/ipv4/conf/all/arp_announce

;;

stop)

/sbin/ifconfig lo:0 down

echo "Stop LVS of Real Server"

echo "0" >/proc/sys/net/ipv4/conf/lo/arp_ignore

echo "0" >/proc/sys/net/ipv4/conf/lo/arp_announce

echo "0" >/proc/sys/net/ipv4/conf/all/arp_ignore

echo "0" >/proc/sys/net/ipv4/conf/all/arp_announce

;;

*)

echo "Usage: $0 {start|stop}"

exit 1

esac
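After running the script, it can be useful to confirm that the four ARP settings actually took effect. The Python sketch below compares the values under `/proc/sys/net/ipv4/conf` with the ones the script writes; `wrong_arp_settings` and `read_proc` are hypothetical helper names, and the demonstration uses simulated values so that it runs anywhere.

```python
# Values the start branch of the script writes.
EXPECTED = {
    "lo/arp_ignore": "1",
    "lo/arp_announce": "2",
    "all/arp_ignore": "1",
    "all/arp_announce": "2",
}


def read_proc(key, base="/proc/sys/net/ipv4/conf"):
    """Read one setting from the live system (Linux only)."""
    with open(f"{base}/{key}") as fh:
        return fh.read().strip()


def wrong_arp_settings(read=read_proc):
    """Return {key: (actual, expected)} for every setting that differs."""
    wrong = {}
    for key, expected in EXPECTED.items():
        actual = read(key)
        if actual != expected:
            wrong[key] = (actual, expected)
    return wrong


# Simulated state: one setting was left at its default of 0.
simulated = dict(EXPECTED, **{"lo/arp_announce": "0"})
problems = wrong_arp_settings(read=simulated.get)
print(problems)
```

On a Real Server, calling `wrong_arp_settings()` with no arguments reads the live values; an empty result means all four settings match.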

2. Run the script:

[root@web-1 ~]# service lvsrs start

Start LVS of Real Server

3. Check the IP address on the lo:0 virtual interface:

[root@web-1 ~]# ifconfig

eth0 Link encap:Ethernet HWaddr 00:0C:29:A2:C4:9F

inet addr:10.2.16.253 Bcast:10.2.16.255 Mask:255.255.255.0

inet6 addr: fe80::20c:29ff:fea2:c49f/64 Scope:Link

UP BROADCAST RUNNING MULTICAST MTU:1500 Metric:1

RX packets:365834 errors:0 dropped:0 overruns:0 frame:0

TX packets:43393 errors:0 dropped:0 overruns:0 carrier:0

collisions:0 txqueuelen:1000

RX bytes:33998241 (32.4 MiB) TX bytes:4007256 (3.8 MiB)

lo Link encap:Local Loopback

inet addr:127.0.0.1 Mask:255.0.0.0

inet6 addr: ::1/128 Scope:Host

UP LOOPBACK RUNNING MTU:16436 Metric:1

RX packets:17 errors:0 dropped:0 overruns:0 frame:0

TX packets:17 errors:0 dropped:0 overruns:0 carrier:0

collisions:0 txqueuelen:0

RX bytes:1482 (1.4 KiB) TX bytes:1482 (1.4 KiB)

lo:0 Link encap:Local Loopback

inet addr:10.2.16.252 Mask:255.255.255.255

UP LOOPBACK RUNNING MTU:16436 Metric:1

4. Make sure nginx is running and listening on port 80

[root@web-1 ~]# netstat -anptul

Active Internet connections (servers and established)

Proto Recv-Q Send-Q Local Address Foreign Address State PID/Program name

tcp 0 0 0.0.0.0:80 0.0.0.0:* LISTEN 1024/nginx

5. Repeat the same four steps on Real Server 2.

6. Start keepalived on the Director:

[root@proxy ~]# service keepalived start

Starting keepalived: [ OK ]

Check that the keepalived startup log looks normal:

[root@proxy ~]# tail -f /var/log/messages

May 24 10:06:57 proxy Keepalived[2767]: Starting Keepalived v1.2.13 (05/24,2014)

May 24 10:06:57 proxy Keepalived[2768]: Starting Healthcheck child process, pid=2770

May 24 10:06:57 proxy Keepalived[2768]: Starting VRRP child process, pid=2771

May 24 10:06:57 proxy Keepalived_healthcheckers[2770]: Netlink reflector reports IP 10.2.16.250 added

May 24 10:06:57 proxy Keepalived_vrrp[2771]: Netlink reflector reports IP 10.2.16.250 added

May 24 10:06:57 proxy Keepalived_healthcheckers[2770]: Netlink reflector reports IP fe80::20c:29ff:fee6:ce1a added

May 24 10:06:57 proxy Keepalived_healthcheckers[2770]: Registering Kernel netlink reflector

May 24 10:06:57 proxy Keepalived_healthcheckers[2770]: Registering Kernel netlink command channel

May 24 10:06:57 proxy Keepalived_vrrp[2771]: Netlink reflector reports IP fe80::20c:29ff:fee6:ce1a added

May 24 10:06:57 proxy Keepalived_vrrp[2771]: Registering Kernel netlink reflector

May 24 10:06:57 proxy Keepalived_vrrp[2771]: Registering Kernel netlink command channel

May 24 10:06:57 proxy Keepalived_vrrp[2771]: Registering gratuitous ARP shared channel

May 24 10:06:57 proxy Keepalived_vrrp[2771]: Opening file '/etc/keepalived/keepalived.conf'.

May 24 10:06:57 proxy Keepalived_vrrp[2771]: Configuration is using : 63303 Bytes

May 24 10:06:57 proxy Keepalived_vrrp[2771]: Using LinkWatch kernel netlink reflector...

May 24 10:06:57 proxy Keepalived_healthcheckers[2770]: Opening file '/etc/keepalived/keepalived.conf'.

May 24 10:06:57 proxy Keepalived_healthcheckers[2770]: Configuration is using : 14558 Bytes

May 24 10:06:57 proxy Keepalived_vrrp[2771]: VRRP sockpool: [ifindex(2), proto(112), unicast(0), fd(10,11)]

May 24 10:06:57 proxy Keepalived_healthcheckers[2770]: Using LinkWatch kernel netlink reflector...

May 24 10:06:57 proxy Keepalived_healthcheckers[2770]: Activating healthchecker for service [10.2.16.253]:80

May 24 10:06:57 proxy Keepalived_healthcheckers[2770]: Activating healthchecker for service [10.2.16.254]:80

May 24 10:06:58 proxy Keepalived_vrrp[2771]: VRRP_Instance(VI_1) Transition to MASTER STATE

May 24 10:06:59 proxy Keepalived_vrrp[2771]: VRRP_Instance(VI_1) Entering MASTER STATE

May 24 10:06:59 proxy Keepalived_vrrp[2771]: VRRP_Instance(VI_1) setting protocol VIPs.

May 24 10:06:59 proxy Keepalived_vrrp[2771]: VRRP_Instance(VI_1) Sending gratuitous ARPs on eth0 for 10.2.16.252

May 24 10:06:59 proxy Keepalived_healthcheckers[2770]: Netlink reflector reports IP 10.2.16.252 added

May 24 10:07:04 proxy Keepalived_vrrp[2771]: VRRP_Instance(VI_1) Sending gratuitous ARPs on eth0 for 10.2.16.252

Everything looks normal.

7. View the LVS routing table:

[root@proxy ~]# ipvsadm -ln

IP Virtual Server version 1.2.1 (size=4096)

Prot LocalAddress:Port Scheduler Flags

-> RemoteAddress:Port Forward Weight ActiveConn InActConn

TCP 10.2.16.252:80 rr

-> 10.2.16.253:80 Route 1 0 0

-> 10.2.16.254:80 Route 1 0 0

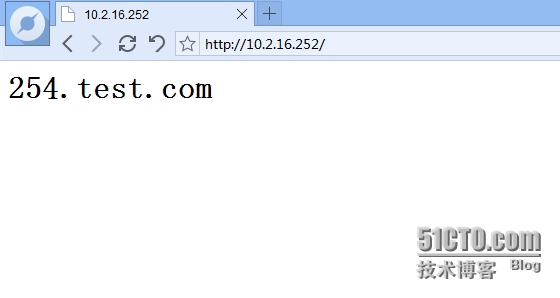
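For scripted checks, the `ipvsadm -ln` listing can be turned into a data structure. The parser below is a rough sketch that assumes the column layout shown above; `parse_ipvsadm` is a hypothetical helper, not part of ipvsadm.

```python
SAMPLE = """\
IP Virtual Server version 1.2.1 (size=4096)
Prot LocalAddress:Port Scheduler Flags
  -> RemoteAddress:Port Forward Weight ActiveConn InActConn
TCP  10.2.16.252:80 rr
  -> 10.2.16.253:80 Route 1 0 0
  -> 10.2.16.254:80 Route 1 0 0
"""


def parse_ipvsadm(text):
    """Parse `ipvsadm -ln` output into a list of virtual services."""
    services = []
    for line in text.splitlines():
        parts = line.split()
        if not parts:
            continue
        if parts[0] in ("TCP", "UDP"):
            # A virtual service line: protocol, VIP:port, scheduler.
            services.append({
                "proto": parts[0],
                "vip": parts[1],
                "scheduler": parts[2],
                "real_servers": [],
            })
        elif parts[0] == "->" and services and parts[1] != "RemoteAddress:Port":
            # A Real Server line belonging to the most recent service.
            services[-1]["real_servers"].append({
                "address": parts[1],
                "forward": parts[2],
                "weight": int(parts[3]),
                "active": int(parts[4]),
                "inactive": int(parts[5]),
            })
    return services


services = parse_ipvsadm(SAMPLE)
for svc in services:
    print(svc["vip"], svc["scheduler"],
          [rs["address"] for rs in svc["real_servers"]])
```

A forward method of `Route` in this listing corresponds to DR mode.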

8. Test: open a browser and go to http://10.2.16.252/

If pages from both Real Servers are served through the VIP, the setup works.
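The same test can be scripted. The Python sketch below requests the VIP several times and counts the distinct responses. It assumes that each Real Server serves a page whose body identifies it, which is not stated in this setup; `sample_backends` and `fetch_body` are hypothetical names, and the demonstration replaces the HTTP call with a simulated one.

```python
from collections import Counter
from itertools import cycle
from urllib.request import urlopen


def fetch_body(url, timeout=3):
    """Fetch a URL over a fresh connection and return its body as text."""
    with urlopen(url, timeout=timeout) as resp:
        return resp.read().decode("utf-8", errors="replace").strip()


def sample_backends(url, n=10, fetch=fetch_body):
    """Request `url` n times and count how often each distinct body is seen."""
    return Counter(fetch(url) for _ in range(n))


# Simulated fetch that alternates like rr, so the example runs offline.
fake_pages = cycle(["web-1", "web-2"])
counts = sample_backends("http://10.2.16.252/", n=10,
                         fetch=lambda url: next(fake_pages))
print(counts)
```

Against the real VIP, calling `sample_backends("http://10.2.16.252/")` with the default `fetch` should show both servers with roughly equal counts under rr scheduling.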

9. Test the failure of one Real Server

(1) Kill the nginx process on 10.2.16.254, then start it again.

(2) Check the keepalived log:

[root@proxy ~]# tail -f /var/log/messages

May 24 10:10:55 proxy Keepalived_healthcheckers[2770]: TCP connection to [10.2.16.254]:80 failed !!!

May 24 10:10:55 proxy Keepalived_healthcheckers[2770]: Removing service [10.2.16.254]:80 from VS [10.2.16.252]:80

May 24 10:10:55 proxy Keepalived_healthcheckers[2770]: Remote SMTP server [127.0.0.1]:25 connected.

May 24 10:10:55 proxy Keepalived_healthcheckers[2770]: SMTP alert successfully sent.

May 24 10:11:43 proxy Keepalived_healthcheckers[2770]: TCP connection to [10.2.16.254]:80 success.

May 24 10:11:43 proxy Keepalived_healthcheckers[2770]: Adding service [10.2.16.254]:80 to VS [10.2.16.252]:80

May 24 10:11:43 proxy Keepalived_healthcheckers[2770]: Remote SMTP server [127.0.0.1]:25 connected.

May 24 10:11:43 proxy Keepalived_healthcheckers[2770]: SMTP alert successfully sent.

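As the log shows, keepalived removed the failed Real Server as soon as the check failed and added it back once nginx was running again.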
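To measure how long a Real Server stayed out of the pool, the "Removing service" and "Adding service" log lines can be paired up. The Python sketch below does this for the excerpt above; `outage_durations` is a hypothetical helper, and because syslog timestamps carry no year, an arbitrary one is assumed for parsing. The interval mostly reflects how long nginx was stopped, not keepalived's detection latency.

```python
import re
from datetime import datetime

EVENT = re.compile(
    r"^(?P<ts>\w{3}\s+\d+ \d{2}:\d{2}:\d{2}) .*?"
    r"(?P<action>Removing|Adding) service \[(?P<ip>[\d.]+)\]:(?P<port>\d+)"
)

LOG = """\
May 24 10:10:55 proxy Keepalived_healthcheckers[2770]: TCP connection to [10.2.16.254]:80 failed !!!
May 24 10:10:55 proxy Keepalived_healthcheckers[2770]: Removing service [10.2.16.254]:80 from VS [10.2.16.252]:80
May 24 10:11:43 proxy Keepalived_healthcheckers[2770]: TCP connection to [10.2.16.254]:80 success.
May 24 10:11:43 proxy Keepalived_healthcheckers[2770]: Adding service [10.2.16.254]:80 to VS [10.2.16.252]:80
"""


def outage_durations(lines, assumed_year=2000):
    """Return (server, seconds) for each Removing -> Adding pair."""
    removed = {}
    outages = []
    for line in lines:
        m = EVENT.search(line)
        if not m:
            continue
        # Syslog omits the year, so prepend an assumed one before parsing.
        ts = datetime.strptime(f"{assumed_year} {m['ts']}", "%Y %b %d %H:%M:%S")
        server = f"{m['ip']}:{m['port']}"
        if m["action"] == "Removing":
            removed[server] = ts
        elif server in removed:
            outages.append((server, (ts - removed.pop(server)).total_seconds()))
    return outages


outages = outage_durations(LOG.splitlines())
print(outages)  # [('10.2.16.254:80', 48.0)]
```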

That completes the LVS configuration.