1.1 How Prometheus Works

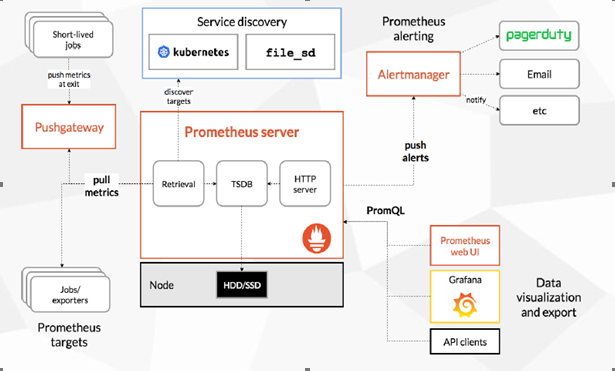

1.1.1 Prometheus Architecture

Data collection module: the Prometheus targets on the far left are the objects being monitored, and Retrieval collects their data. Both pull and push collection are supported.

Pull mode: the server triggers collection. Any target that exposes an HTTP endpoint can be scraped. This is the most common mode, and nearly everything described below uses it.

Push mode: each target pushes its metrics to a Pushgateway, and the Prometheus server then pulls them from there.

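Storage module: monitoring data is persisted in the TSDB, a database designed for time-series data and indexed by time.

Query and processing module: besides storage, the TSDB provides basic querying and processing through PromQL. PromQL is the foundation of both the alerting system and the visualization dashboards.

Alerting module: Alertmanager handles alerts. It can dynamically group, silence, and inhibit alerts to reduce their number, and it supports many notification channels.

Visualization module: Grafana provides multi-dimensional dashboards.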

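1.1.2 How Does Monitoring Work?

Prometheus collects data by pull and push. Scrape jobs defined in the configuration file collect metrics from the Kubernetes components and even from open-source applications such as MySQL and Redis. The data is stored in the TSDB inside the Prometheus server. When an alerting rule's condition has held for the configured duration, the alert is sent to Alertmanager for notification. Grafana queries the data with PromQL to render charts.

PS: node_exporter resources

Documentation: https://prometheus.io/docs/guides/node-exporter/

GitHub: https://github.com/prometheus/node_exporter

Exporter list: https://prometheus.io/docs/instrumenting/exporters/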

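1.1.3 Prometheus Series Identifiers

Prometheus stores all data as time series. Samples with the same metric name and the same labels belong to the same series.

Each time series is uniquely identified by its metric name and a set of key-value pairs (also called labels).

Time series format:

<metric name>{<label name>=<label value>, ...}

Example: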

container_cpu_user_seconds_total{beta_kubernetes_io_arch="amd64",beta_kubernetes_io_os="linux",container_name="POD",id="/kubepods/besteffort/pod011eaa38-af5c-11e8-a65f-801844f11504/b659e04fa88e0f3080612d6479dec32d41afe632efd4f43f80714245be899d14",image="k8s.gcr.io/pause-amd64:3.1",instance="h-ldocker-02",job="kubernetes-cadvisor",kubernetes_io_hostname="h-ldocker-02",name="k8s_POD_consul-client-0_plms-pro_011eaa38-af5c-11e8-a65f-801844f11504_0",namespace="plms-pro",pod_name="consul-client-0",rolehost="k8smaster"}
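A series is identified by its name plus its full label set. Label order therefore does not matter, while changing any single label value gives a different series. The Python sketch below parses the format above and builds an identity that ignores label order. It assumes simple `name{k="v",...}` input, and escape sequences in label values are kept as-is rather than decoded.

```python
import re

# Metric name, optionally followed by {label="value", ...}.
_SERIES_RE = re.compile(r'([a-zA-Z_:][a-zA-Z0-9_:]*)(?:\{(.*)\})?')
# One label pair; the value is double-quoted and may contain escaped characters.
_LABEL_RE = re.compile(r'\s*([a-zA-Z_][a-zA-Z0-9_]*)\s*=\s*"((?:[^"\\]|\\.)*)"\s*(?:,|$)')


def parse_series(text):
    """Split a series identifier into (metric name, {label: value})."""
    m = _SERIES_RE.fullmatch(text.strip())
    if m is None:
        raise ValueError(f"not a series identifier: {text!r}")
    name, body = m.group(1), m.group(2) or ""
    labels, pos = {}, 0
    while pos < len(body):
        lm = _LABEL_RE.match(body, pos)
        if lm is None:
            raise ValueError(f"malformed label list at offset {pos}: {body!r}")
        labels[lm.group(1)] = lm.group(2)  # escapes are not decoded in this sketch
        pos = lm.end()
    return name, labels


def series_key(name, labels):
    """Identity of a series: the name plus its label set, independent of label order."""
    return (name, frozenset(labels.items()))
```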

1.2 Deploying and Using Prometheus in Containers

Extract k8s-prometheus-grafana.tar.gz and deploy prometheus.svc.yml, rbac-setup.yaml, prometheus-rules.yaml, configmap.yaml, and prometheus.deploy.yaml.

① prometheus.svc: exposes Prometheus on nodePort 30003 for external access and for debugging PromQL queries.

[root@PH-K8S-M01 k8s-prometheus-grafana]# cat prometheus.svc.yml
---
kind: Service
apiVersion: v1
metadata:
  labels:
    app: prometheus
  name: prometheus
  namespace: kube-system
spec:
  type: NodePort
  ports:
  - port: 9090
    targetPort: 9090
    nodePort: 30003
  selector:
    app: prometheus

② rbac-setup: grants the prometheus service account access to Kubernetes resources.

[root@PH-K8S-M01 k8s-prometheus-grafana]# cat rbac-setup.yaml
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  name: prometheus
rules:
- apiGroups: [""]
  resources:
  - nodes
  - nodes/proxy
  - services
  - endpoints
  - pods
  verbs: ["get", "list", "watch"]
- apiGroups:
  - extensions
  - networking.k8s.io   ## newer Kubernetes versions serve Ingresses from this group
  resources:
  - ingresses
  verbs: ["get", "list", "watch"]
- nonResourceURLs: ["/metrics"]
  verbs: ["get"]
---
apiVersion: v1
kind: ServiceAccount
metadata:
  name: prometheus
  namespace: kube-system
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: prometheus
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: prometheus
subjects:
- kind: ServiceAccount
  name: prometheus
  namespace: kube-system

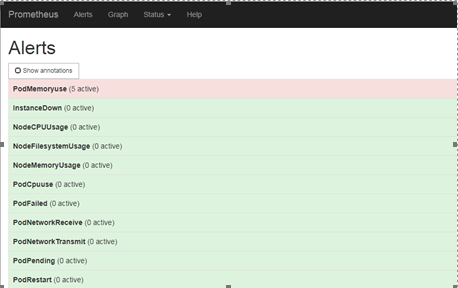

③prometheus-rules:設置prometheus的監控項。分爲general.rules,node.rules,分別監控k8s的pod以及node節點的數據。

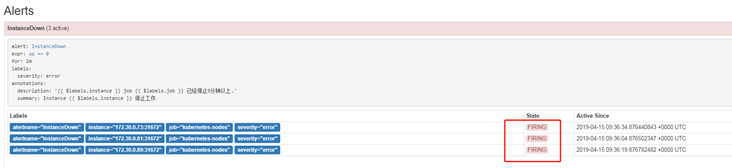

以下圖InstanceDown監控項爲例,監控的數據來自於”expr: up ==0“,“up“監控的是prometheus target中的組件健康狀態,如apiserver,當up==0時表示異常,當異常持續兩分鐘,即”for: 2m”,就會將異常數據傳遞給alertmanager進行分組報警處理。

[root@PH-K8S-M01 k8s-prometheus-grafana]# cat prometheus-rules.yaml
apiVersion: v1
kind: ConfigMap
metadata:
  name: prometheus-rules
  namespace: kube-system
data:
  general.rules: |
    groups:
    - name: general.rules
      rules:
      - alert: InstanceDown
        expr: up == 0
        for: 2m
        labels:
          severity: error
        annotations:
          summary: "Instance {{ $labels.instance }} is down"
          description: "{{ $labels.instance }} of job {{ $labels.job }} has been down for more than 2 minutes."
      - alert: PodCpuuse
        expr: sum(rate(container_cpu_usage_seconds_total{image!=""}[1m])) by (pod_name, namespace) / (sum(container_spec_cpu_quota{image!=""}/100000) by (pod_name, namespace)) * 100 > 80
        for: 5m
        labels:
          severity: warn
        annotations:
          summary: "Namespaces: {{ $labels.namespace }} | PodName: {{ $labels.pod_name }} CPU usage too high"
          description: "Namespaces: {{ $labels.namespace }} | PodName: {{ $labels.pod_name }} CPU usage above 80%. (current value: {{ $value }}%)"
      - alert: PodMemoryuse
        expr: sum(container_memory_rss{image!=""}) by(pod_name, namespace) / sum(container_spec_memory_limit_bytes{image!=""}) by(pod_name, namespace) * 100 != +inf > 80
        for: 5m
        labels:
          severity: warn
        annotations:
          summary: "Namespaces: {{ $labels.namespace }} | PodName: {{ $labels.pod_name }} memory usage too high"
          description: "Namespaces: {{ $labels.namespace }} | PodName: {{ $labels.pod_name }} memory usage above 80%. (current value: {{ $value }}%)"
      - alert: PodFailed
        expr: sum (kube_pod_status_phase{phase="Failed"}) by (pod,namespace) > 0
        for: 1m
        labels:
          severity: error
        annotations:
          summary: "Namespaces: {{ $labels.namespace }} | PodName: {{ $labels.pod }} pod status is Failed"
          description: "Namespaces: {{ $labels.namespace }} | PodName: {{ $labels.pod }} pod status is Failed. (current value: {{ $value }})"
      - alert: PodPending
        expr: sum (kube_pod_status_phase{phase="Pending"}) by (pod,namespace) > 0
        for: 1m
        labels:
          severity: error
        annotations:
          summary: "Namespaces: {{ $labels.namespace }} | PodName: {{ $labels.pod }} pod status is Pending"
          description: "Namespaces: {{ $labels.namespace }} | PodName: {{ $labels.pod }} pod status is Pending. (current value: {{ $value }})"
      - alert: PodNetworkReceive
        expr: sum (rate (container_network_receive_bytes_total{image!="",name=~"^k8s_.*"}[5m]) /1000) by (pod_name,namespace) > 20000
        for: 5m
        labels:
          severity: warn
        annotations:
          summary: "Namespaces: {{ $labels.namespace }} | PodName: {{ $labels.pod_name }} inbound network traffic too high"
          description: "Namespaces: {{ $labels.namespace }} | PodName: {{ $labels.pod_name }} inbound network traffic above 20MB/s. (current value: {{ $value }}KB/s)"
      - alert: PodNetworkTransmit
        expr: sum (rate (container_network_transmit_bytes_total{image!="",name=~"^k8s_.*"}[5m]) /1000) by (pod_name,namespace) > 20000
        for: 5m
        labels:
          severity: warn
        annotations:
          summary: "Namespaces: {{ $labels.namespace }} | PodName: {{ $labels.pod_name }} outbound network traffic too high"
          description: "Namespaces: {{ $labels.namespace }} | PodName: {{ $labels.pod_name }} outbound network traffic above 20MB/s. (current value: {{ $value }}KB/s)"
      - alert: PodRestart
        expr: sum (changes (kube_pod_container_status_restarts_total[1m])) by (pod,namespace) > 0
        for: 1m
        labels:
          severity: warn
        annotations:
          summary: "Namespaces: {{ $labels.namespace }} | PodName: {{ $labels.pod }} pod restarted"
          description: "Namespaces: {{ $labels.namespace }} | PodName: {{ $labels.pod }} restarted within the last minute. (current value: {{ $value }})"
  node.rules: |
    groups:
    - name: node.rules
      rules:
      - alert: NodeFilesystemUsage
        expr: 100 - (node_filesystem_free_bytes{fstype=~"ext4|xfs"} / node_filesystem_size_bytes{fstype=~"ext4|xfs"} * 100) > 80
        for: 5m
        labels:
          severity: warning
        annotations:
          summary: "Instance {{ $labels.instance }} : {{ $labels.mountpoint }} partition usage too high"
          description: "{{ $labels.instance }}: {{ $labels.mountpoint }} partition usage above 80% (current value: {{ $value }})"
      - alert: NodeMemoryUsage
        expr: 100 - (node_memory_MemFree_bytes+node_memory_Cached_bytes+node_memory_Buffers_bytes) / node_memory_MemTotal_bytes * 100 > 80
        for: 5m
        labels:
          severity: warning
        annotations:
          summary: "Instance {{ $labels.instance }} memory usage too high"
          description: "{{ $labels.instance }} memory usage above 80% (current value: {{ $value }})"
      - alert: NodeCPUUsage
        expr: 100 - (avg(irate(node_cpu_seconds_total{mode="idle"}[5m])) by (instance) * 100) > 60
        for: 5m
        labels:
          severity: warning
        annotations:
          summary: "Instance {{ $labels.instance }} CPU usage too high"
          description: "{{ $labels.instance }} CPU usage above 60% (current value: {{ $value }})"

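④ configmap.yaml: the Prometheus configuration file, which defines which time-series data to scrape:

- cAdvisor provides basic container data such as CPU, memory, and network I/O.
- The kubernetes-nodes job collects host metrics for each node.
- The kubernetes-service-endpoints job collects the kube-state-metrics data.

Scrape targets are discovered by role, such as node, service, pod, endpoints, and ingress.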

[root@PH-K8S-M01 k8s-prometheus-grafana]# cat configmap.yaml
apiVersion: v1
kind: ConfigMap
metadata:
  name: prometheus-config
  namespace: kube-system
data:
  prometheus.yml: |
    rule_files:   ## Explicitly set the rules file path; the rules must also be mounted in the Deployment
    - /etc/config/rules/*.rules
    global:
      scrape_interval: 15s   ## How often Prometheus scrapes its targets
      evaluation_interval: 15s
    scrape_configs:
    - job_name: 'kubernetes-apiservers'
      kubernetes_sd_configs:
      - role: endpoints
      scheme: https
      tls_config:
        ca_file: /var/run/secrets/kubernetes.io/serviceaccount/ca.crt
      bearer_token_file: /var/run/secrets/kubernetes.io/serviceaccount/token
      relabel_configs:
      - source_labels: [__meta_kubernetes_namespace, __meta_kubernetes_service_name, __meta_kubernetes_endpoint_port_name]
        action: keep
        regex: default;kubernetes;https
    - job_name: kubernetes-nodes   ## node-exporter collects node data
      scrape_interval: 30s
      static_configs:
      - targets:
        - '*'   ## node-exporter addresses (host:9100), redacted in the original
    - job_name: 'kubernetes-nodes-kubelet'
      kubernetes_sd_configs:
      - role: node
      scheme: https
      tls_config:
        ca_file: /var/run/secrets/kubernetes.io/serviceaccount/ca.crt
      bearer_token_file: /var/run/secrets/kubernetes.io/serviceaccount/token
      relabel_configs:
      - action: labelmap
        regex: __meta_kubernetes_node_label_(.+)
      - target_label: __address__
        replacement: kubernetes.default.svc:443
      - source_labels: [__meta_kubernetes_node_name]
        regex: (.+)
        target_label: __metrics_path__
        replacement: /api/v1/nodes/${1}/proxy/metrics
    - job_name: 'kubernetes-nodes-cadvisor'
      kubernetes_sd_configs:
      - role: node
      scheme: https
      tls_config:
        ca_file: /var/run/secrets/kubernetes.io/serviceaccount/ca.crt
      bearer_token_file: /var/run/secrets/kubernetes.io/serviceaccount/token
      relabel_configs:
      - action: labelmap
        regex: __meta_kubernetes_node_label_(.+)
      - target_label: __address__
        replacement: kubernetes.default.svc:443
      - source_labels: [__meta_kubernetes_node_name]
        regex: (.+)
        target_label: __metrics_path__
        replacement: /api/v1/nodes/${1}/proxy/metrics/cadvisor
    - job_name: 'kubernetes-service-endpoints'
      kubernetes_sd_configs:
      - role: endpoints
      relabel_configs:
      - source_labels: [__meta_kubernetes_service_annotation_prometheus_io_scrape]
        action: keep
        regex: true
      - source_labels: [__meta_kubernetes_service_annotation_prometheus_io_scheme]
        action: replace
        target_label: __scheme__
        regex: (https?)
      - source_labels: [__meta_kubernetes_service_annotation_prometheus_io_path]
        action: replace
        target_label: __metrics_path__
        regex: (.+)
      - source_labels: [__address__, __meta_kubernetes_service_annotation_prometheus_io_port]
        action: replace
        target_label: __address__
        regex: ([^:]+)(?::\d+)?;(\d+)
        replacement: $1:$2
      - action: labelmap
        regex: __meta_kubernetes_service_label_(.+)
      - source_labels: [__meta_kubernetes_namespace]
        action: replace
        target_label: kubernetes_namespace
      - source_labels: [__meta_kubernetes_service_name]
        action: replace
        target_label: kubernetes_name
    - job_name: 'kubernetes-services'
      kubernetes_sd_configs:
      - role: service
      metrics_path: /probe
      params:
        module: [http_2xx]
      relabel_configs:
      - source_labels: [__meta_kubernetes_service_annotation_prometheus_io_probe]
        action: keep
        regex: true
      - source_labels: [__address__]
        target_label: __param_target
      - target_label: __address__
        replacement: blackbox-exporter.example.com:9115
      - source_labels: [__param_target]
        target_label: instance
      - action: labelmap
        regex: __meta_kubernetes_service_label_(.+)
      - source_labels: [__meta_kubernetes_namespace]
        target_label: kubernetes_namespace
      - source_labels: [__meta_kubernetes_service_name]
        target_label: kubernetes_name
    - job_name: 'kubernetes-ingresses'
      kubernetes_sd_configs:
      - role: ingress
      metrics_path: /probe   ## probe through blackbox-exporter, as in the kubernetes-services job
      params:
        module: [http_2xx]
      relabel_configs:
      - source_labels: [__meta_kubernetes_ingress_annotation_prometheus_io_probe]
        action: keep
        regex: true
      - source_labels: [__meta_kubernetes_ingress_scheme,__address__,__meta_kubernetes_ingress_path]
        regex: (.+);(.+);(.+)
        replacement: ${1}://${2}${3}
        target_label: __param_target
      - target_label: __address__
        replacement: blackbox-exporter.example.com:9115
      - source_labels: [__param_target]
        target_label: instance
      - action: labelmap
        regex: __meta_kubernetes_ingress_label_(.+)
      - source_labels: [__meta_kubernetes_namespace]
        target_label: kubernetes_namespace
      - source_labels: [__meta_kubernetes_ingress_name]
        target_label: kubernetes_name
    - job_name: 'kubernetes-pods'
      kubernetes_sd_configs:
      - role: pod
      relabel_configs:
      - source_labels: [__meta_kubernetes_pod_annotation_prometheus_io_scrape]
        action: keep
        regex: true
      - source_labels: [__meta_kubernetes_pod_annotation_prometheus_io_path]
        action: replace
        target_label: __metrics_path__
        regex: (.+)
      - source_labels: [__address__, __meta_kubernetes_pod_annotation_prometheus_io_port]
        action: replace
        regex: ([^:]+)(?::\d+)?;(\d+)
        replacement: $1:$2
        target_label: __address__
      - action: labelmap
        regex: __meta_kubernetes_pod_label_(.+)
      - source_labels: [__meta_kubernetes_namespace]
        action: replace
        target_label: kubernetes_namespace
      - source_labels: [__meta_kubernetes_pod_name]
        action: replace
        target_label: kubernetes_pod_name
    alerting:   ## Alertmanager integration
      alertmanagers:
      - static_configs:
        - targets: ["alertmanager:80"]
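The `kubernetes-service-endpoints` and `kubernetes-pods` jobs above rewrite `__address__` so that Prometheus scrapes the port named in the `prometheus.io/port` annotation. The Python sketch below imitates a single `action: replace` step:

1. Join the source labels with `;`.
2. Match the whole joined string against the regex.
3. Expand `$1:$2`.

This is a simplified model of the relabeling behaviour, not Prometheus' own implementation. The pod IP and port values are made up.

```python
import re


def relabel_replace(labels, source_labels, regex, replacement, target_label, separator=";"):
    """Apply one relabel rule with action: replace (simplified sketch)."""
    # Missing source labels count as empty strings before joining.
    value = separator.join(labels.get(name, "") for name in source_labels)
    # Prometheus anchors relabel regexes, so the whole joined value must match.
    match = re.fullmatch(regex, value)
    if match is None:
        return dict(labels)  # no match: the target label is left untouched
    # Translate $1 / ${1} references into Python's \g<1> syntax.
    template = re.sub(r"\$\{?(\d+)\}?", r"\\g<\1>", replacement)
    result = dict(labels)
    result[target_label] = match.expand(template)
    return result
```

A Service without the port annotation produces a joined value ending in `;`, which the regex does not match, so its discovered address is kept unchanged.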

⑤ Deploy the Prometheus Deployment

[root@PH-K8S-M01 k8s-prometheus-grafana]# cat prometheus.deploy.yml
---
apiVersion: apps/v1
kind: Deployment
metadata:
  labels:
    name: prometheus-deployment
  name: prometheus
  namespace: kube-system
spec:
  replicas: 1
  selector:
    matchLabels:
      app: prometheus
  template:
    metadata:
      labels:
        app: prometheus
    spec:
      containers:
      - image: prom/prometheus:v2.0.0
        name: prometheus
        command:
        - "/bin/prometheus"
        args:
        - "--config.file=/etc/prometheus/prometheus.yml"
        - "--storage.tsdb.path=/prometheus"
        - "--storage.tsdb.retention=24h"
        ports:
        - containerPort: 9090
          protocol: TCP
        volumeMounts:
        - mountPath: "/prometheus"
          name: data
        - mountPath: "/etc/prometheus"
          name: config-volume
        - name: prometheus-rules   ## Mount the rule files; the rules path must also be set in the ConfigMap
          mountPath: /etc/config/rules
        - name: localtime
          mountPath: /etc/localtime
        resources:
          requests:
            cpu: 100m
            memory: 100Mi
          limits:
            cpu: 500m
            memory: 2500Mi
      serviceAccountName: prometheus
      volumes:
      - name: data
        hostPath:
          path: /var/lib/docker/prometheus
      - name: config-volume
        configMap:
          name: prometheus-config
      - name: prometheus-rules
        configMap:
          name: prometheus-rules
      - name: localtime
        hostPath:
          path: /etc/localtime

至此prometheus部署完成,訪問http://IP:30003

查看rules是否正常:

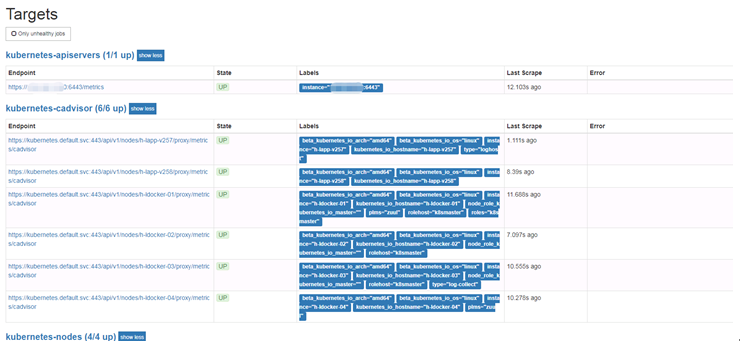

查看target是否正常:

1.3 部署node-exporter

node-exporter的部署在這裏採用二進制包的形式部署,因爲發現採用容器化部署node-exporter獲取到的數據不是很準確,所以在這裏採用二進制的方式,運行一下部署腳本即可,腳本如下,注意,需要在每個node節點執行一下腳本,啓動端口爲9100

#!/bin/bash
wget https://github.com/prometheus/node_exporter/releases/download/v0.17.0/node_exporter-0.17.0.linux-amd64.tar.gz
tar zxf node_exporter-0.17.0.linux-amd64.tar.gz
mv node_exporter-0.17.0.linux-amd64 /usr/local/node_exporter
cat <<EOF >/usr/lib/systemd/system/node_exporter.service
[Unit]
Description=https://prometheus.io

[Service]
Restart=on-failure
ExecStart=/usr/local/node_exporter/node_exporter --collector.systemd --collector.systemd.unit-whitelist=(docker|kubelet|kube-proxy|flanneld).service

[Install]
WantedBy=multi-user.target
EOF
systemctl daemon-reload
systemctl enable node_exporter
systemctl restart node_exporter

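1.4 Deploying kube-state-metrics

The state of the cluster's resource objects, such as Pods, DaemonSets, Deployments, Jobs, and CronJobs, needs to be monitored, because it reflects the state of the applications deployed with them. The metrics Prometheus has scraped so far come mainly from the apiserver and from the cAdvisor built into the kubelet. They do not include the state of these resource objects. Prometheus therefore needs an additional exporter to expose them, and kube-state-metrics, provided by the Kubernetes project, does exactly that.

Deploy kube-state-metrics-rbac.yaml to set up authorization: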

[root@PH-K8S-M01 k8s-prometheus-grafana]# cat kube-state-metrics-rbac.yaml
apiVersion: v1
kind: ServiceAccount
metadata:
  name: kube-state-metrics
  namespace: kube-system
  labels:
    kubernetes.io/cluster-service: "true"
    addonmanager.kubernetes.io/mode: Reconcile
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  name: kube-state-metrics
  labels:
    kubernetes.io/cluster-service: "true"
    addonmanager.kubernetes.io/mode: Reconcile
rules:
- apiGroups: [""]
  resources:
  - configmaps
  - secrets
  - nodes
  - pods
  - services
  - resourcequotas
  - replicationcontrollers
  - limitranges
  - persistentvolumeclaims
  - persistentvolumes
  - namespaces
  - endpoints
  verbs: ["list", "watch"]
- apiGroups: ["extensions"]
  resources:
  - daemonsets
  - deployments
  - replicasets
  verbs: ["list", "watch"]
- apiGroups: ["apps"]
  resources:
  - statefulsets
  verbs: ["list", "watch"]
- apiGroups: ["batch"]
  resources:
  - cronjobs
  - jobs
  verbs: ["list", "watch"]
- apiGroups: ["autoscaling"]
  resources:
  - horizontalpodautoscalers
  verbs: ["list", "watch"]
---
apiVersion: rbac.authorization.k8s.io/v1
kind: Role
metadata:
  name: kube-state-metrics-resizer
  namespace: kube-system
  labels:
    kubernetes.io/cluster-service: "true"
    addonmanager.kubernetes.io/mode: Reconcile
rules:
- apiGroups: [""]
  resources:
  - pods
  verbs: ["get"]
- apiGroups: ["extensions"]
  resources:
  - deployments
  resourceNames: ["kube-state-metrics"]
  verbs: ["get", "update"]
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: kube-state-metrics
  labels:
    kubernetes.io/cluster-service: "true"
    addonmanager.kubernetes.io/mode: Reconcile
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: kube-state-metrics
subjects:
- kind: ServiceAccount
  name: kube-state-metrics
  namespace: kube-system
---
apiVersion: rbac.authorization.k8s.io/v1
kind: RoleBinding
metadata:
  name: kube-state-metrics
  namespace: kube-system
  labels:
    kubernetes.io/cluster-service: "true"
    addonmanager.kubernetes.io/mode: Reconcile
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: Role
  name: kube-state-metrics-resizer
subjects:
- kind: ServiceAccount
  name: kube-state-metrics
  namespace: kube-system

Deploy kube-state-metrics-service.yaml:

[root@PH-K8S-M01 k8s-prometheus-grafana]# cat kube-state-metrics-service.yaml
apiVersion: v1
kind: Service
metadata:
  name: kube-state-metrics
  namespace: kube-system
  labels:
    kubernetes.io/cluster-service: "true"
    addonmanager.kubernetes.io/mode: Reconcile
    kubernetes.io/name: "kube-state-metrics"
  annotations:
    prometheus.io/scrape: 'true'
spec:
  ports:
  - name: http-metrics
    port: 8080
    targetPort: http-metrics
    protocol: TCP
  - name: telemetry
    port: 8081
    targetPort: telemetry
    protocol: TCP
  selector:
    k8s-app: kube-state-metrics

Deploy kube-state-metrics-deployment.yaml:

[root@PH-K8S-M01 k8s-prometheus-grafana]# cat kube-state-metrics-deployment.yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: kube-state-metrics
  namespace: kube-system
  labels:
    k8s-app: kube-state-metrics
    kubernetes.io/cluster-service: "true"
    addonmanager.kubernetes.io/mode: Reconcile
    version: v1.3.0
spec:
  selector:
    matchLabels:
      k8s-app: kube-state-metrics
      version: v1.3.0
  replicas: 1
  template:
    metadata:
      labels:
        k8s-app: kube-state-metrics
        version: v1.3.0
      annotations:
        scheduler.alpha.kubernetes.io/critical-pod: ''
    spec:
      priorityClassName: system-cluster-critical
      serviceAccountName: kube-state-metrics
      containers:
      - name: kube-state-metrics
        image: **kube-state-metrics:v1.3.0
        ports:
        - name: http-metrics
          containerPort: 8080
        - name: telemetry
          containerPort: 8081
        readinessProbe:
          httpGet:
            path: /healthz
            port: 8080
          initialDelaySeconds: 5
          timeoutSeconds: 5
      - name: addon-resizer
        image: **addon-resizer:1.8.3
        resources:
          limits:
            cpu: 100m
            memory: 30Mi
          requests:
            cpu: 100m
            memory: 30Mi
        env:
        - name: MY_POD_NAME
          valueFrom:
            fieldRef:
              fieldPath: metadata.name
        - name: MY_POD_NAMESPACE
          valueFrom:
            fieldRef:
              fieldPath: metadata.namespace
        volumeMounts:
        - name: config-volume
          mountPath: /etc/config
        command:
        - /pod_nanny
        - --config-dir=/etc/config
        - --container=kube-state-metrics
        - --cpu=100m
        - --extra-cpu=1m
        - --memory=100Mi
        - --extra-memory=2Mi
        - --threshold=5
        - --deployment=kube-state-metrics
      volumes:
      - name: config-volume
        configMap:
          name: kube-state-metrics-config
---
# Config map for resource configuration.
apiVersion: v1
kind: ConfigMap
metadata:
  name: kube-state-metrics-config
  namespace: kube-system
  labels:
    k8s-app: kube-state-metrics
    kubernetes.io/cluster-service: "true"
    addonmanager.kubernetes.io/mode: Reconcile
data:
  NannyConfiguration: |-
    apiVersion: nannyconfig/v1alpha1
    kind: NannyConfiguration

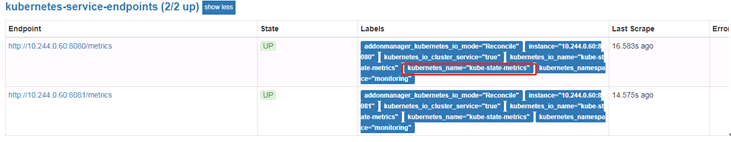

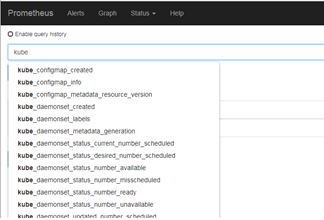

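Verification: in Prometheus, check that the kube-state-metrics target is being scraped and that the Graph page returns the kube-related metrics, as shown in the screenshots.

1.5 Basic PromQL Syntax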

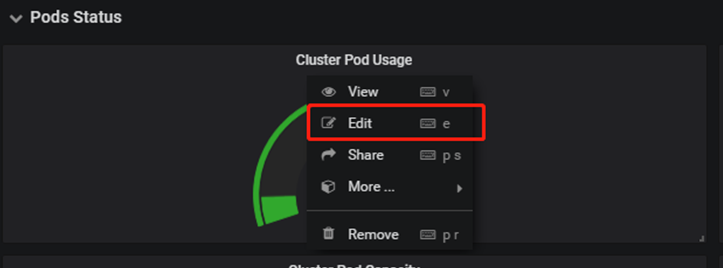

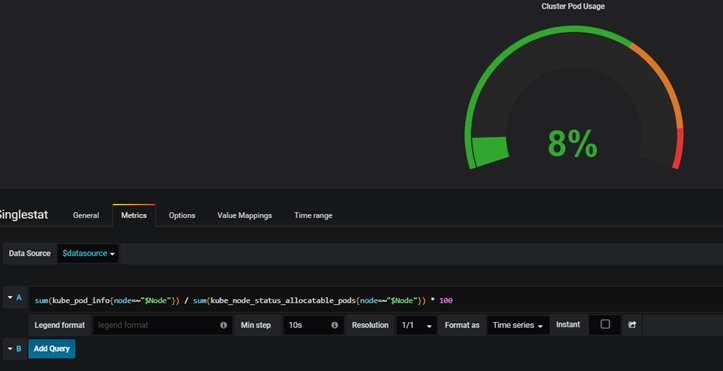

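How the metrics appear in Grafana:

To show a utilization figure, such as CPU utilization, use the rate function. It computes the per-second rate of change of a counter over a time window:

rate(<metric name>{<filter>}[<range>])

The following two functions are the ones most often used in Prometheus to compute increases or rates. Both require a range such as [1m]:

irate(): the instantaneous per-second rate of increase, computed from the last two samples in the window.

rate(): the average per-second rate of increase over the whole window.

For example, the per-second rate of non-idle CPU time over the last minute, per CPU and per mode: rate(node_cpu_seconds_total{mode!="idle"}[1m])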
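The simplified Python sketch below computes both functions from a list of `(timestamp, counter value)` samples, which shows why they behave differently. It assumes sorted samples and treats any drop in value as a counter reset. Unlike the real `rate()`, it does not extrapolate to the edges of the window, so its numbers will differ slightly from Prometheus.

```python
def simple_rate(samples):
    """Average per-second increase from the first to the last sample in the window."""
    if len(samples) < 2:
        return None
    total, prev = 0.0, samples[0][1]
    for _, value in samples[1:]:
        # A drop means the counter reset (e.g. process restart): count from 0 again.
        total += (value - prev) if value >= prev else value
        prev = value
    return total / (samples[-1][0] - samples[0][0])


def simple_irate(samples):
    """Per-second increase between the last two samples only."""
    if len(samples) < 2:
        return None
    (t1, v1), (t2, v2) = samples[-2], samples[-1]
    delta = (v2 - v1) if v2 >= v1 else v2
    return delta / (t2 - t1)


if __name__ == "__main__":
    # Hypothetical counter scraped every 15s with a jump in the last sample.
    window = [(0, 100), (15, 130), (30, 160), (45, 190), (60, 400)]
    print(simple_rate(window), simple_irate(window))
```

With these samples, the jump in the last sample barely moves `rate()` (5.0 per second) but dominates `irate()` (14.0 per second). The Prometheus documentation accordingly suggests `irate()` for graphing volatile counters and `rate()` for alerting.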

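Debug PromQL expressions in the Prometheus web UI before using them in Grafana. The expr of each rule in prometheus-rules is essentially the same as a Grafana panel metric, and it can be reused as-is or with small changes.

Node monitoring expressions

CPU utilization: irate over a 5-minute window gives each CPU's per-second idle time. Averaging this across the node's CPUs and subtracting it from 100% gives CPU utilization: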

100-(avg(irate(node_cpu_seconds_total{mode="idle"}[5m]))by(instance)*100)

Memory utilization: add free, cached, and buffer memory to get the available memory, divide by total memory, and subtract from 100%:

100-(node_memory_MemFree_bytes+node_memory_Cached_bytes+node_memory_Buffers_bytes)/node_memory_MemTotal_bytes*100

Disk utilization is computed the same way (here for the root filesystem):

100-(node_filesystem_free_bytes{mountpoint="/",fstype=~"ext4|xfs"}/node_filesystem_size_bytes{mountpoint="/",fstype=~"ext4|xfs"}*100)
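As a quick sanity check, here are the three node formulas as plain arithmetic in Python. The inputs in the usage lines are invented values, not real measurements.

```python
def cpu_usage_percent(idle_rates):
    """idle_rates: per-CPU irate(node_cpu_seconds_total{mode="idle"}[5m]) for one instance."""
    return 100 - sum(idle_rates) / len(idle_rates) * 100


def memory_usage_percent(free, cached, buffers, total):
    """Treat free + page cache + buffers as available memory."""
    return 100 - (free + cached + buffers) / total * 100


def disk_usage_percent(free_bytes, size_bytes):
    return 100 - free_bytes / size_bytes * 100


if __name__ == "__main__":
    GiB = 1024 ** 3
    print(cpu_usage_percent([0.5, 0.75, 1.0, 0.75]))                  # 4 CPUs, 75% idle on average
    print(memory_usage_percent(2 * GiB, 3 * GiB, 1 * GiB, 16 * GiB))
    print(disk_usage_percent(25 * GiB, 100 * GiB))
```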

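Container monitoring expressions

Target health: components such as the apiserver, scheduler, and controller-manager can be added as targets and monitored this way. `up` is 1 when a target is healthy and 0 when it is not: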

up == 0

Container CPU utilization: the container's CPU usage rate over 1 minute divided by its CPU limit:

sum(rate(container_cpu_usage_seconds_total{image!=""}[1m])) by (pod_name, namespace) / (sum(container_spec_cpu_quota{image!=""}/100000) by (pod_name, namespace)) * 100 > 80

Container memory utilization: the container's resident memory (RSS) divided by its memory limit:

sum(container_memory_rss{image!=""}) by(pod_name, namespace) / sum(container_spec_memory_limit_bytes{image!=""}) by(pod_name, namespace) * 100 != +inf > 80
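In the CPU rule, the constant 100000 converts `container_spec_cpu_quota` (microseconds of CPU per CFS period) into cores, assuming the default CFS period of 100000 µs (100 ms). The `!= +inf` filter in the memory rule presumably drops containers that have no memory limit. Their limit series is typically 0, which makes the ratio +Inf. The Python sketch below does both calculations under those assumptions.

```python
CFS_PERIOD_US = 100_000  # assumed default CFS period (100 ms), hence the /100000 in the rule


def container_cpu_percent(usage_cores, cpu_quota_us, period_us=CFS_PERIOD_US):
    """usage_cores = rate(container_cpu_usage_seconds_total[1m]); quota in microseconds per period."""
    limit_cores = cpu_quota_us / period_us
    return usage_cores / limit_cores * 100


def container_memory_percent(rss_bytes, limit_bytes):
    """RSS as a percentage of the memory limit; a 0 limit (no limit set) gives +Inf."""
    if limit_bytes == 0:
        return float("inf")  # PromQL division by zero yields +Inf instead of raising
    return rss_bytes / limit_bytes * 100
```

For example, a quota of 50000 means half a core, so a container using 0.25 cores is at 50% of its limit.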

Pod in the Failed phase (0 is normal, 1 is Failed):

sum (kube_pod_status_phase{phase="Failed"}) by (pod,namespace) > 0

Pod in the Pending phase (0 is normal, 1 is abnormal):

sum (kube_pod_status_phase{phase="Pending"}) by (pod,namespace) > 0

Container inbound network I/O; alert above 20 MB/s (tune to your environment):

sum (rate (container_network_receive_bytes_total{image!="",name=~"^k8s_.*"}[5m]) /1000) by (pod_name,namespace) > 20000

Container outbound network I/O; alert above 20 MB/s (tune to your environment):

sum (rate (container_network_transmit_bytes_total{image!="",name=~"^k8s_.*"}[5m]) /1000) by (pod_name,namespace) > 20000
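The network expressions divide bytes per second by 1000. The value compared against 20000 is therefore in KB/s (decimal units), and the threshold corresponds to 20 MB/s. A small Python sketch of the unit handling, with hypothetical traffic values:

```python
THRESHOLD = 20000  # value used in the PodNetworkReceive / PodNetworkTransmit rules


def to_kb_per_second(bytes_per_second):
    """The rules divide rate(...bytes_total) by 1000, i.e. convert B/s to KB/s."""
    return bytes_per_second / 1000


def exceeds_threshold(bytes_per_second):
    # 20000 KB/s == 20,000,000 B/s == 20 MB/s (decimal units); the rule uses a strict >
    return to_kb_per_second(bytes_per_second) > THRESHOLD
```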

Whether a container restarted, for example after running out of memory:

sum (changes (kube_pod_container_status_restarts_total[1m])) by (pod,namespace) > 0
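`changes()` counts how many times a series' value changed within the range. Any increment of the restart counter in the last minute therefore makes the result greater than 0. A minimal Python sketch of that counting over a list of invented sample values:

```python
def changes(values):
    """Count how often consecutive sample values differ, like PromQL changes()."""
    return sum(1 for previous, current in zip(values, values[1:]) if current != previous)


if __name__ == "__main__":
    # Hypothetical kube_pod_container_status_restarts_total samples within [1m]
    print(changes([3, 3, 4, 4]))
```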

2.1 Deploying and Using Grafana in Containers

Deploy grafana-svc.yaml:

[root@PH-K8S-M01 k8s-prometheus-grafana]# cat grafana-svc.yaml
apiVersion: v1
kind: Service
metadata:
  name: grafana
  namespace: kube-system
  labels:
    app: grafana
    component: core
spec:
  type: NodePort
  ports:
  - port: 3000
    targetPort: 3000
    nodePort: 30002
  selector:
    app: grafana
    component: core

Deploy grafana-deploy.yaml, which uses Grafana 5.4.2, a fairly recent release at the time of writing:

apiVersion: apps/v1
kind: Deployment
metadata:
  name: grafana-core
  namespace: kube-system
  labels:
    app: grafana
    component: core
spec:
  replicas: 1
  selector:
    matchLabels:
      app: grafana
      component: core
  template:
    metadata:
      labels:
        app: grafana
        component: core
    spec:
      containers:
      - image: grafana/grafana:5.4.2
        name: grafana-core
        imagePullPolicy: IfNotPresent
        # env:
        resources:
          # the CPU request (1000m) is below the limit (2000m), so the pod is Burstable rather than Guaranteed
          limits:
            cpu: 2000m
            memory: 2000Mi
          requests:
            cpu: 1000m
            memory: 2000Mi
        env:
        # The following env variables set up basic auth with the default admin user and admin password.
        - name: GF_AUTH_BASIC_ENABLED
          value: "true"
        - name: GF_AUTH_ANONYMOUS_ENABLED
          value: "false"
        # - name: GF_AUTH_ANONYMOUS_ORG_ROLE
        #   value: Admin
        # does not really work, because of template variables in exported dashboards:
        # - name: GF_DASHBOARDS_JSON_ENABLED
        #   value: "true"
        readinessProbe:
          httpGet:
            path: /login
            port: 3000
          # initialDelaySeconds: 30
          # timeoutSeconds: 1
        volumeMounts:
        - name: grafana-storage
          mountPath: /var/lib/grafana
      volumes:
      - name: grafana-storage
        hostPath:
          path: /var/lib/grafana
          type: DirectoryOrCreate

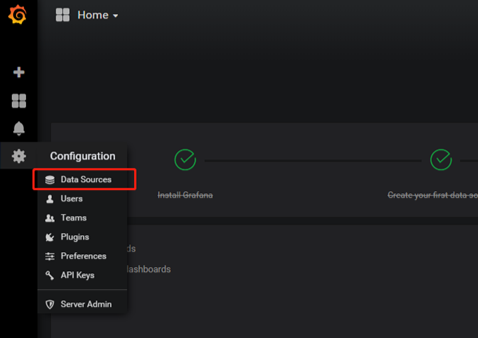

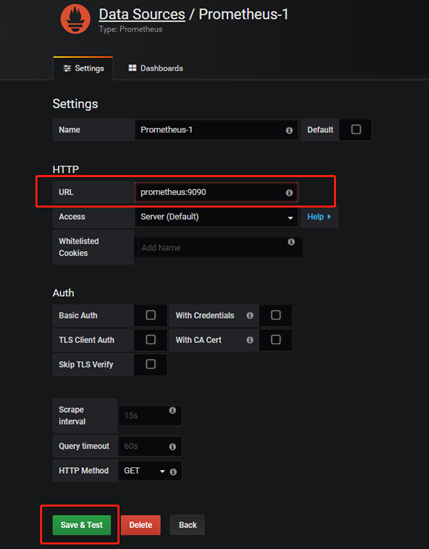

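2.2 Configuring the Prometheus Data Source in Grafana

Access URL: http://IP:30002

On first login, the default Grafana username and password are both admin.

Configure the data source: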

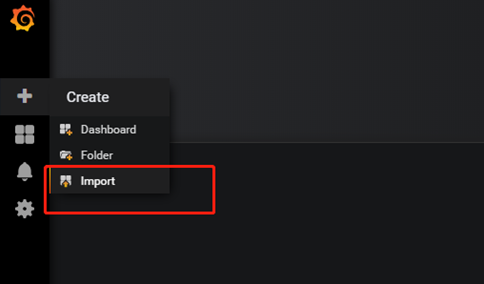

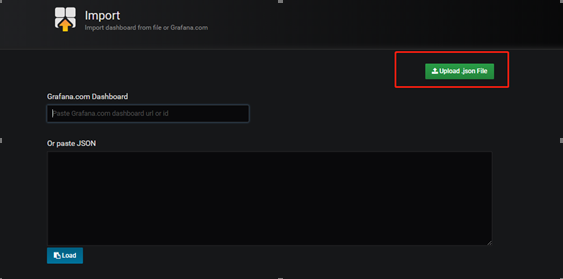

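2.3 Loading Custom Dashboards in Grafana

Import the predefined dashboards:

Kubernetes AllPod monitoring.json: monitors all pods, including historical ones.

Kubernetes 集羣資源監控.json (cluster resource monitoring): monitors the pods that are currently online.

Kubernetes Node監控.json (node monitoring): monitors the nodes.

Alternatively (recommended), enter a dashboard ID to download it online:

Cluster resource monitoring: 3119

Resource state monitoring: 6417

Node monitoring: 9276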

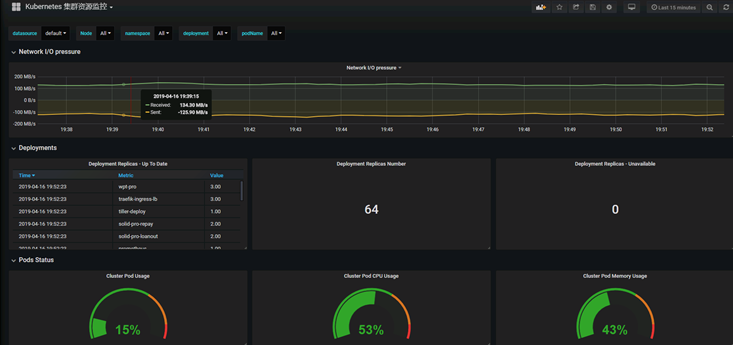

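The configured Grafana dashboards look like this:

3.1 Introduction to Alertmanager

Alertmanager receives the alerts sent by Prometheus. It supports a wide range of notification channels and can easily deduplicate, silence, and group alerts. Similar alerts are combined, which prevents alert storms and keeps the number of notifications small.

3.2 Deploying and Using Alertmanager in Containers

Deploy alertmanager-service.yaml, which sets a NodePort for external access: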

[root@PH-K8S-M01 k8s-prometheus-grafana]# cat alertmanager-service.yaml
apiVersion: v1
kind: Service
metadata:
  name: alertmanager
  namespace: kube-system
  labels:
    kubernetes.io/cluster-service: "true"
    addonmanager.kubernetes.io/mode: Reconcile
    kubernetes.io/name: "Alertmanager"
spec:
  type: NodePort
  ports:
  - name: http
    port: 80
    protocol: TCP
    targetPort: 9093
    nodePort: 30004
  selector:
    k8s-app: alertmanager
  #type: "ClusterIP"

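Deploy alertmanager-templates.yaml: the message template for WeChat Work (企業微信) alerts. The default template is cluttered and verbose. This template only takes effect after it is mounted into the Alertmanager server. Its variables come from the labels and annotations of the alerts, which in turn derive from the metrics Prometheus scraped.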

[root@PH-K8S-M01 k8s-prometheus-grafana]# cat alertmanager-templates.yaml
apiVersion: v1
kind: ConfigMap
metadata:
  name: alertmanager-templates
  namespace: kube-system
data:
  wechat.tmpl: |
    {{ define "wechat.default.message" }}
    {{ if gt (len .Alerts.Firing) 0 -}}
    Alerts Firing:
    {{ range .Alerts.Firing }}
    Severity: {{ .Labels.severity }}
    Alert name: {{ .Labels.alertname }}
    Instance: {{ .Labels.instance }}
    Summary: {{ .Annotations.summary }}
    Details: {{ .Annotations.description }}
    Started at: {{ .StartsAt.Format "2006-01-02 15:04:05" }}
    {{- end }}
    {{- end }}
    {{ if gt (len .Alerts.Resolved) 0 -}}
    Alerts Resolved:
    {{ range .Alerts.Resolved }}
    Severity: {{ .Labels.severity }}
    Alert name: {{ .Labels.alertname }}
    Instance: {{ .Labels.instance }}
    Summary: {{ .Annotations.summary }}
    Started at: {{ .StartsAt.Format "2006-01-02 15:04:05" }}
    Resolved at: {{ .EndsAt.Format "2006-01-02 15:04:05" }}
    {{- end }}
    {{- end }}
    {{- end }}

Deploy alertmanager-configmap.yaml. It configures the notification channels (here email and WeChat Work), the notification intervals, grouping, and routing.

[root@PH-K8S-M01 k8s-prometheus-grafana]# cat alertmanager-configmap.yaml

apiVersion: v1

kind: ConfigMap

metadata:

name: alertmanager-config

namespace: kube-system

labels:

kubernetes.io/cluster-service: "true"

addonmanager.kubernetes.io/mode: EnsureExists

data:

alertmanager.yml: |

global:

resolve_timeout: 5m ##多久沒有收到告警,默認告警已恢復

smtp_smarthost: '*' ##注意:這裏的mail-server要加端口,要不然發不出去

smtp_from: '*'

smtp_auth_username: '*'

smtp_auth_password: '*'

templates:

- '/etc/alertmanager-templates/*.tmpl' ##指定告警模板路徑

receivers:

- name: default-receiver

email_configs:

- to: 'wujqc@*'

send_resolved: true ##是否發送告警恢復郵件

wechat_configs:

- send_resolved: true ##是否發送告警恢復微信

agent_id: '*' ##應用頁面上的配置

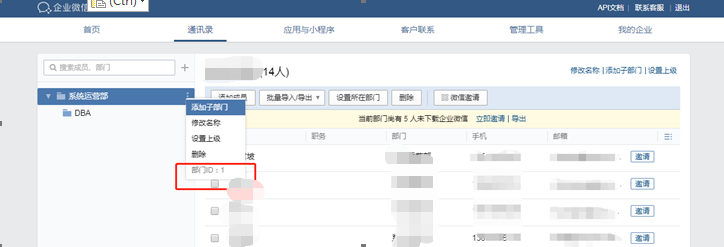

to_party: '1' ##企業微信後臺->通訊錄->部門ID

corp_id: '*' ##應用頁面上的配置

api_secret: '*' ##應用頁面上的配置

route:

group_interval: 10s ##第一次以後的告警分組要等到多久繼續

group_by: ['alertname'] ##根據prometheus-rule的alertname進行告警分組

group_wait: 10s ##第一次告警分組要等待多久

receiver: default-receiver ##告警組名稱要與receivers保持一致

repeat_interval: 5m ##相同告警間隔多久發送下一條

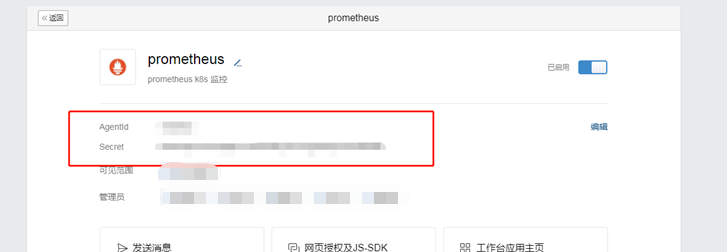

The screenshots show where agent_id and api_secret, to_party, and corp_id are found in the WeChat Work admin console.
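The route above sets `group_by: ['alertname']`. Every alert that shares an alertname is therefore batched into one notification, whichever pod or instance it came from. The Python sketch below models only that bucketing step, not the group_wait/group_interval timers. The alert label sets are hypothetical.

```python
from collections import defaultdict


def group_alerts(alerts, group_by):
    """Bucket alerts by the values of the group_by labels (one notification per bucket)."""
    groups = defaultdict(list)
    for alert in alerts:
        # A label missing from an alert counts as an empty value.
        key = tuple((name, alert.get(name, "")) for name in group_by)
        groups[key].append(alert)
    return dict(groups)


if __name__ == "__main__":
    firing = [
        {"alertname": "PodCpuuse", "pod_name": "web-1"},
        {"alertname": "PodCpuuse", "pod_name": "web-2"},
        {"alertname": "InstanceDown", "instance": "10.0.0.5:9100"},
    ]
    for key, members in group_alerts(firing, ["alertname"]).items():
        print(key, len(members))
```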

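Alertmanager can route alerts by label value, for example sending MySQL alerts to the DBA team and architecture alerts to the architecture team. This is done with child routes under `routes` that regex-match labels. An alert that matches no child route falls back to the root route's receiver, default-receiver: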

route:
  receiver: 'default-receiver'
  group_wait: 30s
  group_interval: 5m
  repeat_interval: 4h
  group_by: [cluster, alertname]
  # All alerts that do not match the following child routes
  # will remain at the root node and be dispatched to 'default-receiver'.
  routes:
  # All alerts with service=mysql or service=cassandra
  # are dispatched to the database pager.
  - receiver: 'database-pager'
    group_wait: 10s
    match_re:   ## Regex-match labels to decide which route handles the alert
      service: mysql|cassandra
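The routing tree is evaluated from the top down. An alert goes to the first child route whose `match_re` conditions all match; otherwise it stays at the root and uses `default-receiver`. Alertmanager anchors these regular expressions, so `mysql|cassandra` does not match `mysql-proxy`. The Python sketch below is simplified: it ignores `continue: true` and nested child routes.

```python
import re


def pick_receiver(alert_labels, routes, default_receiver):
    """Return the receiver of the first child route whose match_re conditions all match."""
    for route in routes:
        conditions = route.get("match_re", {})
        # A missing label is treated as an empty string; fullmatch mirrors the anchoring.
        if all(re.fullmatch(rx, alert_labels.get(name, "")) for name, rx in conditions.items()):
            return route["receiver"]
    return default_receiver  # no child route matched: stay at the root route


ROUTES = [{"receiver": "database-pager", "match_re": {"service": "mysql|cassandra"}}]
```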

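Deploy alertmanager-deployment.yaml. It deploys the Alertmanager server and mounts the Alertmanager ConfigMap that holds the alert settings. A configmap-reload sidecar container is also added to the pod. With it, changes to the Alertmanager ConfigMap are reloaded without restarting the Alertmanager pod.

Note that alertmanager-templates must also be mounted; otherwise the notification template has no effect.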

[root@PH-K8S-M01 k8s-prometheus-grafana]# cat alertmanager-deployment.yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: alertmanager
  namespace: kube-system
  labels:
    k8s-app: alertmanager
    kubernetes.io/cluster-service: "true"
    addonmanager.kubernetes.io/mode: Reconcile
    version: v0.14.0
spec:
  replicas: 1
  selector:
    matchLabels:
      k8s-app: alertmanager
      version: v0.14.0
  template:
    metadata:
      labels:
        k8s-app: alertmanager
        version: v0.14.0
      annotations:
        scheduler.alpha.kubernetes.io/critical-pod: ''
    spec:
      priorityClassName: system-cluster-critical
      containers:
      - name: prometheus-alertmanager
        image: "prom/alertmanager:v0.14.0"
        imagePullPolicy: "IfNotPresent"
        args:
        - --config.file=/etc/config/alertmanager.yml
        - --storage.path=/data
        - --web.external-url=/
        ports:
        - containerPort: 9093
        readinessProbe:
          httpGet:
            path: /#/status
            port: 9093
          initialDelaySeconds: 30
          timeoutSeconds: 30
        volumeMounts:
        - name: config-volume
          mountPath: /etc/config
        - name: storage-volume
          mountPath: "/data"
          subPath: ""
        - name: templates-volume
          mountPath: /etc/alertmanager-templates   ## Must match the templates path in the Alertmanager ConfigMap
        - name: localtime
          mountPath: /etc/localtime
        resources:
          limits:
            cpu: 10m
            memory: 50Mi
          requests:
            cpu: 10m
            memory: 50Mi
      - name: prometheus-alertmanager-configmap-reload
        image: "jimmidyson/configmap-reload:v0.1"
        imagePullPolicy: "IfNotPresent"
        args:
        - --volume-dir=/etc/config
        - --webhook-url=http://localhost:9093/-/reload
        volumeMounts:
        - name: config-volume
          mountPath: /etc/config
          readOnly: true
        resources:
          limits:
            cpu: 10m
            memory: 10Mi
          requests:
            cpu: 10m
            memory: 10Mi
      volumes:
      - name: config-volume
        configMap:
          name: alertmanager-config
      - name: storage-volume
        emptyDir: {}
      - name: templates-volume
        configMap:
          name: alertmanager-templates   ## Mount the notification templates
      - name: localtime
        hostPath:
          path: /etc/localtime

驗證:可以手動觸發prometheus-rule加載的配置,進行報警驗證

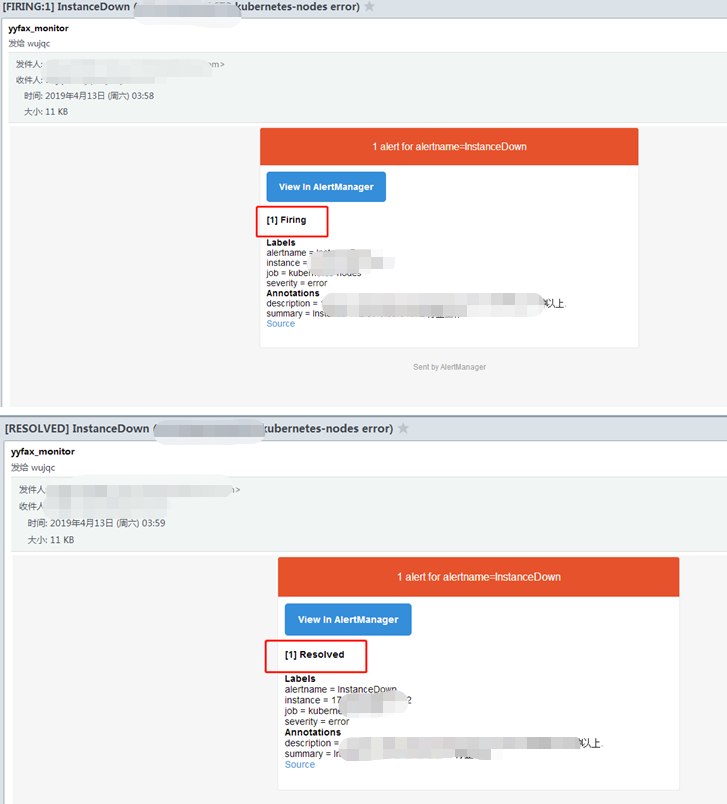

郵件報警:

Fring表示觸發告警

Resolved表示告警恢復

3.3 Alertmanager告警狀態

Inactive:這裏什麼都沒有發生。

Pending:已觸發閾值,但未滿足告警持續時間

Firing:已觸發閾值且滿足告警持續時間。警報發送給接受者。
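The rule's `for` clause drives the transition from Pending to Firing. The Python sketch below replays a sequence of rule evaluations, using the 15s `evaluation_interval` from configmap.yaml and the `for: 2m` of InstanceDown. It is a simplified model that ignores how resolved alerts are kept and re-sent.

```python
def alert_states(condition_results, eval_interval_s, for_s):
    """Return the alert state after each rule evaluation.

    condition_results[i] is True when the rule's expr returned a result at
    evaluation i (evaluations happen every eval_interval_s seconds).
    """
    states = []
    active_since = None  # time the condition first became true, None while inactive
    for i, condition_true in enumerate(condition_results):
        now = i * eval_interval_s
        if not condition_true:
            active_since = None
            states.append("inactive")
            continue
        if active_since is None:
            active_since = now
        # Firing only once the condition has held for at least `for` seconds.
        states.append("firing" if now - active_since >= for_s else "pending")
    return states


if __name__ == "__main__":
    # expr true for 10 evaluations, then false; 15s interval, for: 2m
    print(alert_states([True] * 10 + [False], 15, 120))
```

In this model the alert stays Pending for eight evaluations (0 to 105 s) and turns Firing at 120 s. It returns to Inactive as soon as the expression stops returning a result.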

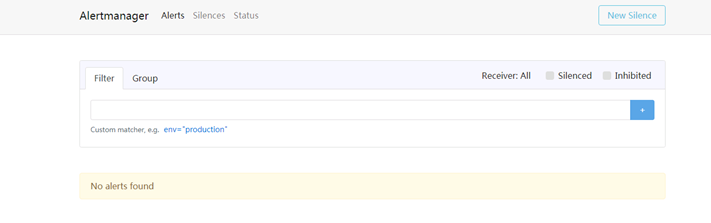

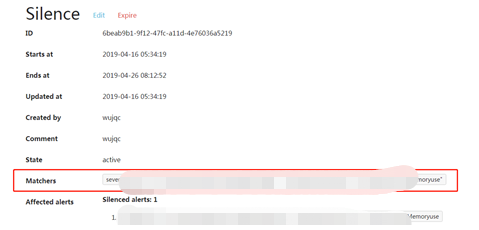

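3.4 Silencing Alerts in Alertmanager

Open the Alertmanager web UI at:

http://IP:30004

Use New Silence in the top-right corner to create a silence manually, which puts alerts that should be ignored into maintenance mode. A silence selects alerts by label, and regex matching is supported, for example: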