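I. Preface

I spent most of the second half of 2013 rolling out LVS. It was a bumpy road with all sorts of problems along the way, so thanks to my colleagues for their help and guidance, and to my managers for their trust. This post summarizes in detail how we deployed our LVS cluster (ospf+fullnat) and the pitfalls to watch for. First, some background on how LVS is used at our company. It has gone through three phases: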

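Phase 1: one LVS scheduler pair (active/standby) per service. Pros and cons:

Pros: services are isolated from each other, so a problem with service A does not affect service B.

Cons: 1. hard to manage; 2. with many LBs, virtual router ID conflicts caused service failures; 3. once a service's traffic grows large enough, the LB becomes the bottleneck.

Phase 2: one LVS scheduler pair (active/standby) per IDC. Pros and cons:

Pros: all services are managed centrally.

Cons: 1. a traffic burst on service A (beyond what the LB can handle) affects every service on the cluster; 2. the LB easily becomes the bottleneck.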

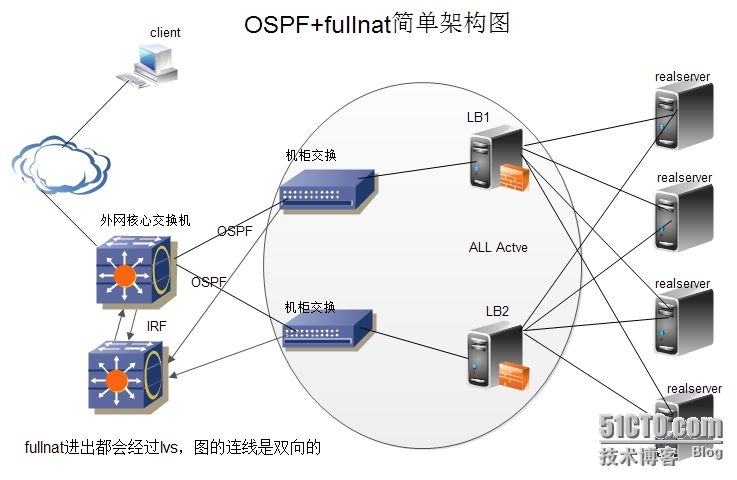

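Phase 3: one scheduler cluster per IDC (ospf+fullnat cluster mode). Pros and cons:

Pros: 1. LB nodes scale out freely and linearly (the maximum number of nodes is limited by the number of equal-cost routes the layer-3 device allows); 2. all services are managed centrally; 3. LB resources are fully used: every node is active and there is no standby machine.

Cons: deployment is relatively complex.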

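II. Environment

2. fullnat is an LVS forwarding mode open-sourced by Taobao. The main idea is to introduce a local address (an internal IP) so that cip->vip is translated into lip->rip. Both lip and rip are internal IDC addresses and can communicate across VLANs, which is exactly what we need because our internal network is split into VLANs.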
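To make the address rewrite concrete, here is a tiny sketch that just prints what happens to one flow in each direction. The function name `fullnat_flow` and the addresses you pass in are illustrative assumptions; nothing here touches the kernel or a live LB.

```bash
# Illustrative only: print how FULLNAT rewrites one flow in each direction.
# Arguments: client IP, VIP, local address (lip), realserver IP (rip).
fullnat_flow() (
    cip=$1 vip=$2 lip=$3 rip=$4
    # Inbound: the LB replaces both source and destination.
    echo "request: $cip -> $vip => $lip -> $rip"
    # Return: the realserver answers the lip, and the LB restores vip -> cip.
    echo "reply: $rip -> $lip => $vip -> $cip"
)
```

For example, `fullnat_flow 198.51.100.7 114.11x.9x.185 10.10.251.2 10.10.2.240` shows why the realserver only ever sees an address from the local address range, which is the reason the toa kernel module is needed later to recover the client IP.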

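3. Environment details

    Software:
      OS: CentOS 6.4
      keepalived: v1.2.2
      ospfd: version 0.99.20
      zebra: version 0.99.20
    Switches:
      Public core switch IP: 114.11x.9x.1
      Internal core switch IP: 10.10.2.1
      LB1 public rack switch IP: 114.11x.9x.122
      LB2 public rack switch IP: 114.11x.9x.160
      LB1 internal rack switch IP: 10.10.15.254
      LB2 internal rack switch IP: 10.10.11.254
    LB1:
      Scheduler IP (public side, bond1): 10.10.254.18/30   ## the public side needs a private address that reaches the core switch
      Scheduler IP (internal side, bond0): 10.10.15.77
      Internal private range for distribution: 10.10.251.0/24   ## local address
      Gateway of the public-side OSPF forwarding subnet: 10.10.254.17
    LB2:
      Scheduler IP (public side, bond1): 10.10.254.22/30   ## the public side needs a private address that reaches the core switch
      Scheduler IP (internal side, bond0): 10.10.11.77
      Internal private range for distribution: 10.10.250.0/24   ## local address
      Gateway of the public-side OSPF forwarding subnet: 10.10.254.21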

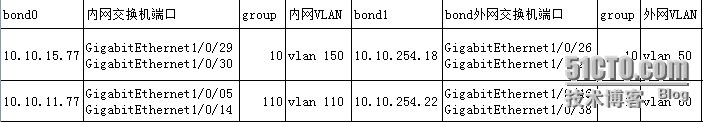

To increase network throughput and provide redundancy, the NICs on our LBs are bonded; see the figure for details.

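III. Deployment

The deployment has three major parts: NIC bonding, OSPF configuration, and LVS configuration. They are covered in that order below.

A. NIC bonding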

1. Server-side configuration (captured on lvs_cluster_C1, which holds the LB2 addresses from the environment list; the LB1 values are given in the comments):

    [root@lvs_cluster_C1 ~]# vi /etc/sysconfig/network-scripts/ifcfg-bond0
    DEVICE=bond0
    ONBOOT=yes
    BOOTPROTO=static
    IPADDR=10.10.11.77   ## LB1 uses 10.10.15.77
    NETMASK=255.255.255.0
    USERCTL=no
    TYPE=Ethernet

    [root@lvs_cluster_C1 ~]# vi /etc/sysconfig/network-scripts/ifcfg-bond1
    DEVICE=bond1
    ONBOOT=yes
    BOOTPROTO=static
    IPADDR=10.10.254.22   ## LB1 uses 10.10.254.18
    NETMASK=255.255.255.252
    USERCTL=no
    TYPE=Ethernet

    [root@lvs_cluster_C1 ~]# vi /etc/sysconfig/network-scripts/ifcfg-eth0
    DEVICE=eth0
    BOOTPROTO=none
    ONBOOT=yes
    USERCTL=no
    MASTER=bond0
    SLAVE=yes

    [root@lvs_cluster_C1 ~]# vi /etc/sysconfig/network-scripts/ifcfg-eth1
    DEVICE=eth1
    BOOTPROTO=none
    ONBOOT=yes
    USERCTL=no
    MASTER=bond0
    SLAVE=yes

    [root@lvs_cluster_C1 ~]# vi /etc/sysconfig/network-scripts/ifcfg-eth2
    DEVICE=eth2
    BOOTPROTO=none
    ONBOOT=yes
    USERCTL=no
    MASTER=bond1
    SLAVE=yes

    [root@lvs_cluster_C1 ~]# vi /etc/sysconfig/network-scripts/ifcfg-eth3
    DEVICE=eth3
    BOOTPROTO=none
    ONBOOT=yes
    USERCTL=no
    MASTER=bond1
    SLAVE=yes

    [root@lvs_cluster_C1 ~]# vi /etc/modprobe.d/openfwwf.conf
    options b43 nohwcrypt=1 qos=0
    # The bonding modes are described in an earlier post of mine, or search Baidu/Google
    alias bond0 bonding
    options bond0 miimon=100 mode=0
    alias bond1 bonding
    options bond1 miimon=100 mode=0
    alias net-pf-10 off
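After restarting the network it is worth confirming that both slaves of each bond are actually up. The sketch below counts slaves whose MII status is `up` in a bonding status file; the function name `bond_slaves_up` is my own, and it assumes the usual layout of `/proc/net/bonding/<bond>` (a `Slave Interface:` line followed by that slave's `MII Status:` line).

```bash
# Count the slaves reported as "up" in a bonding status file.
# $1: path to the status file, e.g. /proc/net/bonding/bond0
bond_slaves_up() {
    awk '
        /^Slave Interface:/ { in_slave = 1; next }        # start of a slave section
        in_slave && /^MII Status:/ {                       # first status line of that slave
            if ($3 == "up") up++
            in_slave = 0
        }
        END { print up + 0 }                               # print 0 when no slave is up
    ' "$1"
}
```

With the configuration above, `bond_slaves_up /proc/net/bonding/bond0` and `bond_slaves_up /proc/net/bonding/bond1` should each print 2; anything lower means a cable, switch port, or aggregation group needs checking.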

2. Switch-side configuration

    bond0: 10.10.15.77
    eth0 - GigabitEthernet1/0/29
    eth1 - GigabitEthernet1/0/30
    On the internal rack switch for LB1:
    interface Bridge-Aggregation10
     port access vlan 150
    interface GigabitEthernet1/0/29
     port link-aggregation group 10
    interface GigabitEthernet1/0/30
     port link-aggregation group 10

    bond1: 10.10.254.18
    GigabitEthernet1/0/26
    GigabitEthernet1/0/28
    On the public rack switch for LB1:
    vlan 50
    interface Bridge-Aggregation10
     port access vlan 50
    interface GigabitEthernet1/0/26
     port link-aggregation group 10
    interface GigabitEthernet1/0/28
     port link-aggregation group 10

    bond0: 10.10.11.77
    eth0: GigabitEthernet1/0/14
    eth1: GigabitEthernet1/0/05
    On the internal rack switch for LB2:
    interface Bridge-Aggregation110
     port access vlan 110
    interface GigabitEthernet1/0/05
     port link-aggregation group 110
    interface GigabitEthernet1/0/14
     port link-aggregation group 110

    bond1: 10.10.254.22
    eth2: GigabitEthernet1/0/38
    eth3: GigabitEthernet1/0/46
    On the public rack switch for LB2:
    vlan 60
    interface Bridge-Aggregation110
     port access vlan 60
    interface GigabitEthernet1/0/38
     port link-aggregation group 110
    interface GigabitEthernet1/0/46
     port link-aggregation group 110

    display link-aggregation verbose   # check that the aggregation state is OK

B. OSPF configuration

1. Switch-side configuration:

    On the public core switch:
    vlan 50
    vlan 60
    interface Vlan-interface50
     ip address 10.10.254.17 255.255.255.252
     ospf timer hello 1
     ospf timer dead 4
     ospf dr-priority 96
    interface Vlan-interface60
     ip address 10.10.254.21 255.255.255.252
     ospf timer hello 1
     ospf timer dead 4
     ospf dr-priority 95
    # OSPF parameters: "timer hello" is the interval between hello packets and
    # "timer dead" is the neighbor dead time. The defaults are 10 and 40 seconds.
    # Hello packets maintain the OSPF neighbor relationship. Here one is sent every
    # second, and if none arrives within 4 seconds the neighbor is considered lost
    # and the routes are recalculated.
    ospf 1
     area 0.0.0.0
      network 10.10.254.16 0.0.0.3
      network 10.10.254.20 0.0.0.3

    On the internal core switch:
    interface Vlan-interface110
     ip address 10.10.250.1 255.255.255.0 sub
    interface Vlan-interface150
     ip address 10.10.251.1 255.255.255.0 sub

2. Server-side configuration

    mkdir -p /etc/quagga/
    mkdir -p /var/log/quagga/
    chmod -R 777 /var/log/quagga/

Configuration files (the per-LB notes are on their own `!` comment lines):

    cat /etc/quagga/zebra.conf
    ! LB2: hostname lvs_cluster_C1
    hostname lvs_cluster_C2

    cat /etc/quagga/ospfd.conf
    log file /var/log/quagga/ospfd.log
    log stdout
    log syslog
    interface bond1
     ip ospf hello-interval 1
     ip ospf dead-interval 4
    router ospf
     ! LB2: 10.10.254.21
     ospf router-id 10.10.254.17
     log-adjacency-changes
     auto-cost reference-bandwidth 1000
     network 114.11x.9x.0/24 area 0.0.0.0
     ! LB2: 10.10.254.20/30
     network 10.10.254.16/30 area 0.0.0.0
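The /30 in the `network` statement is simply the subnet that contains the LB's bond1 address. When adding more LBs it is easy to get this wrong by hand, so here is a small sketch that derives it; `net30` is a hypothetical helper name and it only handles /30 prefixes.

```bash
# Print the /30 network that contains a given IPv4 address.
# $1: dotted-quad address, e.g. the bond1 address of an LB
net30() (
    IFS=.                 # split the address on dots (scoped to this subshell)
    set -- $1
    # A /30 covers 4 addresses, so round the last octet down to a multiple of 4.
    echo "$1.$2.$3.$(( $4 / 4 * 4 ))/30"
)
```

`net30 10.10.254.18` gives 10.10.254.16/30 and `net30 10.10.254.22` gives 10.10.254.20/30, which match the two `network` lines used on the LBs and on the core switch.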

The ospfd init script:

    [root@lvs_cluster_C1 ~]# cat /etc/init.d/ospfd
    #!/bin/bash
    # chkconfig: - 16 84
    # config: /etc/quagga/ospfd.conf
    ### BEGIN INIT INFO
    # Provides: ospfd
    # Short-Description: A OSPF v2 routing engine
    # Description: An OSPF v2 routing engine for use with Zebra
    ### END INIT INFO

    # source function library
    . /etc/rc.d/init.d/functions
    # Get network config
    . /etc/sysconfig/network
    # quagga command line options
    . /etc/sysconfig/quagga

    RETVAL=0
    PROG="ospfd"
    cmd=ospfd
    LOCK_FILE=/var/lock/subsys/ospfd
    CONF_FILE=/etc/quagga/ospfd.conf

    case "$1" in
      start)
        # Check that networking is up.
        [ "${NETWORKING}" = "no" ] && exit 1
        # The process must be configured first.
        [ -f $CONF_FILE ] || exit 6
        if [ `id -u` -ne 0 ]; then
            echo $"Insufficient privilege" 1>&2
            exit 4
        fi
        echo -n $"Starting $PROG: "
        daemon $cmd -d $OSPFD_OPTS
        RETVAL=$?
        [ $RETVAL -eq 0 ] && touch $LOCK_FILE
        echo
        ;;
      stop)
        echo -n $"Shutting down $PROG: "
        killproc $cmd
        RETVAL=$?
        [ $RETVAL -eq 0 ] && rm -f $LOCK_FILE
        echo
        ;;
      restart|reload|force-reload)
        $0 stop
        $0 start
        RETVAL=$?
        ;;
      condrestart|try-restart)
        if [ -f $LOCK_FILE ]; then
            $0 stop
            $0 start
        fi
        RETVAL=$?
        ;;
      status)
        status $cmd
        RETVAL=$?
        ;;
      *)
        echo $"Usage: $PROG {start|stop|restart|reload|force-reload|try-restart|status}"
        exit 2
    esac

    exit $RETVAL

The zebra init script:

    [root@lvs_cluster_C1 ~]# cat /etc/init.d/zebra
    #!/bin/bash
    # chkconfig: - 15 85
    # config: /etc/quagga/zebra.conf
    ### BEGIN INIT INFO
    # Provides: zebra
    # Short-Description: GNU Zebra routing manager
    # Description: GNU Zebra routing manager
    ### END INIT INFO

    # source function library
    . /etc/rc.d/init.d/functions
    # quagga command line options
    . /etc/sysconfig/quagga

    RETVAL=0
    PROG="zebra"
    cmd=zebra
    LOCK_FILE=/var/lock/subsys/zebra
    CONF_FILE=/etc/quagga/zebra.conf

    case "$1" in
      start)
        # Check that networking is up.
        [ "${NETWORKING}" = "no" ] && exit 1
        # The process must be configured first.
        [ -f $CONF_FILE ] || exit 6
        if [ `id -u` -ne 0 ]; then
            echo $"Insufficient privilege" 1>&2
            exit 4
        fi
        echo -n $"Starting $PROG: "
        /sbin/ip route flush proto zebra
        daemon $cmd -d $ZEBRA_OPTS
        RETVAL=$?
        [ $RETVAL -eq 0 ] && touch $LOCK_FILE
        echo
        ;;
      stop)
        echo -n $"Shutting down $PROG: "
        killproc $cmd
        RETVAL=$?
        [ $RETVAL -eq 0 ] && rm -f $LOCK_FILE
        echo
        ;;
      restart|reload|force-reload)
        $0 stop
        $0 start
        RETVAL=$?
        ;;
      condrestart|try-restart)
        if [ -f $LOCK_FILE ]; then
            $0 stop
            $0 start
        fi
        RETVAL=$?
        ;;
      status)
        status $cmd
        RETVAL=$?
        ;;
      *)
        echo $"Usage: $0 {start|stop|restart|reload|force-reload|try-restart|status}"
        exit 2
    esac

    exit $RETVAL

The quagga options file:

    [root@lvs_cluster_C1 ~]# cat /etc/sysconfig/quagga
    #
    # Default: Bind all daemon vtys to the loopback(s) only
    #
    QCONFDIR="/etc/quagga"
    BGPD_OPTS="-A 127.0.0.1 -f ${QCONFDIR}/bgpd.conf"
    OSPF6D_OPTS="-A ::1 -f ${QCONFDIR}/ospf6d.conf"
    OSPFD_OPTS="-A 127.0.0.1 -f ${QCONFDIR}/ospfd.conf"
    RIPD_OPTS="-A 127.0.0.1 -f ${QCONFDIR}/ripd.conf"
    RIPNGD_OPTS="-A ::1 -f ${QCONFDIR}/ripngd.conf"
    ZEBRA_OPTS="-A 127.0.0.1 -f ${QCONFDIR}/zebra.conf"
    ISISD_OPTS="-A ::1 -f ${QCONFDIR}/isisd.conf"
    # Watchquagga configuration (please check timer values before using):
    WATCH_OPTS=""
    WATCH_DAEMONS="zebra bgpd ospfd ospf6d ripd ripngd"
    # To enable restarts, uncomment this line (but first be sure to edit
    # the WATCH_DAEMONS line to reflect the daemons you are actually using):
    #WATCH_OPTS="-Az -b_ -r/sbin/service_%s_restart -s/sbin/service_%s_start -k/sbin/service_%s_stop"

3. Starting the services:

    /etc/init.d/zebra start && chkconfig zebra on
    /etc/init.d/ospfd start && chkconfig ospfd on

PS: start zebra first and ospfd second, otherwise the LB will not learn any routes.
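Once both daemons are running, the adjacency with the core switch should reach the Full state. A rough way to check this from a script is to count Full neighbors in the output of Quagga's `show ip ospf neighbor`. The helper name `ospf_full_neighbors` is my own, it assumes the state is the third column of each neighbor row, and whether `vtysh` is installed by the lvs-tools package is also an assumption.

```bash
# Count OSPF neighbors in the Full state.
# Reads the output of "show ip ospf neighbor" on stdin.
ospf_full_neighbors() {
    # Neighbor rows look like: <router-id> <pri> <state>/<role> ...
    # The header row has "Pri" in column 3, so it never matches.
    awk '$3 ~ /^Full/ { n++ } END { print n + 0 }'
}
```

Typical use on an LB would be `vtysh -c 'show ip ospf neighbor' | ospf_full_neighbors`; with one uplink VLAN per LB as configured above, I would expect it to print 1.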

C. LVS deployment

1. Installing the components

    # Install the kernel with fullnat support (open-sourced by Taobao)
    rpm -ivh kernel-2.6.32-220.23.3.el6.x86_64.rpm kernel-firmware-2.6.32-220.23.3.el6.x86_64.rpm --force
    # Install lvs-tools (ipvsadm, keepalived, quagga). These tools are patched for the new kernel, so do not use the stock versions
    rpm -ivh lvs-tools-1.0.0-77.el6.x86_64.rpm

2. Adding the local_address range

    cat /opt/sbin/ipadd.sh
    #!/bin/bash
    arg=$1
    dev=bond0
    network="10.10.251"   ## LB2: 10.10.250
    seq="2 254"

    function start() {
        for i in `seq $seq`
        do
            ip addr add $network.$i/32 dev $dev
        done
    }

    function stop() {
        for i in `seq $seq`
        do
            ip addr del $network.$i/32 dev $dev
        done
    }

    case "$arg" in
        start)
            start
            ;;
        stop)
            stop
            ;;
        restart)
            stop
            start
            ;;
    esac

    ## Run at boot. The script does nothing without an argument, so pass "start".
    echo "/opt/sbin/ipadd.sh start" >> /etc/rc.local
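Before letting a script add 253 addresses to a production interface, I find it useful to print the commands first. The sketch below is a dry-run variant of the same loop; `laddr_cmds` is a hypothetical name, and it only echoes the `ip addr` commands instead of running them.

```bash
# Print (do not run) the ip commands for the .2-.254 local addresses.
# $1: action (add or del), $2: first three octets, $3: device
laddr_cmds() (
    for i in $(seq 2 254); do
        echo "ip addr $1 $2.$i/32 dev $3"
    done
)
```

For LB1, `laddr_cmds add 10.10.251 bond0 | head` shows the first few commands; after reviewing them, the same output can be piped to `sh` as root. Afterwards `ip -4 addr show dev bond0` should list the new /32 addresses.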

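3. The keepalived configuration files

1> Overview of the configuration files

    .
    ├── gobal_module            ## global configuration file
    ├── info.txt                ## records which services are deployed on the cluster
    ├── keepalived.conf         ## main configuration file
    ├── kis_ops_test.conf       ## per-service configuration file
    ├── local_address.conf      ## local_address
    ├── lvs_module              ## includes for all services
    └── realserver              # rs directory
        └── kis_ops_test_80.conf    ## realserver configuration for the service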

2> Contents of the configuration files

    [root@lvs_cluster_C1 keepalived]# cat /etc/keepalived/gobal_module
    ! global configure file
    global_defs {
        notification_email {
        }
        notification_email_from [email protected]
        smtp_server 127.0.0.1
        smtp_connect_timeout 30
        router_id LVS_CLUSTER
    }

    [root@lvs_cluster_C1 keepalived]# cat /etc/keepalived/local_address.conf
    local_address_group laddr_g1 {
        10.10.250.2-254   ## LB1: 10.10.251.2-254
    }

PS: the local_address range must be different on every LB.

    [root@lvs_cluster_C1 keepalived]# cat /etc/keepalived/lvs_module
    include ./kis_ops_test.conf   # test service

    [root@lvs_cluster_C1 keepalived]# cat /etc/keepalived/kis_ops_test.conf
    # pull in the local_address definition
    include ./local_address.conf
    # VIP group; it may contain several VIPs
    virtual_server_group kis_ops_test_80 {
        114.11x.9x.185 80   # kis_ops_test
    }
    virtual_server group kis_ops_test_80 {
        delay_loop 7
        lb_algo wrr
        lb_kind FNAT
        protocol TCP
        nat_mask 255.255.255.0
        persistence_timeout 0   # session persistence; default is 0
        syn_proxy               ## enabling this effectively protects against SYN flood attacks
        laddr_group_name laddr_g1
        alpha                   # alpha mode: at startup each rs is considered down and is added to the vs pool only after its health check passes
        omega                   # omega mode: when rs are removed (for example on shutdown), the corresponding down scripts are run (the rs notify_down, quorum_down)
        quorum 1                # threshold for the service to be considered up (sum of the weights of the healthy rs)
        hysteresis 0            # hysteresis, used together with quorum
        # The scripts below run when the total rises above or falls below the threshold.
        quorum_up " ip addr add 114.11x.9x.185/32 dev lo ;"
        quorum_down " ip addr del 114.11x.9x.185/32 dev lo ;"
        include ./realserver/kis_ops_test_80.conf
    }

    [root@lvs_cluster_C1 ~]# cat /etc/keepalived/realserver/kis_ops_test_80.conf
    #real_server 10.10.2.240 80 {
    #    weight 1
    #    inhibit_on_failure
    #    TCP_CHECK {
    #        connect_timeout 3
    #        nb_get_retry 3          ## has no effect with TCP_CHECK
    #        delay_before_retry 3    ## has no effect with TCP_CHECK
    #        connect_port 80
    #    }
    #}
    real_server 10.10.2.240 80 {
        weight 1
        inhibit_on_failure
        HTTP_GET {
            url {
                path /abc
                digest 134b225d509b9c40647377063d211e75
            }
            connect_timeout 3
            nb_get_retry 3
            delay_before_retry 3
            connect_port 80
        }
    }

At this point the LB configuration is basically done. One more thing is needed: a policy route on the LB. Without it the VIP cannot be pinged, although HTTP access is not affected. The configuration is as follows:

    echo "from 114.11x.9x.0/24 table LVS_CLUSTER" > /etc/sysconfig/network-scripts/rule-bond1
    echo "default table LVS_CLUSTER via 10.10.254.21 dev bond1" > /etc/sysconfig/network-scripts/route-bond1   ## LB1: via 10.10.254.17
    echo "203 LVS_CLUSTER" >> /etc/iproute2/rt_tables
    /etc/init.d/network restart   ## restart networking
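After the network restart it is worth verifying that the rule really landed, since a typo in `rule-bond1` fails silently. The sketch below checks the output of `ip rule show` for the expected entry; `rule_present` is a hypothetical helper name, and it does a plain substring match on the `from <prefix> lookup <table>` form that `ip rule show` prints.

```bash
# Succeed if a policy rule "from <prefix> lookup <table>" is present.
# $1: source prefix, $2: routing table name; reads "ip rule show" output on stdin
rule_present() {
    grep -Fq "from $1 lookup $2"   # -F: fixed string, so dots in the prefix are literal
}
```

On an LB this would be used as `ip rule show | rule_present 114.11x.9x.0/24 LVS_CLUSTER && ip route show table LVS_CLUSTER`, which should end by printing the default route via the OSPF gateway of that LB.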

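IV. Realserver configuration

1. The realserver needs a kernel with the toa module. Without it, the logs of your web service (nginx, for example) will not contain the real client IP; they will record the LB's local_address IP instead.

    CentOS 5 series:
    rpm -ivh kernel-2.6.18-274.18.2.el5.kis_toa.x86_64.rpm
    CentOS 6 series:
    rpm -ivh kernel-toa-2.6.32-220.23.3.el6.kis_toa.x86_64.rpm

2. The realserver only needs to be able to reach the LB's local_address range and be reachable from it. How to achieve that depends on your internal network.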

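V. LB tuning

1. NIC tuning. This is very important: without it, heavy traffic will exhaust a single CPU core and take the LB down. I have experienced this first-hand. For details, see my earlier article on NIC tuning for high concurrency and heavy traffic.

2. Kernel parameter tuning

    # Maximum number of packets allowed to queue when the network device receives packets faster than the kernel can process them
    net.core.netdev_max_backlog = 500000
    # Enable IP forwarding
    net.ipv4.ip_forward = 1
    # Raise the file descriptor limits
    fs.nr_open = 5242880
    fs.file-max = 4194304
    sed -i 's/1024/4194304/g' /etc/security/limits.conf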
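To confirm that the values above are the ones actually in effect (or actually present in `/etc/sysctl.conf`), a small lookup helper is handy. This is only a sketch: `sysctl_value` is a hypothetical name, and it expects `key = value` lines such as those in `sysctl.conf` or printed by `sysctl -a`.

```bash
# Print the value of one sysctl key from "key = value" lines on stdin.
# $1: key name; if the key appears more than once, the last value wins
sysctl_value() {
    awk -F'[ \t]*=[ \t]*' -v k="$1" '
        $1 == k { v = $2 }               # remember the most recent match
        END { if (v != "") print v }
    '
}
```

For example, `sysctl -a 2>/dev/null | sysctl_value net.core.netdev_max_backlog` should print 500000 once the settings are loaded with `sysctl -p`.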

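PS: the cluster currently has one known problem: a realserver cannot access its own VIP. Some services genuinely need to do this, and in the end we came up with the following workarounds:

1. If the realserver has a public IP in the same subnet as the VIP, add a route on it:

    route add -net vip netmask 255.255.255.255 gw 114.11x.9x.1

2. If the realserver goes out through a NAT gateway and the gateway's egress is in the same subnet as the VIP, add an equivalent route on the NAT gateway.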