I. Introduction to k8s add-ons

II. Installing the DNS add-on

III. Installing the dashboard

IV. Installing metrics-server

I. Introduction to k8s add-ons

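1. After the kubernetes-server source package is extracted, <extract-dir>/kubernetes/cluster/addons/ provides installation manifests for various add-ons, including dns, dashboard, prometheus, cluster-monitoring, metrics-server and more.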

2. Example: dashboard

<extract-dir>/kubernetes/cluster/addons/dashboard

[root@master1 dashboard]# ll
total 32
-rw-rw-r-- 1 root root  264 Jun  5 02:15 dashboard-configmap.yaml
-rw-rw-r-- 1 root root 1822 Jun  5 02:15 dashboard-controller.yaml
-rw-rw-r-- 1 root root 1353 Jun  5 02:15 dashboard-rbac.yaml
-rw-rw-r-- 1 root root  551 Jun  5 02:15 dashboard-secret.yaml
-rw-rw-r-- 1 root root  322 Jun  5 02:15 dashboard-service.yaml
-rw-rw-r-- 1 root root  242 Jun  5 02:15 MAINTAINERS.md
-rw-rw-r-- 1 root root  176 Jun  5 02:15 OWNERS
-rw-rw-r-- 1 root root  400 Jun  5 02:15 README.md

The default YAML files basically meet our needs. The images they reference can be pulled from an external network, imported into the local registry, and the manifests then changed to point at the internal registry.
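The image swap described above boils down to a name mapping. Below is a minimal bash sketch, assuming a private registry at 192.168.192.234:888 (the address used later in this post) that the Docker daemon already trusts for pushes; the helper names `to_private_image` and `print_mirror_cmds` are hypothetical. It only prints the `docker pull`/`tag`/`push` commands instead of running them.

```bash
#!/usr/bin/env bash
# Sketch only: map upstream image references onto a private registry.
# Assumption: the registry (e.g. 192.168.192.234:888) already accepts pushes
# from this Docker daemon.

# to_private_image IMAGE REGISTRY
# Keeps only the last path component (name:tag) of IMAGE and prefixes REGISTRY,
# e.g. k8s.gcr.io/coredns:1.3.1 -> 192.168.192.234:888/coredns:1.3.1
to_private_image() {
  local image="$1" registry="$2"
  local name_tag="${image##*/}"    # strip everything up to the last '/'
  printf '%s/%s\n' "$registry" "$name_tag"
}

# print_mirror_cmds IMAGE REGISTRY
# Prints (does not execute) the commands that copy IMAGE into REGISTRY.
print_mirror_cmds() {
  local src="$1" dst
  dst="$(to_private_image "$1" "$2")"
  printf 'docker pull %s\n' "$src"
  printf 'docker tag %s %s\n' "$src" "$dst"
  printf 'docker push %s\n' "$dst"
}
```

For example, `print_mirror_cmds k8s.gcr.io/coredns:1.3.1 192.168.192.234:888` prints the three commands for the CoreDNS image. Keeping only the last path component flattens nested upstream repositories into a single level in the private registry, which matches the image names used in this post.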

II. Installing the DNS add-on

1. Configuration file coredns.yaml

[root@master1 coredns]# cat coredns.yaml
# __MACHINE_GENERATED_WARNING__

apiVersion: v1
kind: ServiceAccount
metadata:
  name: coredns
  namespace: kube-system
  labels:
    kubernetes.io/cluster-service: "true"
    addonmanager.kubernetes.io/mode: Reconcile
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  labels:
    kubernetes.io/bootstrapping: rbac-defaults
    addonmanager.kubernetes.io/mode: Reconcile
  name: system:coredns
rules:
- apiGroups:
  - ""
  resources:
  - endpoints
  - services
  - pods
  - namespaces
  verbs:
  - list
  - watch
- apiGroups:
  - ""
  resources:
  - nodes
  verbs:
  - get
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  annotations:
    rbac.authorization.kubernetes.io/autoupdate: "true"
  labels:
    kubernetes.io/bootstrapping: rbac-defaults
    addonmanager.kubernetes.io/mode: EnsureExists
  name: system:coredns
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: system:coredns
subjects:
- kind: ServiceAccount
  name: coredns
  namespace: kube-system
---
apiVersion: v1
kind: ConfigMap
metadata:
  name: coredns
  namespace: kube-system
  labels:
    addonmanager.kubernetes.io/mode: EnsureExists
data:
  Corefile: |
    .:53 {
        errors
        health
        kubernetes cluster.local in-addr.arpa ip6.arpa {
            pods insecure
            upstream
            fallthrough in-addr.arpa ip6.arpa
        }
        prometheus :9153
        forward . /etc/resolv.conf
        cache 30
        loop
        reload
        loadbalance
    }
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: coredns
  namespace: kube-system
  labels:
    k8s-app: kube-dns
    kubernetes.io/cluster-service: "true"
    addonmanager.kubernetes.io/mode: Reconcile
    kubernetes.io/name: "CoreDNS"
spec:
  # replicas: not specified here:
  # 1. In order to make Addon Manager do not reconcile this replicas parameter.
  # 2. Default is 1.
  # 3. Will be tuned in real time if DNS horizontal auto-scaling is turned on.
  strategy:
    type: RollingUpdate
    rollingUpdate:
      maxUnavailable: 1
  selector:
    matchLabels:
      k8s-app: kube-dns
  template:
    metadata:
      labels:
        k8s-app: kube-dns
      annotations:
        seccomp.security.alpha.kubernetes.io/pod: 'docker/default'
    spec:
      priorityClassName: system-cluster-critical
      serviceAccountName: coredns
      tolerations:
      - key: "CriticalAddonsOnly"
        operator: "Exists"
      nodeSelector:
        beta.kubernetes.io/os: linux
      containers:
      - name: coredns
        image: k8s.gcr.io/coredns:1.3.1
        imagePullPolicy: IfNotPresent
        resources:
          limits:
            memory: 170Mi
          requests:
            cpu: 100m
            memory: 70Mi
        args: [ "-conf", "/etc/coredns/Corefile" ]
        volumeMounts:
        - name: config-volume
          mountPath: /etc/coredns
          readOnly: true
        ports:
        - containerPort: 53
          name: dns
          protocol: UDP
        - containerPort: 53
          name: dns-tcp
          protocol: TCP
        - containerPort: 9153
          name: metrics
          protocol: TCP
        livenessProbe:
          httpGet:
            path: /health
            port: 8080
            scheme: HTTP
          initialDelaySeconds: 60
          timeoutSeconds: 5
          successThreshold: 1
          failureThreshold: 5
        readinessProbe:
          httpGet:
            path: /health
            port: 8080
            scheme: HTTP
        securityContext:
          allowPrivilegeEscalation: false
          capabilities:
            add:
            - NET_BIND_SERVICE
            drop:
            - all
          readOnlyRootFilesystem: true
      dnsPolicy: Default
      volumes:
      - name: config-volume
        configMap:
          name: coredns
          items:
          - key: Corefile
            path: Corefile
---
apiVersion: v1
kind: Service
metadata:
  name: kube-dns
  namespace: kube-system
  annotations:
    prometheus.io/port: "9153"
    prometheus.io/scrape: "true"
  labels:
    k8s-app: kube-dns
    kubernetes.io/cluster-service: "true"
    addonmanager.kubernetes.io/mode: Reconcile
    kubernetes.io/name: "CoreDNS"
spec:
  selector:
    k8s-app: kube-dns
  clusterIP: 10.244.0.2
  ports:
  - name: dns
    port: 53
    protocol: UDP
  - name: dns-tcp
    port: 53
    protocol: TCP
  - name: metrics
    port: 9153
    protocol: TCP

[root@master1 coredns]# sed -i -e s/__PILLAR__DNS__DOMAIN__/cluster.local/ -e s/__PILLAR__DNS__SERVER__/10.244.0.2/ coredns.yaml
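To guard against a template placeholder slipping through unsubstituted, the substitution can be wrapped with a check. This is a hypothetical bash helper (`render_coredns` is not part of the addons directory); it applies the same two replacements as the sed command above, with `/g` added, and fails if any `__PILLAR__` token remains.

```bash
#!/usr/bin/env bash
# Hypothetical helper, not part of cluster/addons: render the CoreDNS manifest
# from stdin and refuse to emit it if any __PILLAR__ placeholder survives.

# render_coredns DOMAIN DNS_SERVICE_IP < template > manifest
render_coredns() {
  local domain="$1" dns_ip="$2" rendered
  # Same substitutions as the sed command above; /g also covers repeated tokens.
  rendered="$(sed -e "s/__PILLAR__DNS__DOMAIN__/${domain}/g" \
                  -e "s/__PILLAR__DNS__SERVER__/${dns_ip}/g")"
  if grep -q '__PILLAR__' <<<"$rendered"; then
    echo "unsubstituted placeholders:" >&2
    grep -o '__PILLAR__[A-Z_]*__' <<<"$rendered" | sort -u >&2
    return 1
  fi
  printf '%s\n' "$rendered"
}
```

Usage would look like `render_coredns cluster.local 10.244.0.2 < template.yaml > coredns.yaml`, where template.yaml stands for an unrendered copy of the manifest. Writing to a new file instead of editing in place keeps the template reusable.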

[root@master1 coredns]# sed -i 's@k8s.gcr.io/coredns:1.3.1@192.168.192.234:888/coredns:1.3.1@' coredns.yaml

2. Installation and explanation

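[root@master1 coredns]# kubectl apply -f coredns.yaml
serviceaccount/coredns created
clusterrole.rbac.authorization.k8s.io/system:coredns created
clusterrolebinding.rbac.authorization.k8s.io/system:coredns created
configmap/coredns created
deployment.apps/coredns created
service/kube-dns created

Explanation:

ClusterRole: [system:coredns] grants the permissions CoreDNS needs (list/watch endpoints, services, pods and namespaces; get nodes).

ServiceAccount: creates a service account named [coredns] for the pod to use; Kubernetes creates a token for this service account.

ConfigMap: [coredns] holds the DNS configuration file (Corefile).

Deployment: deploys the coredns container, mounts ConfigMap [coredns], and runs under the service account coredns.

Service: [kube-dns] is associated with the Deployment's pods through the k8s-app: kube-dns selector.

ClusterRoleBinding[system:coredns] = ClusterRole[system:coredns] + ServiceAccount[coredns]: the binding gives the pod the permissions of that ClusterRole.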
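A ServiceAccount is bound by its API username, `system:serviceaccount:<namespace>:<name>`, so the binding above can be checked with `kubectl auth can-i --as=...`. The bash sketch below only prints those checks (run them on a master with admin credentials); the helper names are hypothetical and the verb/resource list is copied from the system:coredns ClusterRole in coredns.yaml.

```bash
#!/usr/bin/env bash
# Sketch: print "kubectl auth can-i" checks for the coredns ServiceAccount.
# The verbs/resources mirror the system:coredns ClusterRole shown above.

# sa_user NAMESPACE NAME -> API username of a ServiceAccount
sa_user() {
  printf 'system:serviceaccount:%s:%s\n' "$1" "$2"
}

# print_coredns_rbac_checks -> one command per permission; each should
# answer "yes" once the ClusterRoleBinding is in place.
print_coredns_rbac_checks() {
  local who verb resource
  who="$(sa_user kube-system coredns)"
  for verb in list watch; do
    for resource in endpoints services pods namespaces; do
      printf 'kubectl auth can-i %s %s --as=%s\n' "$verb" "$resource" "$who"
    done
  done
  printf 'kubectl auth can-i get nodes --as=%s\n' "$who"
}
```

Piping the output into `sh` on the master runs all nine checks; a "no" points at a missing or misnamed ClusterRoleBinding subject.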

3. Verification

[root@master1 coredns]# kubectl get all -n kube-system
NAME                           READY   STATUS    RESTARTS   AGE
pod/coredns-5497cfc9bb-jbvn2   1/1     Running   0          18s

NAME               TYPE        CLUSTER-IP   EXTERNAL-IP   PORT(S)                  AGE
service/kube-dns   ClusterIP   10.244.0.2   <none>        53/UDP,53/TCP,9153/TCP   18s

NAME                      READY   UP-TO-DATE   AVAILABLE   AGE
deployment.apps/coredns   1/1     1            1           18s

NAME                                 DESIRED   CURRENT   READY   AGE
replicaset.apps/coredns-5497cfc9bb   1         1         1       18s

Redeploy an application and check its DNS settings:

[root@master1 yaml]# kubectl get pods
NAME                    READY   STATUS    RESTARTS   AGE
base-7d77d4cc9d-9sbdn   1/1     Running   0          118s
base-7d77d4cc9d-9vx6n   1/1     Running   0          118s
base-7d77d4cc9d-ctwjt   1/1     Running   0          118s
base-7d77d4cc9d-kmfbf   1/1     Running   0          118s
base-7d77d4cc9d-wfbhh   1/1     Running   0          118s

[root@master1 yaml]# kubectl exec -it base-7d77d4cc9d-9sbdn -- /bin/bash
[root@base-7d77d4cc9d-9sbdn /home/admin]
# cat /etc/resolv.conf
nameserver 10.244.0.2
search default.svc.cluster.local svc.cluster.local cluster.local
options ndots:5

Test domain name format: $service_name.$namespace.svc.cluster.local. The ping commands below are only a quick check that the names resolve; depending on the kube-proxy mode, a ClusterIP may not answer ICMP, so the resolved address that ping prints matters more than packet loss.
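With `options ndots:5`, a name containing fewer than five dots is first tried with each `search` suffix and only then as-is. The bash sketch below prints that order, assuming glibc-style resolver behaviour (musl and other resolvers can differ); `svc_fqdn` and `resolver_candidates` are hypothetical helpers.

```bash
#!/usr/bin/env bash
# Sketch of glibc-style search-list expansion (resolv.conf "search" + "ndots").
# Other resolvers (e.g. musl) may behave differently.

# svc_fqdn SERVICE NAMESPACE [DOMAIN] -> SERVICE.NAMESPACE.svc.DOMAIN
svc_fqdn() {
  printf '%s.%s.svc.%s\n' "$1" "$2" "${3:-cluster.local}"
}

# resolver_candidates NAME NDOTS SEARCH_DOMAIN...
# Prints the names the resolver would query for NAME, in order.
resolver_candidates() {
  local name="$1" ndots="$2" domain dots
  shift 2
  # A trailing dot marks an absolute name: the search list is not used.
  if [[ "$name" == *. ]]; then
    printf '%s\n' "$name"
    return 0
  fi
  dots="${name//[^.]/}"            # keep only the dots so we can count them
  if (( ${#dots} >= ndots )); then
    printf '%s\n' "$name"          # enough dots: try the name as-is first
    for domain in "$@"; do printf '%s.%s\n' "$name" "$domain"; done
  else
    for domain in "$@"; do printf '%s.%s\n' "$name" "$domain"; done
    printf '%s\n' "$name"          # too few dots: as-is only as a last resort
  fi
}
```

For example, `resolver_candidates kube-dns.kube-system 5 default.svc.cluster.local svc.cluster.local cluster.local` would be answered by the second candidate, `kube-dns.kube-system.svc.cluster.local`. The full name `kube-dns.kube-system.svc.cluster.local` has only four dots, so it is tried as-is last; writing it with a trailing dot skips the search list entirely.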

[root@base-7d77d4cc9d-9sbdn /home/admin] ping kube-dns.kube-system.svc.cluster.local
[root@base-7d77d4cc9d-9sbdn /home/admin] ping kubernetes.default.svc.cluster.local

III. Installing the dashboard

1. Directory after extracting the source

<extract-dir>/kubernetes/cluster/addons/dashboard

[root@master1 dashboard]# ll
-rw-rw-r-- 1 root root  264 Jun  5 02:15 dashboard-configmap.yaml
-rw-rw-r-- 1 root root 1831 Jul 29 23:58 dashboard-controller.yaml
-rw-rw-r-- 1 root root 1353 Jun  5 02:15 dashboard-rbac.yaml
-rw-rw-r-- 1 root root  551 Jun  5 02:15 dashboard-secret.yaml
-rw-rw-r-- 1 root root  322 Jun  5 02:15 dashboard-service.yaml
-rw-rw-r-- 1 root root  242 Jun  5 02:15 MAINTAINERS.md
-rw-rw-r-- 1 root root  176 Jun  5 02:15 OWNERS
-rw-rw-r-- 1 root root  400 Jun  5 02:15 README.md

Point the dashboard image at the private registry:

[root@master1 dashboard]# sed -i 's@k8s.gcr.io/kubernetes-dashboard-amd64:v1.10.1@192.168.192.234:888/kubernetes-dashboard-amd64:v1.10.1@' ./dashboard-controller.yaml

1.1 dashboard-service.yaml

[root@master1 dashboard]# cat dashboard-service.yaml
apiVersion: v1
kind: Service
metadata:
  name: kubernetes-dashboard
  namespace: kube-system
  labels:
    k8s-app: kubernetes-dashboard
    kubernetes.io/cluster-service: "true"
    addonmanager.kubernetes.io/mode: Reconcile
spec:
  selector:
    k8s-app: kubernetes-dashboard
  ports:
  - port: 443
    targetPort: 8443
  type: NodePort

Note: the Service type was changed to NodePort.

1.2 dashboard-configmap.yaml

[root@master1 dashboard]# cat dashboard-configmap.yaml
apiVersion: v1
kind: ConfigMap
metadata:
  labels:
    k8s-app: kubernetes-dashboard
    # Allows editing resource and makes sure it is created first.
    addonmanager.kubernetes.io/mode: EnsureExists
  name: kubernetes-dashboard-settings
  namespace: kube-system

1.3 dashboard-controller.yaml

[root@master1 dashboard]# cat dashboard-controller.yaml
apiVersion: v1
kind: ServiceAccount
metadata:
  labels:
    k8s-app: kubernetes-dashboard
    addonmanager.kubernetes.io/mode: Reconcile
  name: kubernetes-dashboard
  namespace: kube-system
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: kubernetes-dashboard
  namespace: kube-system
  labels:
    k8s-app: kubernetes-dashboard
    kubernetes.io/cluster-service: "true"
    addonmanager.kubernetes.io/mode: Reconcile
spec:
  selector:
    matchLabels:
      k8s-app: kubernetes-dashboard
  template:
    metadata:
      labels:
        k8s-app: kubernetes-dashboard
      annotations:
        scheduler.alpha.kubernetes.io/critical-pod: ''
        seccomp.security.alpha.kubernetes.io/pod: 'docker/default'
    spec:
      priorityClassName: system-cluster-critical
      containers:
      - name: kubernetes-dashboard
        image: 192.168.192.234:888/kubernetes-dashboard-amd64:v1.10.1
        resources:
          limits:
            cpu: 100m
            memory: 300Mi
          requests:
            cpu: 50m
            memory: 100Mi
        ports:
        - containerPort: 8443
          protocol: TCP
        args:
          # PLATFORM-SPECIFIC ARGS HERE
          - --auto-generate-certificates
        volumeMounts:
        - name: kubernetes-dashboard-certs
          mountPath: /certs
        - name: tmp-volume
          mountPath: /tmp
        livenessProbe:
          httpGet:
            scheme: HTTPS
            path: /
            port: 8443
          initialDelaySeconds: 30
          timeoutSeconds: 30
      volumes:
      - name: kubernetes-dashboard-certs
        secret:
          secretName: kubernetes-dashboard-certs
      - name: tmp-volume
        emptyDir: {}
      serviceAccountName: kubernetes-dashboard
      tolerations:
      - key: "CriticalAddonsOnly"
        operator: "Exists"

1.4 dashboard-rbac.yaml

[root@master1 dashboard]# cat dashboard-rbac.yaml
kind: Role
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  labels:
    k8s-app: kubernetes-dashboard
    addonmanager.kubernetes.io/mode: Reconcile
  name: kubernetes-dashboard-minimal
  namespace: kube-system
rules:
  # Allow Dashboard to get, update and delete Dashboard exclusive secrets.
- apiGroups: [""]
  resources: ["secrets"]
  resourceNames: ["kubernetes-dashboard-key-holder", "kubernetes-dashboard-certs"]
  verbs: ["get", "update", "delete"]
  # Allow Dashboard to get and update 'kubernetes-dashboard-settings' config map.
- apiGroups: [""]
  resources: ["configmaps"]
  resourceNames: ["kubernetes-dashboard-settings"]
  verbs: ["get", "update"]
  # Allow Dashboard to get metrics from heapster.
- apiGroups: [""]
  resources: ["services"]
  resourceNames: ["heapster"]
  verbs: ["proxy"]
- apiGroups: [""]
  resources: ["services/proxy"]
  resourceNames: ["heapster", "http:heapster:", "https:heapster:"]
  verbs: ["get"]
---
apiVersion: rbac.authorization.k8s.io/v1
kind: RoleBinding
metadata:
  name: kubernetes-dashboard-minimal
  namespace: kube-system
  labels:
    k8s-app: kubernetes-dashboard
    addonmanager.kubernetes.io/mode: Reconcile
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: Role
  name: kubernetes-dashboard-minimal
subjects:
- kind: ServiceAccount
  name: kubernetes-dashboard
  namespace: kube-system

1.5 dashboard-secret.yaml

[root@master1 dashboard]# cat dashboard-secret.yaml
apiVersion: v1
kind: Secret
metadata:
  labels:
    k8s-app: kubernetes-dashboard
    # Allows editing resource and makes sure it is created first.
    addonmanager.kubernetes.io/mode: EnsureExists
  name: kubernetes-dashboard-certs
  namespace: kube-system
type: Opaque
---
apiVersion: v1
kind: Secret
metadata:
  labels:
    k8s-app: kubernetes-dashboard
    # Allows editing resource and makes sure it is created first.
    addonmanager.kubernetes.io/mode: EnsureExists
  name: kubernetes-dashboard-key-holder
  namespace: kube-system
type: Opaque

1.6 Notes

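ConfigMap[kubernetes-dashboard-settings]: empty.

Secret[kubernetes-dashboard-key-holder] and Secret[kubernetes-dashboard-certs]: two secrets for the dashboard, created without data.

RoleBinding[kubernetes-dashboard-minimal] = Role[kubernetes-dashboard-minimal] + ServiceAccount[kubernetes-dashboard]

Deployment[kubernetes-dashboard]: --auto-generate-certificates makes the dashboard generate its certificates automatically; the pod mounts Secret[kubernetes-dashboard-certs] and runs as ServiceAccount kubernetes-dashboard.

Service[kubernetes-dashboard]: routes traffic to the backend pods.

2. Installation and explanation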

[root@master1 dashboard]# kubectl apply -f ./
configmap/kubernetes-dashboard-settings created
serviceaccount/kubernetes-dashboard created
deployment.apps/kubernetes-dashboard created
role.rbac.authorization.k8s.io/kubernetes-dashboard-minimal created
rolebinding.rbac.authorization.k8s.io/kubernetes-dashboard-minimal created
secret/kubernetes-dashboard-certs created
secret/kubernetes-dashboard-key-holder created
service/kubernetes-dashboard created

3. Verification

3.1 Check the assigned nodePort

[root@master1 dashboard]# kubectl get svc kubernetes-dashboard -n kube-system
NAME                   TYPE       CLUSTER-IP      EXTERNAL-IP   PORT(S)         AGE
kubernetes-dashboard   NodePort   10.244.245.24   <none>        443:32230/TCP   4m42s

Because this is an internal network, a layer-4 proxy is set up that forwards to port 32230 on the cluster's physical machines.
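On a live cluster the nodePort can be read directly with `kubectl get svc kubernetes-dashboard -n kube-system -o jsonpath='{.spec.ports[0].nodePort}'`. When only the table output is at hand, a small bash sketch like the following (hypothetical helpers `node_port_of` and `dashboard_url`) extracts it from the PORT(S) column and builds the HTTPS URL to open through the proxy.

```bash
#!/usr/bin/env bash
# Sketch: pull the nodePort out of a PORT(S) column like "443:32230/TCP".
# Only the first port mapping of the Service is considered.

# node_port_of PORTS -> nodePort, or status 1 if there is none (e.g. ClusterIP)
node_port_of() {
  local first="${1%%,*}"        # "443:32230/TCP,80:31000/TCP" -> "443:32230/TCP"
  local mapping="${first%%/*}"  # drop the protocol          -> "443:32230"
  [[ "$mapping" == *:* ]] || return 1
  printf '%s\n' "${mapping#*:}"
}

# dashboard_url HOST PORTS -> https://HOST:NODEPORT (the dashboard serves HTTPS)
dashboard_url() {
  local port
  port="$(node_port_of "$2")" || return 1
  printf 'https://%s:%s\n' "$1" "$port"
}
```

For example, `dashboard_url 10.10.110.103 443:32230/TCP` yields the proxy URL used in this post.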

[root@master1 ~]# kubectl cluster-info

Kubernetes master is running at https://127.0.0.1:8443

CoreDNS is running at https://127.0.0.1:8443/api/v1/namespaces/kube-system/services/kube-dns:dns/proxy

kubernetes-dashboard is running at https://127.0.0.1:8443/api/v1/namespaces/kube-system/services/https:kubernetes-dashboard:/proxy查看dashboard命令參數

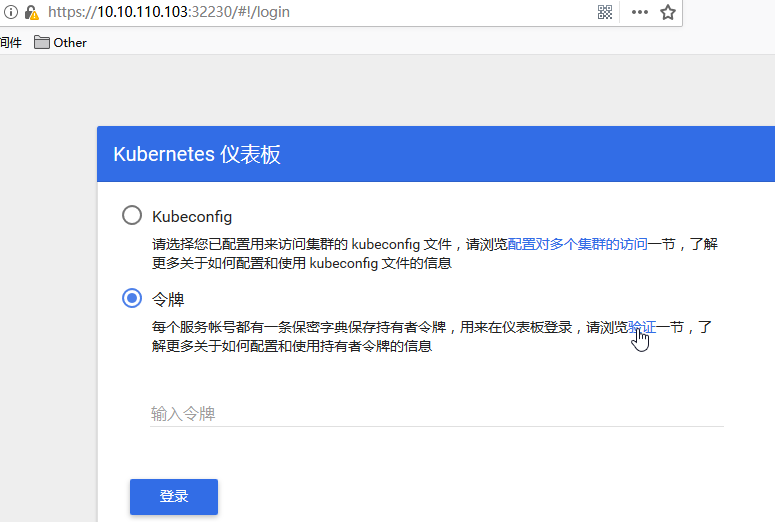

[root@master1 ~]# kubectl exec -it kubernetes-dashboard-fcdb97778-w2gxn -n kube-system -- /dashboard --help3.2 token登陸訪問

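Access the dashboard at https://10.10.110.103:32230 (10.10.110.103 is the proxy address).

By default the dashboard does not accept client certificates for login, so create a token instead. Note that the service account below is bound to cluster-admin, which grants full control over the cluster: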

[root@master1 ~]# kubectl create sa dashboard-admin -n kube-system
serviceaccount/dashboard-admin created
[root@master1 ~]# kubectl create clusterrolebinding dashboard-admin --clusterrole=cluster-admin --serviceaccount=kube-system:dashboard-admin
clusterrolebinding.rbac.authorization.k8s.io/dashboard-admin created
[root@master1 ~]# ADMIN_SECRET=$(kubectl get secrets -n kube-system | grep dashboard-admin | awk '{print $1}')
[root@master1 ~]# DASHBOARD_LOGIN_TOKEN=$(kubectl describe secret -n kube-system ${ADMIN_SECRET} | grep -E '^token' | awk '{print $2}')
[root@master1 ~]# echo ${DASHBOARD_LOGIN_TOKEN}

Log in with this token.
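The token is a JWT, so its middle segment can be decoded to confirm which service account it belongs to; for the secret-based service account tokens used here, the `sub` claim should read `system:serviceaccount:kube-system:dashboard-admin`. Below is a minimal bash sketch, assuming GNU `base64`; `jwt_payload` is a hypothetical helper and it does not verify the signature. As an alternative to the grep/awk parsing above, `kubectl -n kube-system get secret "$ADMIN_SECRET" -o jsonpath='{.data.token}' | base64 -d` reads the token field directly.

```bash
#!/usr/bin/env bash
# Sketch: decode (NOT verify) the payload of a ServiceAccount JWT.
# Assumes GNU coreutils base64.

# jwt_payload TOKEN -> JSON payload of the token's middle segment
jwt_payload() {
  local seg
  seg="$(cut -d. -f2 <<<"$1" | tr '_-' '/+')"    # base64url -> base64 alphabet
  while (( ${#seg} % 4 )); do seg="${seg}="; done  # restore stripped padding
  base64 -d <<<"$seg"
}
```

Usage: `jwt_payload "$DASHBOARD_LOGIN_TOKEN" | jq .`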

3.3 Create a kubeconfig that uses the token
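The four `kubectl config` commands that follow write a kubeconfig roughly like the one produced by the bash sketch below, which may help when kubectl is not available on the machine generating the file. `make_token_kubeconfig` is a hypothetical helper; it assumes GNU `base64` (`-w0`), and storing the CA as `certificate-authority-data` corresponds to `--embed-certs=true`.

```bash
#!/usr/bin/env bash
# Sketch: emit a token-based kubeconfig with the same names as the
# "kubectl config set-cluster/set-credentials/set-context/use-context" steps.
# Assumes GNU base64 (-w0 disables line wrapping).

# make_token_kubeconfig SERVER CA_FILE TOKEN > dashboard.kubeconfig
make_token_kubeconfig() {
  local server="$1" ca_file="$2" token="$3" ca_data
  ca_data="$(base64 -w0 < "$ca_file")" || return 1   # what --embed-certs=true stores
  cat <<EOF
apiVersion: v1
kind: Config
clusters:
- name: kubernetes
  cluster:
    server: ${server}
    certificate-authority-data: ${ca_data}
users:
- name: dashboard_user
  user:
    token: ${token}
contexts:
- name: default
  context:
    cluster: kubernetes
    user: dashboard_user
current-context: default
EOF
}
```

Usage: `make_token_kubeconfig https://127.0.0.1:8443 /etc/kubernetes/cert/ca.pem "$DASHBOARD_LOGIN_TOKEN" > dashboard.kubeconfig`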

[root@master1 ~]# kubectl config set-cluster kubernetes --certificate-authority=/etc/kubernetes/cert/ca.pem --embed-certs=true --server=https://127.0.0.1:8443 --kubeconfig=dashboard.kubeconfig
[root@master1 ~]# kubectl config set-credentials dashboard_user --token=${DASHBOARD_LOGIN_TOKEN} --kubeconfig=dashboard.kubeconfig
[root@master1 ~]# kubectl config set-context default --cluster=kubernetes --user=dashboard_user --kubeconfig=dashboard.kubeconfig
[root@master1 ~]# kubectl config use-context default --kubeconfig=dashboard.kubeconfig

IV. Installing metrics-server

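Collection: cAdvisor, Heapster, collectd, Statsd, Tcollector, Scout

Storage: InfluxDB, OpenTSDB, Elasticsearch

Visualization: Graphite, Grafana, facette, Cacti, Ganglia, DataDog

Alerting: Nagios, Prometheus, Icinga, Zabbix

Heapster: collects metrics and event data from the k8s cluster and writes them to InfluxDB. The data Heapster collects is more comprehensive than what cAdvisor alone provides, while the amount stored in InfluxDB is smaller. Heapster is an aggregator: it gathers the cAdvisor data from every node and exports it to InfluxDB, so it relies on cAdvisor collecting host and container resource usage on each node.

InfluxDB: a time-series database that stores the data under a configured directory.

cAdvisor: writes its data to InfluxDB.

Grafana: provides a web console for custom metric queries; it queries data from InfluxDB and displays it.

Prometheus: supports monitoring containers, nodes, and the k8s cluster itself.

Note: since v1.12, Heapster has been superseded by metrics-server.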

1. Modify the configuration files

Download the configuration files:

https://raw.githubusercontent.com/kubernetes-incubator/metrics-server/master/deploy/1.8%2B/aggregated-metrics-reader.yaml

https://raw.githubusercontent.com/kubernetes-incubator/metrics-server/master/deploy/1.8%2B/auth-delegator.yaml

https://raw.githubusercontent.com/kubernetes-incubator/metrics-server/master/deploy/1.8%2B/auth-reader.yaml

https://raw.githubusercontent.com/kubernetes-incubator/metrics-server/master/deploy/1.8%2B/metrics-apiservice.yaml

https://raw.githubusercontent.com/kubernetes-incubator/metrics-server/master/deploy/1.8%2B/metrics-server-deployment.yaml

https://raw.githubusercontent.com/kubernetes-incubator/metrics-server/master/deploy/1.8%2B/metrics-server-service.yaml

https://raw.githubusercontent.com/kubernetes-incubator/metrics-server/master/deploy/1.8%2B/resource-reader.yaml

Or clone the repository:

# git clone https://github.com/kubernetes-incubator/metrics-server.git

[root@master1 metric]# cd metrics-server/deploy/1.8+/

1.1 aggregated-metrics-reader.yaml

[root@master1 1.8+]# cat aggregated-metrics-reader.yaml
kind: ClusterRole
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: system:aggregated-metrics-reader
  labels:
    rbac.authorization.k8s.io/aggregate-to-view: "true"
    rbac.authorization.k8s.io/aggregate-to-edit: "true"
    rbac.authorization.k8s.io/aggregate-to-admin: "true"
rules:
- apiGroups: ["metrics.k8s.io"]
  resources: ["pods"]
  verbs: ["get", "list", "watch"]

1.2 auth-delegator.yaml

[root@master1 1.8+]# cat auth-delegator.yaml
---
apiVersion: rbac.authorization.k8s.io/v1beta1
kind: ClusterRoleBinding
metadata:
  name: metrics-server:system:auth-delegator
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: system:auth-delegator
subjects:
- kind: ServiceAccount
  name: metrics-server
  namespace: kube-system

1.3 auth-reader.yaml

[root@master1 1.8+]# cat auth-reader.yaml
---
apiVersion: rbac.authorization.k8s.io/v1beta1
kind: RoleBinding
metadata:
  name: metrics-server-auth-reader
  namespace: kube-system
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: Role
  name: extension-apiserver-authentication-reader
subjects:
- kind: ServiceAccount
  name: metrics-server
  namespace: kube-system

1.4 metrics-apiservice.yaml

[root@master1 1.8+]# cat metrics-apiservice.yaml
---
apiVersion: apiregistration.k8s.io/v1beta1
kind: APIService
metadata:
  name: v1beta1.metrics.k8s.io
spec:
  service:
    name: metrics-server
    namespace: kube-system
  group: metrics.k8s.io
  version: v1beta1
  insecureSkipTLSVerify: true
  groupPriorityMinimum: 100
  versionPriority: 100

1.5 metrics-server-deployment.yaml

[root@master1 1.8+]# cat metrics-server-deployment.yaml
---
apiVersion: v1
kind: ServiceAccount
metadata:
  name: metrics-server
  namespace: kube-system
---
apiVersion: extensions/v1beta1
kind: Deployment
metadata:
  name: metrics-server
  namespace: kube-system
  labels:
    k8s-app: metrics-server
spec:
  selector:
    matchLabels:
      k8s-app: metrics-server
  template:
    metadata:
      name: metrics-server
      labels:
        k8s-app: metrics-server
    spec:
      serviceAccountName: metrics-server
      volumes:
      # mount in tmp so we can safely use from-scratch images and/or read-only containers
      - name: tmp-dir
        emptyDir: {}
      containers:
      - name: metrics-server
        image: 192.168.192.234:888/metrics-server-amd64:v0.3.1
        args:
        - --metric-resolution=30s
        #- --kubelet-port=10250
        #- --deprecated-kubelet-completely-insecure=true
        - --kubelet-preferred-address-types=InternalIP,Hostname,InternalDNS,ExternalDNS,ExternalIP
        imagePullPolicy: Always
        volumeMounts:
        - name: tmp-dir
          mountPath: /tmp

1.6 metrics-server-service.yaml

[root@master1 1.8+]# cat metrics-server-service.yaml
apiVersion: v1
kind: Service
metadata:
  name: metrics-server
  namespace: kube-system
  labels:
    kubernetes.io/name: "Metrics-server"
    kubernetes.io/cluster-service: "true"
spec:
  selector:
    k8s-app: metrics-server
  ports:
  - port: 443
    protocol: TCP
    targetPort: 443
    nodePort: 30968
  type: NodePort

1.7 resource-reader.yaml

[root@master1 1.8+]# cat resource-reader.yaml
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  name: system:metrics-server
rules:
- apiGroups:
  - ""
  resources:
  - pods
  - nodes
  - nodes/stats
  verbs:
  - get
  - list
  - watch
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: system:metrics-server
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: system:metrics-server
subjects:
- kind: ServiceAccount
  name: metrics-server
  namespace: kube-system

2. Installation

[root@master1 metrics-server]# kubectl apply -f .

3. Verification and testing

3.1 Check the running status

[root@master1 metrics-server]# kubectl get pods -n kube-system -l k8s-app=metrics-server
NAME                                     READY   STATUS    RESTARTS   AGE
metrics-server-v0.3.1-648c64499d-94x7l   1/1     Running   0          2m11s

[root@master1 metrics-server]# kubectl get svc -n kube-system -l kubernetes.io/name=Metrics-server
NAME             TYPE        CLUSTER-IP     EXTERNAL-IP   PORT(S)   AGE
metrics-server   ClusterIP   10.244.9.135   <none>        443/TCP   3m25s

3.2 View the metrics data

[root@master1 yaml]# curl -sSL --cacert /etc/kubernetes/cert/ca.pem --cert /opt/k8s/work/cert/admin.pem --key /opt/k8s/work/cert/admin-key.pem https://127.0.0.1:8443/apis/metrics.k8s.io/v1beta1/nodes/ | jq .

[root@master1 yaml]# curl -sSL --cacert /etc/kubernetes/cert/ca.pem --cert /opt/k8s/work/cert/admin.pem --key /opt/k8s/work/cert/admin-key.pem https://127.0.0.1:8443/apis/metrics.k8s.io/v1beta1/pods/ | jq .

[root@master1 ~]# kubectl get --raw "/apis/metrics.k8s.io/v1beta1" | jq .

[root@master1 ~]# kubectl get --raw "/apis/metrics.k8s.io/v1beta1/nodes" | jq .
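The raw metrics API typically reports usage as Kubernetes quantities, e.g. CPU in nanocores (`n` suffix) and memory in `Ki`, while `kubectl top` shows millicores and `Mi`. The bash sketch below converts between them; the helpers are hypothetical and handle only the suffixes listed in their comments (integer values, binary memory suffixes). The percentages in `kubectl top nodes` are usage relative to the node's allocatable resources; `percent` mimics that with integer division, which may round differently from kubectl.

```bash
#!/usr/bin/env bash
# Sketch: convert metrics-API quantities to the units kubectl top prints.
# Integer values only; CPU suffixes n/u/m or none, memory Ki/Mi/Gi or plain bytes.

# cpu_to_millicores QUANTITY -> millicores, rounded down ("110265413n" -> 110)
cpu_to_millicores() {
  local q="$1"
  case "$q" in
    *n) echo $(( ${q%n} / 1000000 )) ;;   # nanocores
    *u) echo $(( ${q%u} / 1000 )) ;;      # microcores
    *m) echo "${q%m}" ;;                  # already millicores
    *)  echo $(( q * 1000 )) ;;           # whole cores
  esac
}

# mem_to_mib QUANTITY -> MiB, rounded down ("852124Ki" -> 832)
mem_to_mib() {
  local q="$1"
  case "$q" in
    *Ki) echo $(( ${q%Ki} / 1024 )) ;;
    *Mi) echo "${q%Mi}" ;;
    *Gi) echo $(( ${q%Gi} * 1024 )) ;;
    *)   echo $(( q / 1048576 )) ;;       # plain bytes
  esac
}

# percent USED TOTAL -> integer percentage, rounded down
percent() {
  echo $(( $1 * 100 / $2 ))
}
```

For example, a node reporting `"memory": "852124Ki"` would show up as 832Mi in `kubectl top nodes`.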

[root@master1 ~]# kubectl top nodes
NAME      CPU(cores)   CPU%   MEMORY(bytes)   MEMORY%
master1   110m         2%     832Mi           10%
master2   104m         2%     4406Mi          57%
master3   111m         2%     4297Mi          55%