I prepared three virtual machines: master (192.168.33.52), node1 (192.168.33.35) and node2 (192.168.33.50).

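There are two ways to deploy a Kubernetes cluster:

Option 1: install every component by hand. This is harder and involves a lot of configuration (not recommended).

Option 2: use kubeadm to simplify the deployment.

Installation steps with kubeadm:

1. On the master and all nodes: install kubelet, kubeadm, docker and kubectl (the command-line client).

2. On the master (control-plane node): kubeadm init

3. On the nodes (worker nodes): kubeadm join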

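Now for the installation. Before you start, make sure the iptables and firewalld services are stopped, because Kubernetes relies heavily on iptables and manages its own rules.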

Step 1: Installation

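The packages come from the Alibaba Cloud (Aliyun) mirror.

On each CentOS machine, go to /etc/yum.repos.d (cd /etc/yum.repos.d), create a file with vim Kubernetes.repo and add the following content: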

[kubernetes]
name=Kubernetes Repo
baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64/
enabled=1
gpgcheck=1
gpgkey=https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg

Finally, run yum repolist to check that the repository can be used.

If everything looks fine, copy the file to the same path on the other node machines.
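The repository file above can also be written non-interactively. This is a minimal sketch, assuming bash and the repo id `[kubernetes]` (my choice); both key URLs are the ones used in this article. Pass a different path as the first argument to try it without root.

```shell
#!/usr/bin/env bash
# Sketch: write the Aliyun Kubernetes yum repo definition.
# Assumption: default path and repo id are illustrative; $1 overrides the path.
write_k8s_repo() {
  local target="${1:-/etc/yum.repos.d/kubernetes.repo}"
  cat > "$target" <<'EOF'
[kubernetes]
name=Kubernetes Repo
baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64/
enabled=1
gpgcheck=1
gpgkey=https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg https://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg
EOF
}
```

On the master you would run `write_k8s_repo && yum repolist`, then copy the file to the nodes, for example `scp /etc/yum.repos.d/kubernetes.repo root@192.168.33.35:/etc/yum.repos.d/` (and the same for 192.168.33.50).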

Installation on the master:

Install the packages with yum install kubeadm kubectl kubelet docker. The installation may fail while verifying the repository's GPG key.

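If that happens, temporarily set gpgcheck=0 in Kubernetes.repo so that yum stops checking, and download these two key files directly with wget:

https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg

https://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg

Import them manually with rpm --import yum-key.gpg and rpm --import rpm-package-key.gpg, then run the install command above again.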

All the required software is now installed (on the master).

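Next, when I started the cluster, the kubelet failed because swap is enabled on these VMs. To let it run with swap on, add this parameter to the kubelet configuration (on CentOS this is /etc/sysconfig/kubelet):

KUBELET_EXTRA_ARGS="--fail-swap-on=false"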

Before starting, enable the services so that they start at boot: systemctl enable kubelet and systemctl enable docker
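A sketch of the kubelet change above, assuming the CentOS RPM layout in which the kubelet's systemd drop-in reads KUBELET_EXTRA_ARGS from /etc/sysconfig/kubelet. The function name is hypothetical, and it overwrites the file, so merge by hand if you already keep other flags there.

```shell
#!/usr/bin/env bash
# Sketch: let the kubelet start while swap is still enabled.
# Assumption: CentOS RPM layout, where the kubelet drop-in reads
# KUBELET_EXTRA_ARGS from /etc/sysconfig/kubelet.
set_kubelet_swap_flag() {
  local target="${1:-/etc/sysconfig/kubelet}"
  # Overwrites the file; merge by hand if it already holds other flags
  printf '%s\n' 'KUBELET_EXTRA_ARGS="--fail-swap-on=false"' > "$target"
}
```

Run it as root on every machine, then `systemctl enable kubelet docker`.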

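Initialize the control plane with kubeadm (10.244.0.0/16 is the pod network that the flannel manifest expects): kubeadm init --kubernetes-version=v1.15.0 --pod-network-cidr=10.244.0.0/16 --service-cidr=10.96.0.0/12 --ignore-preflight-errors=Swap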

Adjust the kernel network settings (as root):

echo "1" >/proc/sys/net/ipv4/ip_forward

echo "1" >/proc/sys/net/bridge/bridge-nf-call-iptables

echo "1" >/proc/sys/net/bridge/bridge-nf-call-ip6tables

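Alternatively, edit /etc/sysctl.conf (vim /etc/sysctl.conf) and add the following lines, so that you do not have to repeat the echo commands above after every reboot; apply them with sysctl -p:

net.bridge.bridge-nf-call-ip6tables = 1

net.bridge.bridge-nf-call-iptables = 1

net.ipv4.ip_forward = 1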
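A drop-in file under /etc/sysctl.d works as well as editing /etc/sysctl.conf. This sketch assumes the file name k8s.conf (hypothetical), and that the br_netfilter module is loaded first, since the net.bridge.* keys do not exist until it is.

```shell
#!/usr/bin/env bash
# Sketch: persist the three kernel settings kubeadm's preflight checks need.
# Assumption: the drop-in name k8s.conf is illustrative; $1 overrides the path.
write_k8s_sysctl() {
  local target="${1:-/etc/sysctl.d/k8s.conf}"
  cat > "$target" <<'EOF'
net.bridge.bridge-nf-call-ip6tables = 1
net.bridge.bridge-nf-call-iptables = 1
net.ipv4.ip_forward = 1
EOF
}
# As root, afterwards:
#   modprobe br_netfilter   # creates /proc/sys/net/bridge/*
#   write_k8s_sysctl
#   sysctl --system         # loads /etc/sysctl.d/*.conf and /etc/sysctl.conf
```

Do this on the master and on every node; the first join error further below is exactly this setting missing on a node.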

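The images kubeadm needs are hosted on k8s.gcr.io, which is outside China and cannot be reached without a proxy. From inside China you can pull the corresponding images from this Docker Hub mirror instead:

https://hub.docker.com/r/mirrorgooglecontainers/

Run kubeadm config images list --kubernetes-version=v1.15.0 to see which images are required: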

k8s.gcr.io/kube-apiserver:v1.15.0

k8s.gcr.io/kube-controller-manager:v1.15.0

k8s.gcr.io/kube-scheduler:v1.15.0

k8s.gcr.io/kube-proxy:v1.15.0

k8s.gcr.io/pause:3.1

k8s.gcr.io/etcd:3.3.10

k8s.gcr.io/coredns:1.3.1

docker pull mirrorgooglecontainers/kube-apiserver-amd64:v1.15.0

docker pull mirrorgooglecontainers/kube-controller-manager:v1.15.0

docker pull mirrorgooglecontainers/kube-scheduler:v1.15.0

docker pull mirrorgooglecontainers/kube-proxy:v1.15.0

docker pull mirrorgooglecontainers/pause:3.1

docker pull mirrorgooglecontainers/etcd:3.3.10

docker pull coredns/coredns:1.3.1

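// Pod network add-on. Like CoreDNS it is an add-on; flannel is used here. Apply it only after kubeadm init has finished and kubectl is configured: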

kubectl apply -f https://raw.githubusercontent.com/coreos/flannel/master/Documentation/kube-flannel.yml

docker pull mirrorgooglecontainers/kubernetes-dashboard-amd64:v1.10.0 (the dashboard, a web UI for monitoring the cluster)

Finally, retag the images with the names kubeadm expects, such as k8s.gcr.io/kube-apiserver:v1.15.0:

docker tag mirrorgooglecontainers/kube-apiserver-amd64:v1.15.0 k8s.gcr.io/kube-apiserver:v1.15.0

docker tag mirrorgooglecontainers/kube-controller-manager:v1.15.0 k8s.gcr.io/kube-controller-manager:v1.15.0

docker tag mirrorgooglecontainers/kube-scheduler:v1.15.0 k8s.gcr.io/kube-scheduler:v1.15.0

docker tag mirrorgooglecontainers/kube-proxy:v1.15.0 k8s.gcr.io/kube-proxy:v1.15.0

docker tag mirrorgooglecontainers/pause:3.1 k8s.gcr.io/pause:3.1

docker tag mirrorgooglecontainers/etcd:3.3.10 k8s.gcr.io/etcd:3.3.10

docker tag coredns/coredns:1.3.1 k8s.gcr.io/coredns:1.3.1

docker tag mirrorgooglecontainers/kubernetes-dashboard-amd64:v1.10.0 k8s.gcr.io/kubernetes-dashboard-amd64:v1.10.0
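The pull-and-retag commands above follow a fixed pattern, so a loop avoids typos. A sketch in bash; the image list is copied from the commands above, and DRY_RUN=1 is a switch of this script (not a docker option) that only prints the commands.

```shell
#!/usr/bin/env bash
# Each entry: "<image on the Docker Hub mirror> <name kubeadm v1.15.0 expects>"
IMAGES=(
  "mirrorgooglecontainers/kube-apiserver-amd64:v1.15.0 k8s.gcr.io/kube-apiserver:v1.15.0"
  "mirrorgooglecontainers/kube-controller-manager:v1.15.0 k8s.gcr.io/kube-controller-manager:v1.15.0"
  "mirrorgooglecontainers/kube-scheduler:v1.15.0 k8s.gcr.io/kube-scheduler:v1.15.0"
  "mirrorgooglecontainers/kube-proxy:v1.15.0 k8s.gcr.io/kube-proxy:v1.15.0"
  "mirrorgooglecontainers/pause:3.1 k8s.gcr.io/pause:3.1"
  "mirrorgooglecontainers/etcd:3.3.10 k8s.gcr.io/etcd:3.3.10"
  "coredns/coredns:1.3.1 k8s.gcr.io/coredns:1.3.1"
  "mirrorgooglecontainers/kubernetes-dashboard-amd64:v1.10.0 k8s.gcr.io/kubernetes-dashboard-amd64:v1.10.0"
)

pull_and_retag() {
  local run="" entry src dst
  # DRY_RUN=1 prints the docker commands instead of running them
  [ "${DRY_RUN:-0}" = 1 ] && run=echo
  for entry in "${IMAGES[@]}"; do
    read -r src dst <<<"$entry"
    $run docker pull "$src" || return 1
    $run docker tag "$src" "$dst" || return 1
  done
}
```

Preview with `DRY_RUN=1 pull_and_retag`, then run `pull_and_retag` as root on every machine that will run these Pods.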

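Docker registry mirror configuration (/etc/docker/daemon.json; restart Docker after editing it). Each mirror must be a full http(s) URL, so an entry such as docker.io/mirrorgooglecontainers is not valid here:

{
"registry-mirrors": ["https://registry.docker-cn.com","https://docker.mirrors.ustc.edu.cn"]
}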
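A sketch that writes the mirror configuration above. The exec-opts entry is my addition and an assumption: it switches Docker to the systemd cgroup driver that the IsDockerSystemdCheck warning in the logs below recommends. Only change it before kubeadm init/join, because the kubelet must use the same cgroup driver as Docker; drop that line to keep cgroupfs.

```shell
#!/usr/bin/env bash
# Sketch: write Docker's daemon configuration (overwrites an existing file).
write_docker_daemon_json() {
  local target="${1:-/etc/docker/daemon.json}"
  cat > "$target" <<'EOF'
{
  "registry-mirrors": [
    "https://registry.docker-cn.com",
    "https://docker.mirrors.ustc.edu.cn"
  ],
  "exec-opts": ["native.cgroupdriver=systemd"]
}
EOF
}
```

Then restart Docker with `systemctl restart docker` and check the mirrors and cgroup driver in the output of `docker info`.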

Next, run kubeadm init:

// This is the output of my successful run:

[root@jason ~]# kubeadm init --kubernetes-version=v1.15.0 --pod-network-cidr=10.244.0.0/16 --service-cidr=10.96.0.0/12 --ignore-preflight-errors=Swap

[init] Using Kubernetes version: v1.15.0

[preflight] Running pre-flight checks

[WARNING IsDockerSystemdCheck]: detected "cgroupfs" as the Docker cgroup driver. The recommended driver is "systemd". Please follow the guide at https://kubernetes.io/docs/setup/cri/

[WARNING Swap]: running with swap on is not supported. Please disable swap

[preflight] Pulling images required for setting up a Kubernetes cluster

[preflight] This might take a minute or two, depending on the speed of your internet connection

[preflight] You can also perform this action in beforehand using 'kubeadm config images pull'

[kubelet-start] Writing kubelet environment file with flags to file "/var/lib/kubelet/kubeadm-flags.env"

[kubelet-start] Writing kubelet configuration to file "/var/lib/kubelet/config.yaml"

[kubelet-start] Activating the kubelet service

[certs] Using certificateDir folder "/etc/kubernetes/pki"

[certs] Generating "front-proxy-ca" certificate and key

[certs] Generating "front-proxy-client" certificate and key

[certs] Generating "etcd/ca" certificate and key

[certs] Generating "etcd/peer" certificate and key

[certs] etcd/peer serving cert is signed for DNS names [jason.com localhost] and IPs [192.168.33.54 127.0.0.1 ::1]

[certs] Generating "etcd/healthcheck-client" certificate and key

[certs] Generating "etcd/server" certificate and key

[certs] etcd/server serving cert is signed for DNS names [jason.com localhost] and IPs [192.168.33.54 127.0.0.1 ::1]

[certs] Generating "apiserver-etcd-client" certificate and key

[certs] Generating "ca" certificate and key

[certs] Generating "apiserver" certificate and key

[certs] apiserver serving cert is signed for DNS names [jason.com kubernetes kubernetes.default kubernetes.default.svc kubernetes.default.svc.cluster.local] and IPs [10.96.0.1 192.168.33.54]

[certs] Generating "apiserver-kubelet-client" certificate and key

[certs] Generating "sa" key and public key

[kubeconfig] Using kubeconfig folder "/etc/kubernetes"

[kubeconfig] Writing "admin.conf" kubeconfig file

[kubeconfig] Writing "kubelet.conf" kubeconfig file

[kubeconfig] Writing "controller-manager.conf" kubeconfig file

[kubeconfig] Writing "scheduler.conf" kubeconfig file

[control-plane] Using manifest folder "/etc/kubernetes/manifests"

[control-plane] Creating static Pod manifest for "kube-apiserver"

[control-plane] Creating static Pod manifest for "kube-controller-manager"

[control-plane] Creating static Pod manifest for "kube-scheduler"

[etcd] Creating static Pod manifest for local etcd in "/etc/kubernetes/manifests"

[wait-control-plane] Waiting for the kubelet to boot up the control plane as static Pods from directory "/etc/kubernetes/manifests". This can take up to 4m0s

[kubelet-check] Initial timeout of 40s passed.

[apiclient] All control plane components are healthy after 47.021223 seconds

[upload-config] Storing the configuration used in ConfigMap "kubeadm-config" in the "kube-system" Namespace

[kubelet] Creating a ConfigMap "kubelet-config-1.15" in namespace kube-system with the configuration for the kubelets in the cluster

[upload-certs] Skipping phase. Please see --upload-certs

[mark-control-plane] Marking the node jason.com as control-plane by adding the label "node-role.kubernetes.io/master=''"

[mark-control-plane] Marking the node jason.com as control-plane by adding the taints [node-role.kubernetes.io/master:NoSchedule]

[bootstrap-token] Using token: p0uxl7.lx0szgjzt4i2y7cg

[bootstrap-token] Configuring bootstrap tokens, cluster-info ConfigMap, RBAC Roles

[bootstrap-token] configured RBAC rules to allow Node Bootstrap tokens to post CSRs in order for nodes to get long term certificate credentials

[bootstrap-token] configured RBAC rules to allow the csrapprover controller automatically approve CSRs from a Node Bootstrap Token

[bootstrap-token] configured RBAC rules to allow certificate rotation for all node client certificates in the cluster

[bootstrap-token] Creating the "cluster-info" ConfigMap in the "kube-public" namespace

[addons] Applied essential addon: CoreDNS

[addons] Applied essential addon: kube-proxy

Your Kubernetes control-plane has initialized successfully!

To start using your cluster, you need to run the following as a regular user:

// These commands must be run, otherwise kubectl get nodes will not work:

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

You should now deploy a pod network to the cluster.

Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:

https://kubernetes.io/docs/concepts/cluster-administration/addons/

Then you can join any number of worker nodes by running the following on each as root:

// This is the command that joins the other (worker) nodes to this cluster:

kubeadm join 192.168.33.54:6443 --token p0uxl7.lx0szgjzt4i2y7cg \

--discovery-token-ca-cert-hash sha256:b03a31835f36e278a0649bd0201fe6633f5e8b8d126879256b5d38b382444a1f

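When the cluster is created successfully, kubeadm prints the commands above. They set up admin.conf, the kubeconfig file holding the administrator credentials that RBAC allows to access nodes, pods and everything else in the cluster. Because Kubernetes enforces authentication and authorization, if you want to view node or pod information from another node, you must first copy this file generated on the master to that node.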

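Note: if the token for joining nodes has expired (bootstrap tokens are valid for 24 hours by default) or you no longer have it, generate a new join command with: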

kubeadm token create --print-join-command
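If you only have a token (for example from kubeadm token list) and need the --discovery-token-ca-cert-hash value, it is the SHA-256 of the CA certificate's public key. The pipeline below is the one given in the kubeadm documentation, wrapped in a hypothetical bash function; the default path is where kubeadm init writes the CA on the master.

```shell
#!/usr/bin/env bash
# Sketch: compute the discovery hash for kubeadm join from the cluster CA.
ca_cert_hash() {
  local ca="${1:-/etc/kubernetes/pki/ca.crt}"
  # Extract the public key, convert it to DER, hash it with SHA-256 and
  # keep only the hex digest (the last field of the dgst output)
  openssl x509 -pubkey -in "$ca" \
    | openssl rsa -pubin -outform der 2>/dev/null \
    | openssl dgst -sha256 -hex \
    | sed 's/^.* //'
}
```

Print it on the master with `echo sha256:$(ca_cert_hash)` and pass that value to `kubeadm join` on the node.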

Problems I ran into:

Problem 1:

[root@node1 ~]# kubeadm join 192.168.0.104:6443 --token tti5k7.t0unuw5g6hv9suk7 --discovery-token-ca-cert-hash sha256:314ef877e9c5c0a9287785a58cfb14e7d0d464681ddd73f344cd35a0f3378c91 --ignore-preflight-errors=Swap

[preflight] Running pre-flight checks

[WARNING IsDockerSystemdCheck]: detected "cgroupfs" as the Docker cgroup driver. The recommended driver is "systemd". Please follow the guide at https://kubernetes.io/docs/setup/cri/

error execution phase preflight: [preflight] Some fatal errors occurred:

[ERROR FileContent--proc-sys-net-bridge-bridge-nf-call-iptables]: /proc/sys/net/bridge/bridge-nf-call-iptables contents are not set to 1

[preflight] If you know what you are doing, you can make a check non-fatal with `--ignore-preflight-errors=...`

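For this one I simply ran kubeadm reset and then ran the join command again. The error itself means /proc/sys/net/bridge/bridge-nf-call-iptables must also be 1 on the node, so apply the same network settings as on the master before joining.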

Problem 2: the hostname could not be reached.

[root@node1 ~]# kubeadm join 192.168.0.104:6443 --token tti5k7.t0unuw5g6hv9suk7 --discovery-token-ca-cert-hash sha256:314ef877e9c5c0a9287785a58cfb14e7d0d464681ddd73f344cd35a0f3378c91 --ignore-preflight-errors=Swap

[preflight] Running pre-flight checks

[WARNING IsDockerSystemdCheck]: detected "cgroupfs" as the Docker cgroup driver. The recommended driver is "systemd". Please follow the guide at https://kubernetes.io/docs/setup/cri/

[WARNING Hostname]: hostname "node1" could not be reached

[WARNING Hostname]: hostname "node1": lookup node1 on 116.116.116.116:53: no such host

error execution phase preflight: [preflight] Some fatal errors occurred:

[ERROR FileContent--proc-sys-net-bridge-bridge-nf-call-iptables]: /proc/sys/net/bridge/bridge-nf-call-iptables contents are not set to 1

[preflight] If you know what you are doing, you can make a check non-fatal with `--ignore-preflight-errors=...`

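Solution: after a lot of digging, I realized that all my CentOS 7 VMs were cloned, so they all had the same hostname, which is why kubectl get nodes never showed the node I had joined. I changed /etc/hostname so that every machine has a unique name, rebooted, and joined again.

Then on the master: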

[root@CentOS7 ~]# kubectl get nodes

NAME STATUS ROLES AGE VERSION

centos7 Ready master 47m v1.15.0

node1 Ready <none> 9m19s v1.15.0

[root@CentOS7 ~]# kubectl get pods -n kube-system -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

coredns-5c98db65d4-5p5h2 1/1 Running 0 47m 10.244.0.7 centos7 <none> <none>

coredns-5c98db65d4-9t6nk 1/1 Running 0 47m 10.244.0.6 centos7 <none> <none>

etcd-centos7 1/1 Running 0 46m 192.168.0.104 centos7 <none> <none>

kube-apiserver-centos7 1/1 Running 0 46m 192.168.0.104 centos7 <none> <none>

kube-controller-manager-centos7 1/1 Running 0 46m 192.168.0.104 centos7 <none> <none>

kube-flannel-ds-amd64-8zdl9 1/1 Running 0 9m26s 192.168.0.105 node1 <none> <none>

kube-flannel-ds-amd64-dkwbd 1/1 Running 0 43m 192.168.0.104 centos7 <none> <none>

kube-proxy-7q5gx 1/1 Running 0 9m26s 192.168.0.105 node1 <none> <none>

kube-proxy-k6n4g 1/1 Running 0 47m 192.168.0.104 centos7 <none> <none>

kube-scheduler-centos7 1/1 Running 0 46m 192.168.0.104 centos7 <none> <none>
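For the duplicate-hostname problem, each cloned VM needs its own name, and it helps if every machine can resolve the others. A sketch, assuming bash and the three VMs from the top of this article (edit the list if your addresses differ, as they did in my later runs): set the name with `hostnamectl set-hostname node1` (or master, node2) on each machine, then add the entries to /etc/hosts.

```shell
#!/usr/bin/env bash
# Addresses of the three VMs listed at the top of this article
CLUSTER_HOSTS=(
  "192.168.33.52 master"
  "192.168.33.35 node1"
  "192.168.33.50 node2"
)

add_cluster_hosts() {
  local hosts_file="${1:-/etc/hosts}" entry ip name
  for entry in "${CLUSTER_HOSTS[@]}"; do
    read -r ip name <<<"$entry"
    # Append only if the name is not mapped yet, so reruns add nothing
    if ! grep -qE "[[:space:]]${name}([[:space:]]|\$)" "$hosts_file"; then
      printf '%s %s\n' "$ip" "$name" >> "$hosts_file"
    fi
  done
}
```

Run `add_cluster_hosts` as root on every machine after renaming it.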

Problem 3:

[root@node2 ~]# systemctl start kubelet

[root@node2 ~]# kubeadm join 192.168.0.104:6443 --token jmmssi.htlyivb16smw9s0h \

> --discovery-token-ca-cert-hash sha256:a0c394447b9a40dd2e4e1f59794d9f0847436637aacc33e300cd66564f01872e --ignore-preflight-errors=Swap

[preflight] Running pre-flight checks

[WARNING IsDockerSystemdCheck]: detected "cgroupfs" as the Docker cgroup driver. The recommended driver is "systemd". Please follow the guide at https://kubernetes.io/docs/setup/cri/

[WARNING Swap]: running with swap on is not supported. Please disable swap

error execution phase preflight: [preflight] Some fatal errors occurred:

[ERROR FileAvailable--etc-kubernetes-kubelet.conf]: /etc/kubernetes/kubelet.conf already exists

[ERROR FileAvailable--etc-kubernetes-bootstrap-kubelet.conf]: /etc/kubernetes/bootstrap-kubelet.conf already exists

[ERROR FileAvailable--etc-kubernetes-pki-ca.crt]: /etc/kubernetes/pki/ca.crt already exists

[preflight] If you know what you are doing, you can make a check non-fatal with `--ignore-preflight-errors=...`

[root@node2 ~]# rm -rf /etc/kubernetes/pki/ca.crt

[root@node2 ~]# rm -rf /etc/kubernetes/bootstrap-kubelet.conf

[root@node2 ~]# rm -rf /etc/kubernetes/kubelet.conf

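Joining often fails because certificates and config files from an earlier attempt already exist; just delete them (running kubeadm reset on the node also removes them).

Problem 4: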

[root@CentOS7 ~]# kubectl get nodes

Unable to connect to the server: x509: certificate signed by unknown authority (possibly because of "crypto/rsa: verification error" while trying to verify candidate authority certificate "kubernetes")

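This error means the cluster is running, but kubectl is not using its current admin credentials: $HOME/.kube/config is missing or, as the x509 error suggests, left over from an earlier kubeadm init (for example after kubeadm reset and a fresh init). Run the following commands (answer y if cp -i asks to overwrite):

mkdir -p $HOME/.kube

cp -i /etc/kubernetes/admin.conf $HOME/.kube/config // admin.conf is the config file on the master, in /etc/kubernetes

After that, kubectl get nodes works.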

Problem 5: running kubeadm join ... on a worker node fails with the following error

[root@node2 ~]# kubeadm join 192.168.0.104:6443 --token jmmssi.htlyivb16smw9s0h --discovery-token-ca-cert-hash sha256:a0c394447b9a40dd2e4e1f59794d9f0847436637aacc33e300cd66564f01872e --ignore-preflight-errors=Swap

[preflight] Running pre-flight checks

[WARNING IsDockerSystemdCheck]: detected "cgroupfs" as the Docker cgroup driver. The recommended driver is "systemd". Please follow the guide at https://kubernetes.io/docs/setup/cri/

[WARNING Swap]: running with swap on is not supported. Please disable swap

[preflight] Reading configuration from the cluster...

[preflight] FYI: You can look at this config file with 'kubectl -n kube-system get cm kubeadm-config -oyaml'

[kubelet-start] Downloading configuration for the kubelet from the "kubelet-config-1.15" ConfigMap in the kube-system namespace

[kubelet-start] Writing kubelet configuration to file "/var/lib/kubelet/config.yaml"

[kubelet-start] Writing kubelet environment file with flags to file "/var/lib/kubelet/kubeadm-flags.env"

[kubelet-start] Activating the kubelet service

[kubelet-start] Waiting for the kubelet to perform the TLS Bootstrap...

[kubelet-check] Initial timeout of 40s passed.

[kubelet-check] It seems like the kubelet isn't running or healthy.

[kubelet-check] The HTTP call equal to 'curl -sSL http://localhost:10248/healthz' failed with error: Get http://localhost:10248/healthz: dial tcp [::1]:10248: connect: connection refused.

[kubelet-check] It seems like the kubelet isn't running or healthy.

[kubelet-check] The HTTP call equal to 'curl -sSL http://localhost:10248/healthz' failed with error: Get http://localhost:10248/healthz: dial tcp [::1]:10248: connect: connection refused.

Solution: copy the admin.conf file from the master to the node, then run the following commands:

[root@node2 system]# mkdir -p $HOME/.kube

[root@node2 system]# cp -i admin.conf $HOME/.kube/config

After that, kubeadm join ... worked.

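Problem 6: after kubeadm join, the node stays NotReady:

node1 NotReady <none> 24m v1.15.0

It turned out the flannel installation had failed: no flannel network interface had been created, and ifconfig showed no flannel entries.

Solution: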

mkdir -p /etc/cni/net.d/
cat <<EOF > /etc/cni/net.d/10-flannel.conf
{"name":"cbr0","type":"flannel","delegate": {"isDefaultGateway": true}}
EOF
mkdir -p /usr/share/oci-umount/oci-umount.d
mkdir -p /run/flannel/
# FLANNEL_SUBNET is node1's /24 here; each node gets its own /24 out of 10.244.0.0/16
cat <<EOF > /run/flannel/subnet.env
FLANNEL_NETWORK=10.244.0.0/16
FLANNEL_SUBNET=10.244.1.1/24
FLANNEL_MTU=1450
FLANNEL_IPMASQ=true
EOF

// This step is optional; run it only if the node still does not become Ready:

kubectl apply -f https://raw.githubusercontent.com/coreos/flannel/v0.9.1/Documentation/kube-flannel.yml

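Problem 7: if the servers are shut down and restarted often, kubeadm join may fail with the errors below (port 10250 in use, leftover files). Run kubeadm reset and then join again: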

[root@node1 ~]# kubeadm join 192.168.33.52:6443 --token d0brol.m39hv6l241gzha1i --discovery-token-ca-cert-hash sha256:65f82496a3196b33b02849526ae3607a0a0fda564ab44fe7ad575e60d7c60c77

[preflight] Running pre-flight checks

[WARNING IsDockerSystemdCheck]: detected "cgroupfs" as the Docker cgroup driver. The recommended driver is "systemd". Please follow the guide at https://kubernetes.io/docs/setup/cri/

error execution phase preflight: [preflight] Some fatal errors occurred:

[ERROR FileAvailable--etc-kubernetes-kubelet.conf]: /etc/kubernetes/kubelet.conf already exists

[ERROR FileAvailable--etc-kubernetes-bootstrap-kubelet.conf]: /etc/kubernetes/bootstrap-kubelet.conf already exists

[ERROR Port-10250]: Port 10250 is in use

[ERROR FileAvailable--etc-kubernetes-pki-ca.crt]: /etc/kubernetes/pki/ca.crt already exists

[preflight] If you know what you are doing, you can make a check non-fatal with `--ignore-preflight-errors=...`

[root@node1 ~]# kubeadm reset
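This case and Problem 3 both come down to leftovers from an earlier join. A sketch of a cleanup helper, assuming kubeadm reset -f behaves as I understand it for v1.15: it stops the kubelet (freeing port 10250) and deletes /etc/kubernetes/kubelet.conf, bootstrap-kubelet.conf and the pki directory contents. The explicit rm only repeats that for the files the preflight check reported. DRY_RUN=1 is a switch of this script that only prints the commands.

```shell
#!/usr/bin/env bash
# Sketch: clean a worker node before running kubeadm join again.
reset_node() {
  local run=""
  [ "${DRY_RUN:-0}" = 1 ] && run=echo
  # -f skips the confirmation prompt
  $run kubeadm reset -f || return 1
  # The files the preflight check reported as already existing
  $run rm -f /etc/kubernetes/kubelet.conf \
    /etc/kubernetes/bootstrap-kubelet.conf /etc/kubernetes/pki/ca.crt
}
```

Run `reset_node` as root on the worker, then repeat the kubeadm join command.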

Step 2: Learning kubectl commands

// Run an application on the cluster: container port 80, one replica

kubectl run nginx-deploy --image=nginx:1.14-alpine --port=80 --replicas=1

// Show the Deployment that was created

kubectl get deployment

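// Show the application's Pods, similar to the above

kubectl get pods (kubectl get pod also works)

// Show more details, including each Pod's IP and the node it runs on (used often)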

kubectl get pods -o wide

// Show the labels attached to each Pod

[root@master ~]# kubectl get pods --show-labels

NAME READY STATUS RESTARTS AGE LABELS

client 0/1 Error 0 28m run=client

nginx-deploy-7689897d8d-74lmm 1/1 Running 0 16m pod-template-hash=7689897d8d,run=nginx-deploy

// Delete a Pod. Because it is managed by a Deployment, the controller immediately creates a replacement nginx Pod

kubectl delete pod nginx-deploy-7689897d8d-snfmk

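// This raises a problem: when a Pod is deleted and the controller starts a new one, the IP address changes,

// so we usually do not know the Pod's IP and cannot reach it reliably. The fix is to create a Service in front of the Pods;

// clients then use the Service's IP, which stays the same however the Pods change, and the Service forwards to the matching port

// because a Service and its Pods are associated only through labels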

[root@master home]# kubectl expose deployment nginx-deploy --name=nginx --port=80 --target-port=80 --protocol=TCP

// The Service was created successfully

service/nginx exposed

// Check the Service

[root@master home]# kubectl get svc

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

kubernetes ClusterIP 10.96.0.1 <none> 443/TCP 110m

nginx ClusterIP 10.110.244.67 <none> 80/TCP 2m30s
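The kubectl run and kubectl expose commands above can also be written as a manifest, which is easier to keep under version control (later kubectl releases changed kubectl run to create bare Pods). A sketch, assuming apps/v1 and the run=nginx-deploy label that kubectl run applied in v1.15; the file name is hypothetical.

```shell
#!/usr/bin/env bash
# Sketch: declarative equivalent of `kubectl run` + `kubectl expose` above.
write_nginx_manifest() {
  local target="${1:-nginx-deploy.yaml}"
  cat > "$target" <<'EOF'
apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-deploy
spec:
  replicas: 1
  selector:
    matchLabels:
      run: nginx-deploy
  template:
    metadata:
      labels:
        run: nginx-deploy
    spec:
      containers:
      - name: nginx-deploy
        image: nginx:1.14-alpine
        ports:
        - containerPort: 80
---
apiVersion: v1
kind: Service
metadata:
  name: nginx
spec:
  selector:
    run: nginx-deploy   # must match the Pod template labels
  ports:
  - protocol: TCP
    port: 80
    targetPort: 80
EOF
}
```

Apply it with `kubectl apply -f nginx-deploy.yaml`.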

// Show which Pods the Service is bound to: the Selector matches the Pods' labels, and Endpoints lists their IPs

[root@master home]# kubectl describe svc nginx

Name: nginx

Namespace: default

Labels: run=nginx-deploy

Annotations: <none>

Selector: run=nginx-deploy

Type: ClusterIP

IP: 10.110.244.67

Port: <unset> 80/TCP

TargetPort: 80/TCP

Endpoints: 10.244.1.6:80

Session Affinity: None

Events: <none>

// Show details of the application's Pods, similar to docker inspect for a container (used often)

[root@master home]# kubectl describe pod nginx-deploy

// Scale the application to more replicas

[root@master ~]# kubectl scale --replicas=2 Deployment nginx-deploy

deployment.extensions/nginx-deploy scaled

// Upgrade the application's image; the Deployment performs a rolling update, replacing Pods one at a time

[root@master home]# kubectl set image deployment nginx-deploy nginx-deploy=nginx:1.15-alpine

deployment.extensions/nginx-deploy image updated

// Check the rollout status (this reports progress; rolling back would be kubectl rollout undo)

[root@master home]# kubectl rollout status deployment nginx-deploy

deployment "nginx-deploy" successfully rolled out

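// I am running two nginx replicas; Kubernetes scheduled them on different nodes, and the Service forwards to all of them, as the Endpoints in the description show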

[root@master home]# kubectl get nodes -o wide

NAME STATUS ROLES AGE VERSION INTERNAL-IP EXTERNAL-IP OS-IMAGE KERNEL-VERSION CONTAINER-RUNTIME

master Ready master 7h45m v1.15.0 192.168.0.104 <none> CentOS Linux 7 (Core) 3.10.0-957.el7.x86_64 docker://1.13.1

node1 Ready <none> 7h39m v1.15.0 192.168.0.106 <none> CentOS Linux 7 (Core) 3.10.0-957.el7.x86_64 docker://18.9.6

node2 Ready <none> 28m v1.15.0 192.168.0.120 <none> CentOS Linux 7 (Core) 3.10.0-957.el7.x86_64 docker://18.9.7

[root@master home]# kubectl describe svc nginx

Name: nginx

Namespace: default

Labels: run=nginx-deploy

Annotations: <none>

Selector: run=nginx-deploy

Type: NodePort

IP: 10.99.105.250

Port: <unset> 80/TCP

TargetPort: 80/TCP

NodePort: <unset> 32047/TCP

Endpoints: 10.244.1.8:80,10.244.2.3:80

Session Affinity: None

External Traffic Policy: Cluster

Events: <none>

To make the application reachable from outside the cluster, change the Service type:

## Change type: ClusterIP to type: NodePort, which exposes the Service on a port of every node

[root@master home]# kubectl edit svc nginx

## Checking again shows the port mapping 80:32047/TCP

[root@master home]# kubectl get svc

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

kubernetes ClusterIP 10.96.0.1 <none> 443/TCP 3h22m

nginx NodePort 10.99.105.250 <none> 80:32047/TCP 43m

# The application can now be reached from outside through any node: http://192.168.0.106:32047/, http://192.168.0.120:32047/
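The interactive kubectl edit step can be replaced by a patch, and the node URLs built from the NodePort. A sketch; the node IPs are the ones from kubectl get nodes -o wide above, the function names are hypothetical, and DRY_RUN=1 only prints the kubectl command.

```shell
#!/usr/bin/env bash
# Node IPs taken from the `kubectl get nodes -o wide` output above
NODE_IPS=(192.168.0.106 192.168.0.120)

patch_to_nodeport() {
  local svc="${1:-nginx}" run=""
  [ "${DRY_RUN:-0}" = 1 ] && run=echo
  # Same effect as changing type: ClusterIP to type: NodePort in kubectl edit
  $run kubectl patch svc "$svc" -p '{"spec":{"type":"NodePort"}}'
}

node_urls() {
  local port="$1" ip
  for ip in "${NODE_IPS[@]}"; do
    printf 'http://%s:%s/\n' "$ip" "$port"
  done
}
```

After `patch_to_nodeport`, read the assigned port with `port=$(kubectl get svc nginx -o jsonpath='{.spec.ports[0].nodePort}')` and test each node with `for url in $(node_urls "$port"); do curl -sI "$url" | head -n 1; done`.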

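Step 3: Kubernetes authentication and service accounts (creating a jason user)

// Every Pod runs under a service account, which it uses to authenticate to the apiserver.

// The service account can be specified in the YAML manifest, and we can also create our own:

kubectl create serviceaccount mysa -o yaml

List service accounts: kubectl get sa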

// Show the current kubectl configuration: clusters (cluster information), contexts (which user accesses which cluster) and users

[root@master pki]# kubectl config view

apiVersion: v1
clusters:              # cluster entries; there can be several
- cluster:
    certificate-authority-data: DATA+OMITTED
    server: https://192.168.0.104:6443
  name: kubernetes
contexts:
- context:             # which cluster is accessed by which user
    cluster: kubernetes
    user: kubernetes-admin
  name: kubernetes-admin@kubernetes
current-context: kubernetes-admin@kubernetes
kind: Config
preferences: {}
users:                 # user entries; the file can hold several
- name: kubernetes-admin
  user:
    client-certificate-data: REDACTED
    client-key-data: REDACTED

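// Now create our own certificate, signed by the cluster CA. Work in /etc/kubernetes/pki:

1. (umask 077; openssl genrsa -out jason.key 2048) // create the private key jason.key

2. openssl req -new -key jason.key -out jason.csr -subj "/CN=jason" // create the signing request jason.csr for the user name jason (the CN becomes the Kubernetes user name)

3. openssl x509 -req -in jason.csr -CA ca.crt -CAkey ca.key -out jason.crt -CAcreateserial -days 366 // sign it with the cluster CA to produce jason.crt, valid for 366 days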

// The jason certificate has now been created.

// You can inspect it with: openssl x509 -in jason.crt -text -noout
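The three openssl steps can be wrapped in one function. A sketch with a hypothetical name, assuming it runs against the directory that holds the cluster CA (ca.crt and ca.key, /etc/kubernetes/pki on the master). Kubernetes takes the user name from the certificate's CN (and group names from O, which this sketch does not set).

```shell
#!/usr/bin/env bash
# Sketch: create <user>.key, <user>.csr and a CA-signed <user>.crt.
make_user_cert() {
  local user="$1" dir="${2:-/etc/kubernetes/pki}" days="${3:-366}"
  (
    cd "$dir" || exit 1
    # Private key readable only by the owner
    (umask 077; openssl genrsa -out "$user.key" 2048) || exit 1
    # CN becomes the Kubernetes user name
    openssl req -new -key "$user.key" -out "$user.csr" -subj "/CN=$user" || exit 1
    # Sign with the cluster CA; -CAcreateserial creates the serial file on first use
    openssl x509 -req -in "$user.csr" -CA ca.crt -CAkey ca.key \
      -CAcreateserial -out "$user.crt" -days "$days"
  )
}
```

For the user in this article: `make_user_cert jason`.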

// Next, add the jason certificate to the users section of the kubeconfig file

kubectl config set-credentials jason --client-certificate=jason.crt --client-key=jason.key --embed-certs=true

// Create a context that ties the jason user to the kubernetes cluster

kubectl config set-context kubernetes-jason@kubernetes --cluster=kubernetes --user=jason

// Finally, switch to the jason context we just created

kubectl config use-context kubernetes-jason@kubernetes

// Try to list Pods

kubectl get pods

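This fails with: Error from server (Forbidden): pods is forbidden: User "jason" cannot list resource "pods" in API group "" in the namespace "default". Authentication worked, but jason has no permissions yet (switch back with kubectl config use-context kubernetes-admin@kubernetes).

// Below is the resulting configuration (view it with kubectl config view):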


[root@master .kube]# kubectl config view

apiVersion: v1
clusters:
- cluster:
    certificate-authority-data: DATA+OMITTED
    server: https://192.168.0.104:6443
  name: kubernetes
contexts:
- context:
    cluster: kubernetes
    user: kubernetes-admin
  name: kubernetes-admin@kubernetes
- context:             # newly added context, tied to the cluster named kubernetes
    cluster: kubernetes
    user: jason
  name: kubernetes-jason@kubernetes
current-context: kubernetes-admin@kubernetes
kind: Config
preferences: {}
users:
- name: jason          # newly added user
  user:
    client-certificate-data: REDACTED
    client-key-data: REDACTED
- name: kubernetes-admin
  user:
    client-certificate-data: REDACTED
    client-key-data: REDACTED

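Step 4: RBAC authorization in Kubernetes

Type 1: RBAC --> (Role, RoleBinding)

// Bind the jason account created in step 3 to an RBAC Role through a RoleBinding, so that jason is allowed to read Pods

1. First generate a Role YAML file in the manifests directory: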

[root@master mainfests]# kubectl create role pod-reader --verb=get,list,watch --resource=pods,pods/status --dry-run -o yaml > role-demo.yaml

2. Create the Role from role-demo.yaml

[root@master mainfests]# kubectl create -f role-demo.yaml

3. List or describe the new Role

[root@master mainfests]# kubectl get role

NAME AGE

pod-reader 12s

[root@master mainfests]# kubectl describe role pod-reader

4. Bind the Role to the jason user with a RoleBinding: generate the manifest first, then apply it

[root@master mainfests]# kubectl create rolebinding jason-read-pods --role=pod-reader --user=jason --dry-run -o yaml > rolebingding-demo.yaml

[root@master mainfests]# kubectl apply -f rolebingding-demo.yaml

5. Finally, switch to the jason context and list Pods again; this time the request is allowed.

kubectl config use-context kubernetes-jason@kubernetes
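The two generated manifests should look roughly like the following. This sketch writes them by hand into one file (the file name is hypothetical); afterwards you can check the result from the admin context, without switching users, with `kubectl auth can-i list pods --as=jason`.

```shell
#!/usr/bin/env bash
# Sketch: Role + RoleBinding equivalent to the kubectl create commands above.
write_rbac_demo() {
  local target="${1:-rbac-demo.yaml}"
  cat > "$target" <<'EOF'
apiVersion: rbac.authorization.k8s.io/v1
kind: Role
metadata:
  name: pod-reader
  namespace: default
rules:
- apiGroups: [""]
  resources: ["pods", "pods/status"]
  verbs: ["get", "list", "watch"]
---
apiVersion: rbac.authorization.k8s.io/v1
kind: RoleBinding
metadata:
  name: jason-read-pods
  namespace: default
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: Role
  name: pod-reader
subjects:
- apiGroup: rbac.authorization.k8s.io
  kind: User
  name: jason
EOF
}
```

Apply it with `kubectl apply -f rbac-demo.yaml`.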

Type 2: RBAC --> (ClusterRole, ClusterRoleBinding)
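This works like the Role example, but cluster-wide. A sketch with hypothetical names: a ClusterRole is not namespaced, so binding it with a ClusterRoleBinding grants its verbs in every namespace, whereas binding a ClusterRole with a RoleBinding (as the def-ns-admin example later does with the built-in admin role) limits it to one namespace. DRY_RUN=1 only prints the commands.

```shell
#!/usr/bin/env bash
# Sketch: let a user read Pods in all namespaces.
# Hypothetical names: cluster-pod-reader and <user>-read-all-pods
grant_cluster_pod_reader() {
  local user="${1:-jason}" run=""
  [ "${DRY_RUN:-0}" = 1 ] && run=echo
  $run kubectl create clusterrole cluster-pod-reader \
    --verb=get,list,watch --resource=pods || return 1
  $run kubectl create clusterrolebinding "${user}-read-all-pods" \
    --clusterrole=cluster-pod-reader --user="$user"
}
```

After `grant_cluster_pod_reader jason`, `kubectl auth can-i list pods --all-namespaces --as=jason` should answer yes.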

Step 5: Deploying the Kubernetes Dashboard web UI


Step 1: Installation

I downloaded the manifest: wget https://raw.githubusercontent.com/kubernetes/dashboard/v1.10.1/src/deploy/recommended/kubernetes-dashboard.yaml

Then edit the Service in it to expose a port:

kind: Service
apiVersion: v1
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard
  namespace: kube-system
spec:
  type: NodePort
  ports:
    - port: 443
      nodePort: 30000
      targetPort: 8443
  selector:
    k8s-app: kubernetes-dashboard

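The Pod kept ending up in ImagePullBackOff. It turned out the dashboard had been scheduled onto a worker node, which did not have the image.

I installed it from the YAML manifest, which pulls the dashboard image from a registry outside China that cannot be reached from here,

so I edited the YAML and added imagePullPolicy: IfNotPresent under the container (meaning: use the local image if it is already present, and pull only when it is missing).

The installation then succeeded but the Pod still failed to run, because it had been placed on a worker node; the dashboard image therefore has to be pulled on the nodes as well.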

docker tag docker.io/mirrorgooglecontainers/kubernetes-dashboard-amd64 k8s-dashboard

Step 2: Access

After kubernetes-dashboard was deployed, it could not be opened in Chrome. The following commands fixed the access problem.

mkdir key && cd key

# Generate the certificate

openssl genrsa -out dashboard.key 2048

openssl req -new -out dashboard.csr -key dashboard.key -subj '/CN=192.168.0.106'

openssl x509 -req -in dashboard.csr -signkey dashboard.key -out dashboard.crt -CAcreateserial -days 366

# Delete the existing certificate secret

kubectl delete secret kubernetes-dashboard-certs -n kube-system

# Create a new certificate secret

kubectl create secret generic kubernetes-dashboard-certs --from-file=dashboard.key --from-file=dashboard.crt -n kube-system

# Find the Pod

kubectl get pod -n kube-system

# Restart the Pod

kubectl delete pod <pod name> -n kube-system # <pod name> is the kubernetes-dashboard Pod
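The certificate commands above as one function. A sketch with a hypothetical name; the CN is the node address used above. Since -signkey makes the certificate self-signed, the -CAcreateserial option from the original command is not needed here.

```shell
#!/usr/bin/env bash
# Sketch: create dashboard.key, dashboard.csr and a self-signed dashboard.crt.
make_dashboard_cert() {
  local cn="$1" dir="${2:-.}"
  (
    cd "$dir" || exit 1
    openssl genrsa -out dashboard.key 2048 || exit 1
    openssl req -new -key dashboard.key -out dashboard.csr -subj "/CN=$cn" || exit 1
    # -signkey signs the request with its own key (self-signed)
    openssl x509 -req -in dashboard.csr -signkey dashboard.key \
      -out dashboard.crt -days 366
  )
}
```

Run `make_dashboard_cert 192.168.0.106 key`, then recreate the secret from key/dashboard.key and key/dashboard.crt as shown above.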

1 Logging in with a token (the common way)

(1) Create a service account

[root@master1 pki]# kubectl create serviceaccount dashboard-admin -n kube-system

serviceaccount/dashboard-admin created

[root@master1 pki]# kubectl get sa -n kube-system

NAME SECRETS AGE

......

dashboard-admin 1 13s

......

(2) Bind the service account to the cluster-admin ClusterRole, giving it administrative access to the whole cluster

[root@master1 pki]# kubectl create clusterrolebinding dashboard-cluster-admin --clusterrole=cluster-admin --serviceaccount=kube-system:dashboard-admin

clusterrolebinding.rbac.authorization.k8s.io/dashboard-cluster-admin created

(3) Get the service account's secret, which contains the token

[root@master1 pki]# kubectl get secret -n kube-system

NAME TYPE DATA

......

daemon-set-controller-token-t4jhj kubernetes.io/service-account-token 3

......

[root@master1 pki]# kubectl describe secret dashboard-admin-token-lg48q -n kube-system

Name: dashboard-admin-token-lg48q

Namespace: kube-system

Labels: <none>

Annotations: kubernetes.io/service-account.name: dashboard-admin

kubernetes.io/service-account.uid: 3cf69e4e-2458-11e9-81cc-000c291e37c2

Type: kubernetes.io/service-account-token

Data

====

ca.crt: 1025 bytes

namespace: 11 bytes

token: eyJhbGciOiJSUzI1NiIsImtpZCI6IiJ9.eyJpc3MiOiJrdWJlcm5ldGVzL3NlcnZpY2VhY2NvdW50Iiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9uYW1lc3BhY2UiOiJrdWJlLXN5c3RlbSIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VjcmV0Lm5hbWUiOiJkYXNoYm9hcmQtYWRtaW4tdG9rZW4tbGc0OHEiLCJrdWJlcm5ldGVzLmlvL3NlcnZpY2VhY2NvdW50L3NlcnZpY2UtYWNjb3VudC5uYW1lIjoiZGFzaGJvYXJkLWFkbWluIiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9zZXJ2aWNlLWFjY291bnQudWlkIjoiM2NmNjllNGUtMjQ1OC0xMWU5LTgxY2MtMDAwYzI5MWUzN2MyIiwic3ViIjoic3lzdGVtOnNlcnZpY2VhY2NvdW50Omt1YmUtc3lzdGVtOmRhc2hib2FyZC1hZG1pbiJ9.gMdqXvyP3ClIL0eo8061UnK8QbEgdAeVZV92GMxJlxhs8aK8c278e8yNWzx68LvySg1ciXDI7Pqlo9caUL2K8tC2BRvLvarbgvhPnFlRvYrm6bO1PdD2XSg60JTkPxX_AXRrQG2kAAf3C3cbTgKEPvoX5fwvXgGLWsJ1rX0vStSBCsLlSJkTmoDp9rdYD1AU-32lN1eNfFueIIY8tIpeP7_eYdfvwSXnsbqXxr9K7zD6Zu7QM1T1tG0X0-D0MHKNDGP_YQ7S2ANo3FDd7OUiitGQRA1H7cO_LF7M_BKtzotBVCEbOGjNmnaVuL4y5XXvP0JHtlQxpnBzAOU9V9-tRw
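Rather than scanning kubectl get secret for the right name, the token can be read straight from the service account's secret. A sketch, assuming v1.15 behaviour in which every service account gets an auto-generated token secret listed under .secrets; the function name is hypothetical, and KUBECTL lets you substitute another kubectl command.

```shell
#!/usr/bin/env bash
# Sketch: print the bearer token of a service account.
sa_token() {
  local sa="$1" ns="${2:-default}" k="${KUBECTL:-kubectl}" secret
  # v1.15 lists the auto-generated token secret under .secrets
  secret=$("$k" -n "$ns" get sa "$sa" -o jsonpath='{.secrets[0].name}') || return 1
  # .data.token is base64-encoded; decode it to get the bearer token
  "$k" -n "$ns" get secret "$secret" -o jsonpath='{.data.token}' | base64 -d
}
```

For the dashboard account: `sa_token dashboard-admin kube-system`.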

(4) Expose the port with a patch (alternatively, set type: NodePort in the manifest as shown earlier)

[root@master1 pki]# kubectl patch svc kubernetes-dashboard -p '{"spec":{"type":"NodePort"}}' -n kube-system

service/kubernetes-dashboard patched

[root@master1 pki]# kubectl get svc -n kube-system

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

kube-dns ClusterIP 10.96.0.10 <none> 53/UDP,53/TCP 49d

kubernetes-dashboard NodePort 10.99.54.66 <none> 443:32639/TCP 10m

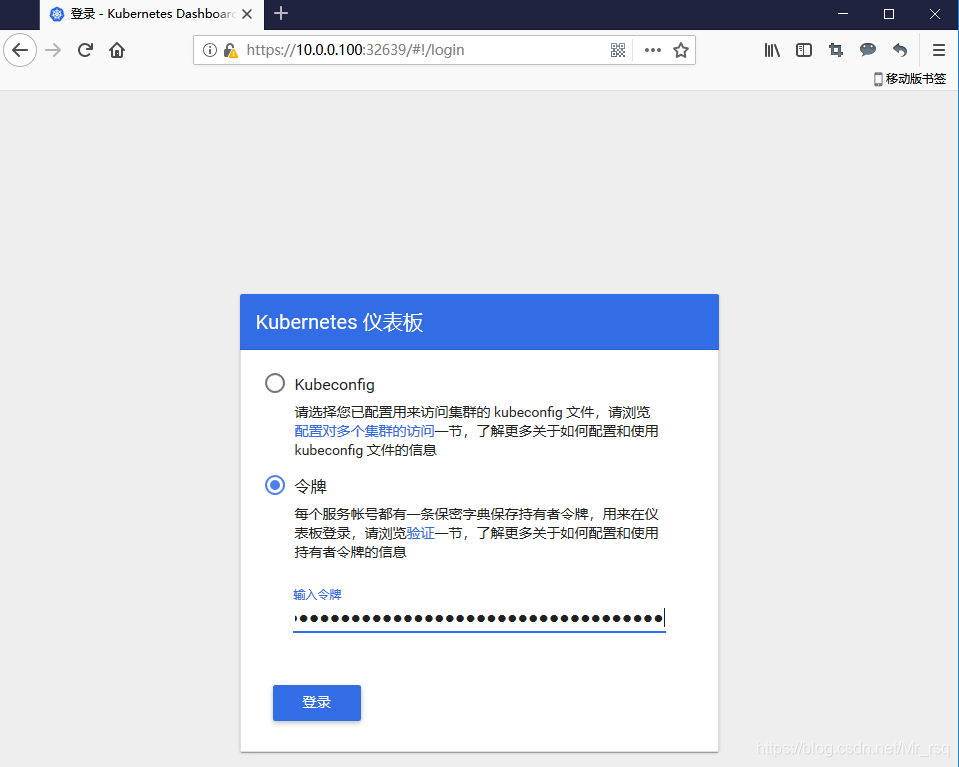

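(5) Open the dashboard in a browser and log in by pasting the token

2 Logging in with a kubeconfig file

Create a service account that only has permissions in the default namespace. Look its token up each time rather than copying it: the secret name has a random suffix, and in my setup the token changed after the machine restarted.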

[root@master1 pki]# kubectl create serviceaccount def-ns-admin -n default

serviceaccount/def-ns-admin created

[root@master1 pki]# kubectl create rolebinding def-ns-admin --clusterrole=admin --serviceaccount=default:def-ns-admin

rolebinding.rbac.authorization.k8s.io/def-ns-admin created

[root@master1 pki]# kubectl get secret

NAME TYPE DATA AGE

admin-token-bwrbg kubernetes.io/service-account-token 3 5d1h

def-ns-admin-token-xdvx5 kubernetes.io/service-account-token 3 2m9s

default-token-87nlt kubernetes.io/service-account-token 3 49d

tomcat-ingress-secret kubernetes.io/tls 2 21d

[root@master1 pki]# kubectl describe secret def-ns-admin-token-xdvx5

Name: def-ns-admin-token-xdvx5

Namespace: default

Labels: <none>

Annotations: kubernetes.io/service-account.name: def-ns-admin

kubernetes.io/service-account.uid: 928bbca1-245c-11e9-81cc-000c291e37c2

Type: kubernetes.io/service-account-token

Data

====

ca.crt: 1025 bytes

namespace: 7 bytes

token: eyJhbGciOiJSUzI1NiIsImtpZCI6IiJ9.eyJpc3MiOiJrdWJlcm5ldGVzL3NlcnZpY2VhY2NvdW50Iiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9uYW1lc3BhY2UiOiJkZWZhdWx0Iiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9zZWNyZXQubmFtZSI6ImRlZi1ucy1hZG1pbi10b2tlbi14ZHZ4NSIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VydmljZS1hY2NvdW50Lm5hbWUiOiJkZWYtbnMtYWRtaW4iLCJrdWJlcm5ldGVzLmlvL3NlcnZpY2VhY2NvdW50L3NlcnZpY2UtYWNjb3VudC51aWQiOiI5MjhiYmNhMS0yNDVjLTExZTktODFjYy0wMDBjMjkxZTM3YzIiLCJzdWIiOiJzeXN0ZW06c2VydmljZWFjY291bnQ6ZGVmYXVsdDpkZWYtbnMtYWRtaW4ifQ.EzUF13MElI8b-kuQNh_u1hGQpxgoffm4LdTVoeORKUBTADwqHEtW2arj76oZuI-wQyy5P0v5VvOoefr6h3NpIgbAze8Lqyrpg9wO0Crfi30IE1kZ2wUPYU9P_5inMxmCPLttppyPyc8mQKDkOOB1xFUmEebC63my-dG4CZljsd8zwNU6eXnhaThSUUn12UTvRsbSBLD-dvau1OY6YgDL6mgFl3cVqzCPd7ELpEyNYWCh3x5rcRfCcjcHGfUOrWjDzhgmH6sUiWb4gMHvSKgp-35rj5LXERfebse3OxSAXODJw9FhSn15VCmYcDmCJzMN83emFBwn0Y7bb11Y6M8CrQ

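This account has fewer permissions: after logging in with its token you can only work in the default namespace. To log in with a kubeconfig file instead, build one for this account: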

[root@master1 pki]# kubectl config set-cluster kubernetes --certificate-authority=./ca.crt --server="https://10.0.0.100:6443" --embed-certs=true --kubeconfig=/root/def-ns-admin.conf

Cluster "kubernetes" set.

[root@master1 pki]# kubectl config view --kubeconfig=/root/def-ns-admin.conf

apiVersion: v1
clusters:
- cluster:
    certificate-authority-data: DATA+OMITTED
    server: https://10.0.0.100:6443
  name: kubernetes
contexts: []
current-context: ""
kind: Config
preferences: {}
users: []

[root@master1 ~]# kubectl get secret

NAME TYPE DATA AGE

def-ns-admin-token-xdvx5 kubernetes.io/service-account-token 3 5d

[root@master1 ~]# kubectl describe secret def-ns-admin-token-xdvx5

Name: def-ns-admin-token-xdvx5

Namespace: default

Labels: <none>

Annotations: kubernetes.io/service-account.name: def-ns-admin

kubernetes.io/service-account.uid: 928bbca1-245c-11e9-81cc-000c291e37c2

Type: kubernetes.io/service-account-token

Data

====

ca.crt: 1025 bytes

namespace: 7 bytes

token: eyJhbGciOiJSUzI1NiIsImtpZCI6IiJ9.eyJpc3MiOiJrdWJlcm5ldGVzL3NlcnZpY2VhY2NvdW50Iiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9uYW1lc3BhY2UiOiJkZWZhdWx0Iiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9zZWNyZXQubmFtZSI6ImRlZi1ucy1hZG1pbi10b2tlbi14ZHZ4NSIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VydmljZS1hY2NvdW50Lm5hbWUiOiJkZWYtbnMtYWRtaW4iLCJrdWJlcm5ldGVzLmlvL3NlcnZpY2VhY2NvdW50L3NlcnZpY2UtYWNjb3VudC51aWQiOiI5MjhiYmNhMS0yNDVjLTExZTktODFjYy0wMDBjMjkxZTM3YzIiLCJzdWIiOiJzeXN0ZW06c2VydmljZWFjY291bnQ6ZGVmYXVsdDpkZWYtbnMtYWRtaW4ifQ.EzUF13MElI8b-kuQNh_u1hGQpxgoffm4LdTVoeORKUBTADwqHEtW2arj76oZuI-wQyy5P0v5VvOoefr6h3NpIgbAze8Lqyrpg9wO0Crfi30IE1kZ2wUPYU9P_5inMxmCPLttppyPyc8mQKDkOOB1xFUmEebC63my-dG4CZljsd8zwNU6eXnhaThSUUn12UTvRsbSBLD-dvau1OY6YgDL6mgFl3cVqzCPd7ELpEyNYWCh3x5rcRfCcjcHGfUOrWjDzhgmH6sUiWb4gMHvSKgp-35rj5LXERfebse3OxSAXODJw9FhSn15VCmYcDmCJzMN83emFBwn0Y7bb11Y6M8CrQ

[root@master1 pki]# kubectl config set-credentials def-ns-admin --token=eyJhbGciOiJSUzI1NiIsImtpZCI6IiJ9.eyJpc3MiOiJrdWJlcm5ldGVzL3NlcnZpY2VhY2NvdW50Iiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9uYW1lc3BhY2UiOiJkZWZhdWx0Iiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9zZWNyZXQubmFtZSI6ImRlZi1ucy1hZG1pbi10b2tlbi14ZHZ4NSIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VydmljZS1hY2NvdW50Lm5hbWUiOiJkZWYtbnMtYWRtaW4iLCJrdWJlcm5ldGVzLmlvL3NlcnZpY2VhY2NvdW50L3NlcnZpY2UtYWNjb3VudC51aWQiOiI5MjhiYmNhMS0yNDVjLTExZTktODFjYy0wMDBjMjkxZTM3YzIiLCJzdWIiOiJzeXN0ZW06c2VydmljZWFjY291bnQ6ZGVmYXVsdDpkZWYtbnMtYWRtaW4ifQ.EzUF13MElI8b-kuQNh_u1hGQpxgoffm4LdTVoeORKUBTADwqHEtW2arj76oZuI-wQyy5P0v5VvOoefr6h3NpIgbAze8Lqyrpg9wO0Crfi30IE1kZ2wUPYU9P_5inMxmCPLttppyPyc8mQKDkOOB1xFUmEebC63my-dG4CZljsd8zwNU6eXnhaThSUUn12UTvRsbSBLD-dvau1OY6YgDL6mgFl3cVqzCPd7ELpEyNYWCh3x5rcRfCcjcHGfUOrWjDzhgmH6sUiWb4gMHvSKgp-35rj5LXERfebse3OxSAXODJw9FhSn15VCmYcDmCJzMN83emFBwn0Y7bb11Y6M8CrQ --kubeconfig=/root/def-ns-admin.conf

User "def-ns-admin" set.

# Set the context

[root@master1 pki]# kubectl config set-context def-ns-admin@kubernetes --cluster=kubernetes --user=def-ns-admin --kubeconfig=/root/def-ns-admin.conf

Context "def-ns-admin@kubernetes" created.

# use-context

[root@master1 pki]# kubectl config use-context def-ns-admin@kubernetes --kubeconfig=/root/def-ns-admin.conf

Switched to context "def-ns-admin@kubernetes".

# View the conf file; it is now complete

[root@master1 pki]# kubectl config view --kubeconfig=/root/def-ns-admin.conf

apiVersion: v1
clusters:
- cluster:
    certificate-authority-data: DATA+OMITTED
    server: https://10.0.0.100:6443
  name: kubernetes
contexts:
- context:
    cluster: kubernetes
    user: def-ns-admin
  name: def-ns-admin@kubernetes
current-context: def-ns-admin@kubernetes
kind: Config
preferences: {}
users:
- name: def-ns-admin
  user:
    token: eyJhbGciOiJSUzI1NiIsImtpZCI6IiJ9.eyJpc3MiOiJrdWJlcm5ldGVzL3NlcnZpY2VhY2NvdW50Iiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9uYW1lc3BhY2UiOiJkZWZhdWx0Iiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9zZWNyZXQubmFtZSI6ImRlZi1ucy1hZG1pbi10b2tlbi14ZHZ4NSIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VydmljZS1hY2NvdW50Lm5hbWUiOiJkZWYtbnMtYWRtaW4iLCJrdWJlcm5ldGVzLmlvL3NlcnZpY2VhY2NvdW50L3NlcnZpY2UtYWNjb3VudC51aWQiOiI5MjhiYmNhMS0yNDVjLTExZTktODFjYy0wMDBjMjkxZTM3YzIiLCJzdWIiOiJzeXN0ZW06c2VydmljZWFjY291bnQ6ZGVmYXVsdDpkZWYtbnMtYWRtaW4ifQ.EzUF13MElI8b-kuQNh_u1hGQpxgoffm4LdTVoeORKUBTADwqHEtW2arj76oZuI-wQyy5P0v5VvOoefr6h3NpIgbAze8Lqyrpg9wO0Crfi30IE1kZ2wUPYU9P_5inMxmCPLttppyPyc8mQKDkOOB1xFUmEebC63my-dG4CZljsd8zwNU6eXnhaThSUUn12UTvRsbSBLD-dvau1OY6YgDL6mgFl3cVqzCPd7ELpEyNYWCh3x5rcRfCcjcHGfUOrWjDzhgmH6sUiWb4gMHvSKgp-35rj5LXERfebse3OxSAXODJw9FhSn15VCmYcDmCJzMN83emFBwn0Y7bb11Y6M8CrQ

Copy this conf file to your local machine and use it to log in.
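The kubectl config commands in this section only edit a YAML file. As a cross-check of what they produce, here is a sketch that writes the same structure directly, without kubectl. The function name `write_kubeconfig` is hypothetical. The cluster, user and context names are hard-coded to match this walkthrough, and `base64 -w0` assumes GNU coreutils.

```shell
#!/usr/bin/env bash
# Sketch: write the kubeconfig that the kubectl config set-cluster /
# set-credentials / set-context / use-context commands above produce.
# Names are hard-coded to match this walkthrough; base64 -w0 is GNU-only.
write_kubeconfig() {
  local ca_file=$1 server=$2 token=$3 out=$4
  local ca_data
  # embed the CA certificate inline, as --embed-certs=true does
  ca_data=$(base64 -w0 < "$ca_file")
  cat > "$out" <<EOF
apiVersion: v1
kind: Config
clusters:
- name: kubernetes
  cluster:
    certificate-authority-data: ${ca_data}
    server: ${server}
users:
- name: def-ns-admin
  user:
    token: ${token}
contexts:
- name: def-ns-admin@kubernetes
  context:
    cluster: kubernetes
    user: def-ns-admin
current-context: def-ns-admin@kubernetes
preferences: {}
EOF
  # the file holds a bearer token, so keep it readable by the owner only
  chmod 600 "$out"
}

# Usage on the master (kubeadm keeps the cluster CA in /etc/kubernetes/pki):
#   TOKEN=$(kubectl get secret def-ns-admin-token-xdvx5 -o jsonpath='{.data.token}' | base64 -d)
#   write_kubeconfig /etc/kubernetes/pki/ca.crt https://10.0.0.100:6443 "$TOKEN" /root/def-ns-admin.conf
#   kubectl --kubeconfig=/root/def-ns-admin.conf get pods
```

With only default-namespace rights, the same command with `-n kube-system` is expected to be refused.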

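Part 6: Volumes

Commonly used Kubernetes volume types include:

emptyDir, hostPath, nfs, pvc (persistentVolumeClaim), gitRepo (gitRepo is deprecated in newer Kubernetes releases)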

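1. emptyDir

An emptyDir volume is created empty when the Pod is assigned to a node, can be read and written by every container in the Pod, and is deleted when the Pod is removed from that node.

# Example: mounting an emptyDir volume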

apiVersion: v1
kind: Pod
metadata:
  name: test-pd
spec:
  containers:
  - image: gcr.io/google_containers/test-webserver
    name: test-container
    volumeMounts:
    - name: cache-volume
      mountPath: /cache
  volumes:
  - name: cache-volume
    emptyDir: {}
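The main use of emptyDir is sharing scratch data between containers of the same Pod. Below is a hypothetical two-container example. The names emptydir-share-demo, writer and reader, and the busybox commands, are illustrative. writer appends a timestamp to /cache/log.txt every 5 seconds, and reader follows the same file through its own mount of the volume.

```shell
#!/usr/bin/env bash
# Sketch: generate a Pod manifest whose two containers share one emptyDir.
# All names and commands here are illustrative, not from the original demo.
cat > pod-emptydir-share.yaml <<'EOF'
apiVersion: v1
kind: Pod
metadata:
  name: emptydir-share-demo
spec:
  containers:
  - name: writer
    image: busybox
    command: ["sh", "-c", "while true; do date >> /cache/log.txt; sleep 5; done"]
    volumeMounts:
    - name: cache-volume
      mountPath: /cache
  - name: reader
    image: busybox
    # touch first so tail -f does not fail if writer has not started yet
    command: ["sh", "-c", "touch /cache/log.txt; tail -f /cache/log.txt"]
    volumeMounts:
    - name: cache-volume
      mountPath: /cache
  volumes:
  - name: cache-volume
    emptyDir: {}
EOF

# Then, on the master:
#   kubectl apply -f pod-emptydir-share.yaml
#   kubectl logs emptydir-share-demo -c reader   # shows timestamps written by "writer"
```

Deleting the Pod also deletes the volume and log.txt, which is why emptyDir suits caches and scratch space rather than data that must persist.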

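2. hostPath

A hostPath volume mounts a file or directory from the node's own filesystem into the Pod. With type DirectoryOrCreate, the directory is created on the node if it does not exist yet.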

apiVersion: v1
kind: Pod
metadata:
  name: pod-vol-hostpath
  namespace: default
spec:
  containers:
  - name: busyboxvol
    image: busybox:v12
    # keep the container running; busybox's default sh exits at once without a TTY
    command: ["sh", "-c", "sleep 3600"]
    volumeMounts:
    - name: html
      mountPath: /data/
  volumes:
  - name: html
    hostPath:
      path: /home/data/vol
      type: DirectoryOrCreate
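Because hostPath data stays on the node that ran the Pod, one way to check the mount is to write a file from inside the container and look for it under /home/data/vol on that node. This is a sketch that assumes pod-vol-hostpath is Running (its container needs a long-running command) and that kubectl is configured on the master. The function name verify_hostpath and the file name hostpath-test.txt are hypothetical.

```shell
#!/usr/bin/env bash
# Sketch: confirm that data written in the Pod lands on the node's disk.
# Assumes pod-vol-hostpath is Running; function and file names are hypothetical.
verify_hostpath() {
  local pod=pod-vol-hostpath node
  # write through the container's mount point (/data/)
  kubectl exec "$pod" -- sh -c 'date > /data/hostpath-test.txt'
  # hostPath is node-local, so find out which node the Pod was scheduled on
  node=$(kubectl get pod "$pod" -o jsonpath='{.spec.nodeName}')
  echo "On node ${node}, run: cat /home/data/vol/hostpath-test.txt"
}
```

If the Pod is later scheduled onto a different node, it sees that node's /home/data/vol instead. For that reason hostPath is a poor fit for data that has to follow the Pod.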

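3. nfs: one machine acts as the NFS server and shares a directory with the others.

a. Install nfs-utils on every machine. This includes the server and all nodes, because kubelet mounts the share on whichever node runs the Pod: yum install nfs-utils -y

b. On the server (here the master, 192.168.33.52), create the shared directory: mkdir -p /data/volumes

c. Configure the export with vim /etc/exports and add:

/data/volumes 192.168.0.0/16(rw,no_root_squash)

Then start the NFS service and apply the export: systemctl start nfs-server && systemctl enable nfs-server && exportfs -arv

d. On the other nodes, test-mount the share. This assumes the hostname master resolves, for example through /etc/hosts: mount -t nfs master:/data/volumes /mnt

Running mount afterwards should list the newly mounted share: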

master:/data/volumes on /mnt type nfs4 (rw,relatime,vers=4.1,rsize=524288,wsize=524288,namlen=255,hard,proto=tcp,timeo=600,retrans=2,sec=sys,clientaddr=192.168.33.35,local_lock=none,addr=192.168.33.52)
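Steps a to c on the server can be collected into one script. The following is a sketch, assuming CentOS 7 (where nfs-utils ships the nfs-server systemd unit) and a root shell on the master. The function names nfs_export_line and setup_nfs_server are hypothetical.

```shell
#!/usr/bin/env bash
# Sketch: NFS server setup for the share used in this section.
# Assumes CentOS 7 and root; function names are hypothetical.

# Print one /etc/exports line: <dir> <clients>(options)
nfs_export_line() {
  printf '%s %s(rw,no_root_squash)\n' "$1" "$2"
}

setup_nfs_server() {
  local dir=/data/volumes clients=192.168.0.0/16
  yum install -y nfs-utils
  mkdir -p "$dir"
  # append the export only if this directory is not exported yet
  grep -qs "^$dir " /etc/exports || nfs_export_line "$dir" "$clients" >> /etc/exports
  systemctl start nfs-server
  systemctl enable nfs-server
  # re-read /etc/exports and re-export everything, listing what was exported
  exportfs -arv
}

# From any node, the export list can then be checked with:
#   showmount -e 192.168.33.52
```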

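In a Pod spec, an nfs volume is declared as follows. volumeMounts belongs to the container, and volumes belongs to the Pod spec. The server address and path in this fragment come from a different environment; in the setup above they would be 192.168.33.52 and /data/volumes.

# Mount point inside the container
volumeMounts:
- name: redis-persistent-storage
  mountPath: /data
volumes:
# The directory exported by the NFS server
- name: redis-persistent-storage
  nfs:
    path: /k8s-nfs/redis/data
    server: 192.168.8.150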
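Here is a complete Pod built around the fragment above, pointing at the share exported from the master in this setup (192.168.33.52:/data/volumes). This is a sketch. The Pod name pod-vol-nfs and the redis:alpine image are illustrative choices, and the official redis image keeps its data files in /data.

```shell
#!/usr/bin/env bash
# Sketch: generate a Pod manifest that mounts the master's NFS export.
# Pod name and image are illustrative; server/path follow this setup.
cat > pod-vol-nfs.yaml <<'EOF'
apiVersion: v1
kind: Pod
metadata:
  name: pod-vol-nfs
  namespace: default
spec:
  containers:
  - name: redis
    image: redis:alpine
    volumeMounts:
    - name: redis-persistent-storage
      mountPath: /data
  volumes:
  - name: redis-persistent-storage
    nfs:
      server: 192.168.33.52
      path: /data/volumes
EOF

# Then:
#   kubectl apply -f pod-vol-nfs.yaml
#   kubectl exec pod-vol-nfs -- redis-cli set mykey hello
#   kubectl exec pod-vol-nfs -- redis-cli save
#   ls /data/volumes     # on the master: dump.rdb should appear here
```

Because the data lives on the NFS server, it survives deleting the Pod and is visible again when the Pod is recreated on any node that has nfs-utils installed.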

4. pvc: deploying it is more involved, so it is not covered here for now.

Reference: https://blog.csdn.net/shenhonglei1234/article/details/80803489