| Machine | OS | IP | Installed components | Versions |
|---|---|---|---|---|
| psql1 | CentOS 7.3 | 10.10.0.1 | postgresql/etcd/patroni | 12.1/3.3.11/1.6.1 |
| psql2 | CentOS 7.3 | 10.10.0.2 | postgresql/etcd/patroni | 12.1/3.3.11/1.6.1 |
| psql3 | CentOS 7.3 | 10.10.0.3 | postgresql/etcd/patroni | 12.1/3.3.11/1.6.1 |
| haproxy1 | CentOS 7.3 | 10.10.0.4 | haproxy/keepalived | 1.5.18/2.0.20 |
| haproxy2 | CentOS 7.3 | 10.10.0.5 | haproxy/keepalived | 1.5.18/2.0.20 |

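All files used in this article:

Link: https://pan.baidu.com/s/1lwaX_DuTcJwegLuJFZTH5w

Extraction code: 5615

1. Base environment (all nodes)

1.1 Set the hostname and hosts entries

Run the matching hostnamectl command on each node, then append the hosts entries on all of them.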

hostnamectl set-hostname psql1

hostnamectl set-hostname psql2

hostnamectl set-hostname psql3

hostnamectl set-hostname haproxy1

hostnamectl set-hostname haproxy2

...

cat >> /etc/hosts <<EOF

10.10.0.1 psql1

10.10.0.2 psql2

10.10.0.3 psql3

10.10.0.4 haproxy1

10.10.0.5 haproxy2

EOF
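Because `cat >> /etc/hosts` appends unconditionally, running the step twice leaves duplicate entries. The following is a minimal sketch of an idempotent alternative; the function names `add_host_entry` and `register_cluster_hosts` are hypothetical helpers, not part of any tool used in this article.

```shell
#!/bin/bash
# add_host_entry FILE IP NAME
# Appends "IP NAME" to FILE unless FILE already mentions NAME as a whole word.
add_host_entry() {
    local file=$1 ip=$2 name=$3
    if ! grep -qw -- "$name" "$file" 2>/dev/null; then
        printf '%s %s\n' "$ip" "$name" >> "$file"
    fi
}

# register_cluster_hosts FILE
# Adds the five nodes from the table at the top of this article.
register_cluster_hosts() {
    local file=$1
    add_host_entry "$file" 10.10.0.1 psql1
    add_host_entry "$file" 10.10.0.2 psql2
    add_host_entry "$file" 10.10.0.3 psql3
    add_host_entry "$file" 10.10.0.4 haproxy1
    add_host_entry "$file" 10.10.0.5 haproxy2
}

# Usage (as root): register_cluster_hosts /etc/hosts
```

Calling `register_cluster_hosts /etc/hosts` a second time changes nothing, so the step is safe to repeat on every node.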

1.2 Raise the maximum number of open files per process

echo "* soft nofile 655350" >> /etc/security/limits.conf

echo "* hard nofile 655350" >> /etc/security/limits.conf

1.3 Disable the firewall and SELinux

systemctl stop firewalld && systemctl disable firewalld

setenforce 0

sed -i 's/^SELINUX=.*/SELINUX=disabled/' /etc/selinux/config

1.4 Configure the yum repository

This sets up an offline yum repository. On hosts with internet access, install directly with yum instead.

unzip pgsql_yum.zip && rm -rf pgsql_yum.zip && mv pgsql_yum /

mkdir -p /etc/yum.repos.d/yum.bak && mv /etc/yum.repos.d/*.repo /etc/yum.repos.d/yum.bak

cat > /etc/yum.repos.d/local.repo <<EOF

[local]

name=local

enabled=1

baseurl=file:///pgsql_yum/

gpgcheck=0

EOF

yum clean all && yum makecache

2. PostgreSQL (psql nodes)

2.1 Install dependencies

yum -y install readline readline-devel zlib zlib-devel vim

2.2 Install PostgreSQL

Unpack and build on a disk with plenty of free space.

tar -xvf postgresql-12.1.tar.gz && rm -rf postgresql-12.1.tar.gz && cd postgresql-12.1

./configure --prefix=/usr/local/pgsql

make && make install

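3. Etcd (etcd nodes)

3.1 Install chrony to synchronize the cluster's system time

Installing and configuring it on every node of the cluster is recommended.

- Install chrony

yum -y install chrony

- Start it and enable it at boot

systemctl start chronyd && systemctl enable chronyd && systemctl status chronyd

- Synchronize the system clocks, using the psql1 machine as the time server

vim /etc/chrony.conf

- Comment out the existing `server` entries

- Add the time server, i.e. the psql1 machine

server 10.10.0.1 iburst

- Force a time synchronization

chronyc -a makestep

- Restart the service

systemctl daemon-reload && systemctl restart chronyd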

3.2 Install etcd

yum -y install etcd

3.3 Configure etcd

vim /etc/etcd/etcd.conf

- etcd1(10.10.0.1)

ETCD_DATA_DIR="/var/lib/etcd/etcd1.etcd"

ETCD_LISTEN_PEER_URLS="http://10.10.0.1:2380"

ETCD_LISTEN_CLIENT_URLS="http://10.10.0.1:2379,http://127.0.0.1:2379"

ETCD_NAME="etcd1"

ETCD_INITIAL_ADVERTISE_PEER_URLS="http://10.10.0.1:2380"

ETCD_ADVERTISE_CLIENT_URLS="http://10.10.0.1:2379"

ETCD_INITIAL_CLUSTER="etcd1=http://10.10.0.1:2380,etcd2=http://10.10.0.2:2380,etcd3=http://10.10.0.3:2380"

ETCD_INITIAL_CLUSTER_TOKEN="etcd-cluster"

ETCD_INITIAL_CLUSTER_STATE="new"

- etcd2(10.10.0.2)

ETCD_DATA_DIR="/var/lib/etcd/etcd2.etcd"

ETCD_LISTEN_PEER_URLS="http://10.10.0.2:2380"

ETCD_LISTEN_CLIENT_URLS="http://10.10.0.2:2379,http://127.0.0.1:2379"

ETCD_NAME="etcd2"

ETCD_INITIAL_ADVERTISE_PEER_URLS="http://10.10.0.2:2380"

ETCD_ADVERTISE_CLIENT_URLS="http://10.10.0.2:2379"

ETCD_INITIAL_CLUSTER="etcd1=http://10.10.0.1:2380,etcd2=http://10.10.0.2:2380,etcd3=http://10.10.0.3:2380"

ETCD_INITIAL_CLUSTER_TOKEN="etcd-cluster"

ETCD_INITIAL_CLUSTER_STATE="new"

- etcd3(10.10.0.3)

ETCD_DATA_DIR="/var/lib/etcd/etcd3.etcd"

ETCD_LISTEN_PEER_URLS="http://10.10.0.3:2380"

ETCD_LISTEN_CLIENT_URLS="http://10.10.0.3:2379,http://127.0.0.1:2379"

ETCD_NAME="etcd3"

ETCD_INITIAL_ADVERTISE_PEER_URLS="http://10.10.0.3:2380"

ETCD_ADVERTISE_CLIENT_URLS="http://10.10.0.3:2379"

ETCD_INITIAL_CLUSTER="etcd1=http://10.10.0.1:2380,etcd2=http://10.10.0.2:2380,etcd3=http://10.10.0.3:2380"

ETCD_INITIAL_CLUSTER_TOKEN="etcd-cluster"

ETCD_INITIAL_CLUSTER_STATE="new"
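The three files above differ only in the member name, the data directory, and the node's own IP. As a sketch of that pattern, the hypothetical helper `render_etcd_conf` below prints the file body for one member, assuming (as in this article) that member N has the address 10.10.0.N.

```shell
#!/bin/bash
# render_etcd_conf INDEX
# Prints the etcd.conf body for member etcd<INDEX> at 10.10.0.<INDEX>.
# The member list in ETCD_INITIAL_CLUSTER is the same on every node.
render_etcd_conf() {
    local i=$1
    local ip="10.10.0.$i" name="etcd$i"
    cat <<EOF
ETCD_DATA_DIR="/var/lib/etcd/${name}.etcd"
ETCD_LISTEN_PEER_URLS="http://${ip}:2380"
ETCD_LISTEN_CLIENT_URLS="http://${ip}:2379,http://127.0.0.1:2379"
ETCD_NAME="${name}"
ETCD_INITIAL_ADVERTISE_PEER_URLS="http://${ip}:2380"
ETCD_ADVERTISE_CLIENT_URLS="http://${ip}:2379"
ETCD_INITIAL_CLUSTER="etcd1=http://10.10.0.1:2380,etcd2=http://10.10.0.2:2380,etcd3=http://10.10.0.3:2380"
ETCD_INITIAL_CLUSTER_TOKEN="etcd-cluster"
ETCD_INITIAL_CLUSTER_STATE="new"
EOF
}

# Usage on psql2 (as root): render_etcd_conf 2 > /etc/etcd/etcd.conf
```

Generating the file this way avoids copy-and-paste slips such as leaving another node's IP in a listen URL.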

3.4 Modify etcd.service

vim /usr/lib/systemd/system/etcd.service

Delete the existing content and replace it with the following configuration:

[Unit]
Description=Etcd Server
After=network.target
After=network-online.target
Wants=network-online.target

[Service]
Type=notify
WorkingDirectory=/var/lib/etcd/
EnvironmentFile=/etc/etcd/etcd.conf
User=etcd
# set GOMAXPROCS to number of processors
ExecStart=/bin/bash -c "GOMAXPROCS=$(nproc) /usr/bin/etcd \
    --name=\"${ETCD_NAME}\" \
    --data-dir=\"${ETCD_DATA_DIR}\" \
    --listen-peer-urls=\"${ETCD_LISTEN_PEER_URLS}\" \
    --listen-client-urls=\"${ETCD_LISTEN_CLIENT_URLS}\" \
    --initial-advertise-peer-urls=\"${ETCD_INITIAL_ADVERTISE_PEER_URLS}\" \
    --advertise-client-urls=\"${ETCD_ADVERTISE_CLIENT_URLS}\" \
    --initial-cluster=\"${ETCD_INITIAL_CLUSTER}\" \
    --initial-cluster-token=\"${ETCD_INITIAL_CLUSTER_TOKEN}\" \
    --initial-cluster-state=\"${ETCD_INITIAL_CLUSTER_STATE}\""
Restart=on-failure
LimitNOFILE=65536

[Install]
WantedBy=multi-user.target

3.5 Start etcd

systemctl daemon-reload && systemctl enable etcd && systemctl start etcd

3.6 Verify etcd

- Check the cluster state from any node

etcdctl cluster-health

etcdctl member list
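When reading the health output it helps to know how many members may fail before the cluster stops accepting writes. etcd is built on the Raft consensus protocol, which needs a majority of members. A small sketch of that arithmetic follows; the function names are illustrative and are not etcdctl commands.

```shell
#!/bin/bash
# quorum N: number of members that must be healthy in an N-member cluster
quorum() {
    echo $(( $1 / 2 + 1 ))
}

# tolerated_failures N: number of members that may fail while quorum holds
tolerated_failures() {
    echo $(( $1 - ($1 / 2 + 1) ))
}

# Usage: tolerated_failures 3
```

For the three-member cluster in this article the quorum is 2, so one member can be down. A fourth member would not raise that tolerance.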

4. Patroni (patroni nodes)

4.1 Install setuptools

unzip setuptools-42.0.2.zip && rm -rf setuptools-42.0.2.zip && cd setuptools-42.0.2

python setup.py install

4.2 Install pip

tar -xvf pip-19.3.1.tar.gz && rm -rf pip-19.3.1.tar.gz && cd pip-19.3.1

python setup.py install

- Check the pip version

pip -V

4.3 Install patroni

- If the installation reports missing dependencies, download them from PyPI and install them

https://pypi.org/

yum -y install python-devel

pip install *.whl

pip install psutil-5.6.7.tar.gz

pip install cdiff-1.0.tar.gz

pip install python-etcd-0.4.5.tar.gz

pip install PyYAML-5.3.tar.gz

pip install prettytable-0.7.2.tar.gz

pip install patroni-1.6.1.tar.gz

4.4 Check the patroni version

patronictl version

4.5 Configure patroni

4.5.1 Configuration for the psql1 node

- Create the configuration directory

mkdir -p /data/patroni/conf

- Copy the required files

unzip patroni.zip

cp -r patroni /data/patroni/conf/

cp /data/patroni/conf/patroni/postgres0.yml /data/patroni/conf/

- Edit the configuration file

vim /data/patroni/conf/postgres0.yml

scope: batman
#namespace: /service/
name: postgresql0

restapi:
  listen: 10.10.0.1:8008
  connect_address: 10.10.0.1:8008
  # certfile: /etc/ssl/certs/ssl-cert-snakeoil.pem
  # keyfile: /etc/ssl/private/ssl-cert-snakeoil.key
  # authentication:
  #   username: username
  #   password: password

# ctl:
#   insecure: false # Allow connections to SSL sites without certs
#   certfile: /etc/ssl/certs/ssl-cert-snakeoil.pem
#   cacert: /etc/ssl/certs/ssl-cacert-snakeoil.pem

etcd:
  host: 10.10.0.1:2379

bootstrap:
  # this section will be written into Etcd:/<namespace>/<scope>/config after initializing new cluster
  # and all other cluster members will use it as a `global configuration`
  dcs:
    ttl: 30
    loop_wait: 10
    retry_timeout: 10
    maximum_lag_on_failover: 1048576
    # master_start_timeout: 300
    synchronous_mode: false
    #standby_cluster:
      #host: 127.0.0.1
      #port: 1111
      #primary_slot_name: patroni
    postgresql:
      use_pg_rewind: true
      use_slots: true
      parameters:
        wal_level: logical
        hot_standby: "on"
        max_connections: 5000
        wal_keep_segments: 1000
        max_wal_senders: 10
        max_replication_slots: 10
        wal_log_hints: "on"
        archive_mode: "on"
        archive_timeout: 1800s
        archive_command: mkdir -p ../wal_archive && test ! -f ../wal_archive/%f && cp %p ../wal_archive/%f
      recovery_conf:
        restore_command: cp ../wal_archive/%f %p

  # some desired options for 'initdb'
  initdb: # Note: It needs to be a list (some options need values, others are switches)
  - encoding: UTF8
  - data-checksums

  pg_hba: # Add following lines to pg_hba.conf after running 'initdb'
  # For kerberos gss based connectivity (discard @.*$)
  #- host replication replicator 127.0.0.1/32 gss include_realm=0
  #- host all all 0.0.0.0/0 gss include_realm=0
  - host replication replicator 0.0.0.0/0 md5
  - host all admin 0.0.0.0/0 md5
  - host all all 0.0.0.0/0 md5

  # Additional script to be launched after initial cluster creation (will be passed the connection URL as parameter)
  # post_init: /usr/local/bin/setup_cluster.sh

  # Some additional users which need to be created after initializing new cluster
  users:
    admin:
      password: postgres
      options:
        - createrole
        - createdb
    replicator:
      password: replicator
      options:
        - replication

postgresql:
  listen: 0.0.0.0:5432
  connect_address: 10.10.0.1:5432
  data_dir: /data/postgres
  bin_dir: /usr/local/pgsql/bin
  # config_dir:
  # pgpass: /tmp/pgpass0
  authentication:
    replication:
      username: replicator
      password: replicator
    superuser:
      username: admin
      password: postgres
    # rewind: # Has no effect on postgres 10 and lower
    #   username: rewind_user
    #   password: rewind_password
  # Server side kerberos spn
  # krbsrvname: postgres
  parameters:
    # Fully qualified kerberos ticket file for the running user
    # same as KRB5CCNAME used by the GSS
    # krb_server_keyfile: /var/spool/keytabs/postgres
    unix_socket_directories: '.'

#watchdog:
#  mode: automatic # Allowed values: off, automatic, required
#  device: /dev/watchdog
#  safety_margin: 5

tags:
  nofailover: false
  noloadbalance: false
  clonefrom: false
  nosync: false

4.5.2 Configuration for the psql2 node

- Create the configuration directory

mkdir -p /data/patroni/conf

- Copy the required files

unzip patroni.zip

cp -r patroni /data/patroni/conf/

cp /data/patroni/conf/patroni/postgres1.yml /data/patroni/conf/

- Edit the configuration file

vim /data/patroni/conf/postgres1.yml

scope: batman
#namespace: /service/
name: postgresql1

restapi:
  listen: 10.10.0.2:8008
  connect_address: 10.10.0.2:8008
  # certfile: /etc/ssl/certs/ssl-cert-snakeoil.pem
  # keyfile: /etc/ssl/private/ssl-cert-snakeoil.key
  # authentication:
  #   username: username
  #   password: password

# ctl:
#   insecure: false # Allow connections to SSL sites without certs
#   certfile: /etc/ssl/certs/ssl-cert-snakeoil.pem
#   cacert: /etc/ssl/certs/ssl-cacert-snakeoil.pem

etcd:
  host: 10.10.0.2:2379

bootstrap:
  # this section will be written into Etcd:/<namespace>/<scope>/config after initializing new cluster
  # and all other cluster members will use it as a `global configuration`
  dcs:
    ttl: 30
    loop_wait: 10
    retry_timeout: 10
    maximum_lag_on_failover: 1048576
    # master_start_timeout: 300
    synchronous_mode: false
    #standby_cluster:
      #host: 127.0.0.1
      #port: 1111
      #primary_slot_name: patroni
    postgresql:
      use_pg_rewind: true
      use_slots: true
      parameters:
        wal_level: logical
        max_connections: 5000
        hot_standby: "on"
        wal_keep_segments: 1000
        max_wal_senders: 10
        max_replication_slots: 10
        wal_log_hints: "on"
        archive_mode: "on"
        archive_timeout: 1800s
        archive_command: mkdir -p ../wal_archive && test ! -f ../wal_archive/%f && cp %p ../wal_archive/%f
      recovery_conf:
        restore_command: cp ../wal_archive/%f %p

  # some desired options for 'initdb'
  initdb: # Note: It needs to be a list (some options need values, others are switches)
  - encoding: UTF8
  - data-checksums

  pg_hba: # Add following lines to pg_hba.conf after running 'initdb'
  # For kerberos gss based connectivity (discard @.*$)
  #- host replication replicator 127.0.0.1/32 gss include_realm=0
  #- host all all 0.0.0.0/0 gss include_realm=0
  - host replication replicator 0.0.0.0/0 md5
  - host all admin 0.0.0.0/0 md5
  - host all all 0.0.0.0/0 md5

  # Additional script to be launched after initial cluster creation (will be passed the connection URL as parameter)
  # post_init: /usr/local/bin/setup_cluster.sh

  # Some additional users which need to be created after initializing new cluster
  users:
    admin:
      password: postgres
      options:
        - createrole
        - createdb
    replicator:
      password: replicator
      options:
        - replication

postgresql:
  listen: 0.0.0.0:5432
  connect_address: 10.10.0.2:5432
  data_dir: /data/postgres
  bin_dir: /usr/local/pgsql/bin
  # config_dir:
  # pgpass: /tmp/pgpass0
  authentication:
    replication:
      username: replicator
      password: replicator
    superuser:
      username: admin
      password: postgres
    # rewind: # Has no effect on postgres 10 and lower
    #   username: rewind_user
    #   password: rewind_password
  # Server side kerberos spn
  # krbsrvname: postgres
  parameters:
    # Fully qualified kerberos ticket file for the running user
    # same as KRB5CCNAME used by the GSS
    # krb_server_keyfile: /var/spool/keytabs/postgres
    unix_socket_directories: '.'

#watchdog:
#  mode: automatic # Allowed values: off, automatic, required
#  device: /dev/watchdog
#  safety_margin: 5

tags:
  nofailover: false
  noloadbalance: false
  clonefrom: false
  nosync: false

4.5.3 Configuration for the psql3 node

- Create the configuration directory

mkdir -p /data/patroni/conf

- Copy the required files

unzip patroni.zip

cp -r patroni /data/patroni/conf/

cp /data/patroni/conf/patroni/postgres2.yml /data/patroni/conf/

- Edit the configuration file

vim /data/patroni/conf/postgres2.yml

scope: batman
#namespace: /service/
name: postgresql2

restapi:
  listen: 10.10.0.3:8008
  connect_address: 10.10.0.3:8008
  # certfile: /etc/ssl/certs/ssl-cert-snakeoil.pem
  # keyfile: /etc/ssl/private/ssl-cert-snakeoil.key
  # authentication:
  #   username: username
  #   password: password

# ctl:
#   insecure: false # Allow connections to SSL sites without certs
#   certfile: /etc/ssl/certs/ssl-cert-snakeoil.pem
#   cacert: /etc/ssl/certs/ssl-cacert-snakeoil.pem

etcd:
  host: 10.10.0.3:2379

bootstrap:
  # this section will be written into Etcd:/<namespace>/<scope>/config after initializing new cluster
  # and all other cluster members will use it as a `global configuration`
  dcs:
    ttl: 30
    loop_wait: 10
    retry_timeout: 10
    maximum_lag_on_failover: 1048576
    # master_start_timeout: 300
    synchronous_mode: false
    #standby_cluster:
      #host: 127.0.0.1
      #port: 1111
      #primary_slot_name: patroni
    postgresql:
      use_pg_rewind: true
      use_slots: true
      parameters:
        wal_level: logical
        max_connections: 5000
        hot_standby: "on"
        wal_keep_segments: 1000
        max_wal_senders: 10
        max_replication_slots: 10
        wal_log_hints: "on"
        archive_mode: "on"
        archive_timeout: 1800s
        archive_command: mkdir -p ../wal_archive && test ! -f ../wal_archive/%f && cp %p ../wal_archive/%f
      recovery_conf:
        restore_command: cp ../wal_archive/%f %p

  # some desired options for 'initdb'
  initdb: # Note: It needs to be a list (some options need values, others are switches)
  - encoding: UTF8
  - data-checksums

  pg_hba: # Add following lines to pg_hba.conf after running 'initdb'
  # For kerberos gss based connectivity (discard @.*$)
  #- host replication replicator 127.0.0.1/32 gss include_realm=0
  #- host all all 0.0.0.0/0 gss include_realm=0
  - host replication replicator 0.0.0.0/0 md5
  - host all admin 0.0.0.0/0 md5
  - host all all 0.0.0.0/0 md5

  # Additional script to be launched after initial cluster creation (will be passed the connection URL as parameter)
  # post_init: /usr/local/bin/setup_cluster.sh

  # Some additional users which need to be created after initializing new cluster
  users:
    admin:
      password: postgres
      options:
        - createrole
        - createdb
    replicator:
      password: replicator
      options:
        - replication

postgresql:
  listen: 0.0.0.0:5432
  connect_address: 10.10.0.3:5432
  data_dir: /data/postgres
  bin_dir: /usr/local/pgsql/bin
  # config_dir:
  # pgpass: /tmp/pgpass0
  authentication:
    replication:
      username: replicator
      password: replicator
    superuser:
      username: admin
      password: postgres
    # rewind: # Has no effect on postgres 10 and lower
    #   username: rewind_user
    #   password: rewind_password
  # Server side kerberos spn
  # krbsrvname: postgres
  parameters:
    # Fully qualified kerberos ticket file for the running user
    # same as KRB5CCNAME used by the GSS
    # krb_server_keyfile: /var/spool/keytabs/postgres
    unix_socket_directories: '.'

#watchdog:
#  mode: automatic # Allowed values: off, automatic, required
#  device: /dev/watchdog
#  safety_margin: 5

tags:
  nofailover: false
  noloadbalance: false
  clonefrom: false
  nosync: false
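The three configuration files differ only in `name` and in the node's own IP (REST API, etcd host, and PostgreSQL `connect_address`). As a sketch, the psql2 and psql3 files could be derived from the psql1 file with sed; `derive_patroni_conf` is a hypothetical helper and assumes node N has the address 10.10.0.N.

```shell
#!/bin/bash
# derive_patroni_conf SRC INDEX
# Prints SRC (the psql1 config) rewritten for node INDEX (2 or 3):
#   name: postgresql0  ->  name: postgresql<INDEX-1>
#   10.10.0.1:<port>   ->  10.10.0.<INDEX>:<port>
# "listen: 0.0.0.0:5432" is left alone because it does not contain 10.10.0.1.
derive_patroni_conf() {
    local src=$1 i=$2
    sed -e "s/^name: postgresql0\$/name: postgresql$((i - 1))/" \
        -e "s/10\.10\.0\.1:/10.10.0.$i:/g" "$src"
}

# Usage on psql2:
#   derive_patroni_conf postgres0.yml 2 > /data/patroni/conf/postgres1.yml
```

A quick `diff` between the source and the derived file should show exactly five changed lines: the name and four addresses.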

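4.6 Set directory permissions

- Note the `data_dir` value in the yml configuration above. The postgres user must have write permission on that directory; create it if it does not exist. Run the following on every patroni node: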

groupadd postgres

useradd -g postgres postgres

chown -R postgres /usr/local/pgsql

mkdir -p /data/postgres

chown -Rf postgres:postgres /data/postgres

chmod 700 /data/postgres

4.7 Start patroni

On the psql1 node:

chown -Rf postgres:postgres /data/patroni/conf

- Create the service file, adjusting the executable path and configuration file as needed

cat > /etc/systemd/system/patroni.service <<EOF

[Unit]

Description=Runners to orchestrate a high-availability PostgreSQL

After=network.target

[Service]

Type=simple

User=postgres

Group=postgres

ExecStart=/usr/bin/patroni /data/patroni/conf/postgres0.yml

KillMode=process

TimeoutSec=30

Restart=no

[Install]

WantedBy=multi-user.target

EOF

- Start patroni to initialize the database

systemctl daemon-reload && systemctl start patroni && systemctl enable patroni

- Switch to the postgres user and check that patroni is managing the database

su postgres

/usr/local/pgsql/bin/psql -h 127.0.0.1 -U admin postgres

On the psql2 node:

chown -Rf postgres:postgres /data/patroni/conf

- Create the service file, adjusting the executable path and configuration file as needed

cat > /etc/systemd/system/patroni.service <<EOF

[Unit]

Description=Runners to orchestrate a high-availability PostgreSQL

After=network.target

[Service]

Type=simple

User=postgres

Group=postgres

ExecStart=/usr/bin/patroni /data/patroni/conf/postgres1.yml

KillMode=process

TimeoutSec=30

Restart=no

[Install]

WantedBy=multi-user.target

EOF

- Start patroni to initialize the database

systemctl daemon-reload && systemctl start patroni && systemctl enable patroni

- Switch to the postgres user and check that patroni is managing the database

su postgres

/usr/local/pgsql/bin/psql -h 127.0.0.1 -U admin postgres

On the psql3 node:

chown -Rf postgres:postgres /data/patroni/conf

- Create the service file, adjusting the executable path and configuration file as needed

cat > /etc/systemd/system/patroni.service <<EOF

[Unit]

Description=Runners to orchestrate a high-availability PostgreSQL

After=network.target

[Service]

Type=simple

User=postgres

Group=postgres

ExecStart=/usr/bin/patroni /data/patroni/conf/postgres2.yml

KillMode=process

TimeoutSec=30

Restart=no

[Install]

WantedBy=multi-user.target

EOF

- Start patroni to initialize the database

systemctl daemon-reload && systemctl start patroni && systemctl enable patroni

- Switch to the postgres user and check that patroni is managing the database

su postgres

/usr/local/pgsql/bin/psql -h 127.0.0.1 -U admin postgres

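4.8 View the cluster

- Run on any node, passing that node's own configuration file (postgres0.yml on psql1, postgres1.yml on psql2, postgres2.yml on psql3)

patronictl -c /data/patroni/conf/postgres0.yml list

- To switch the master, run the following command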

patronictl -c /data/patroni/conf/postgres0.yml switchover

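5. HAProxy (HAProxy nodes)

5.1 Install haproxy

The configuration is identical on every node.

yum -y install haproxy

5.2 Modify the configuration file

- Back up the original configuration file

cp -r /etc/haproxy/haproxy.cfg /etc/haproxy/haproxy.cfg_bak

- Replace the configuration file with the new one below, adjusting the IPs, ports, and the statistics page username and password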

cat > /etc/haproxy/haproxy.cfg <<EOF
#---------------------------------------------------------------------
# Global settings
global
    # log syntax: log <address> <facility> [<max_level>]
    # Global log configuration: the log keyword selects a facility of the syslog
    # service on 127.0.0.1 and the level to record, for example local0 at level info
    # log 127.0.0.1 local0 info
    log 127.0.0.1 local1 notice
    chroot /var/lib/haproxy
    pidfile /var/run/haproxy.pid
    # Maximum number of connections per haproxy process. Each connection has a client
    # side and a server side, so one process can hold up to twice this many TCP sessions.
    maxconn 4096
    # User and group
    user haproxy
    group haproxy
    # Run as a daemon
    daemon
    # turn on stats unix socket
    stats socket /var/lib/haproxy/stats
#---------------------------------------------------------------------
# Defaults
defaults
    # mode syntax: mode {http|tcp|health}. http is layer 7, tcp is layer 4,
    # health is a health check that returns OK
    mode tcp
    # Log errors to the local3 facility of the syslog service on 127.0.0.1
    log 127.0.0.1 local3 err
    # if you set mode to http, then you must change tcplog into httplog
    option tcplog
    # With this option, null connections are not logged. A null connection is one made
    # by an upstream load balancer or monitoring system that periodically connects,
    # fetches a fixed page, or probes whether the port is listening, just to see if the
    # service is alive. The official documentation advises against this option when no
    # load balancer sits upstream, because malicious scans from the internet would then
    # go unlogged.
    option dontlognull
    # Number of times a failed connection to a backend server is retried
    retries 3
    # When cookies are used, haproxy inserts the serverID of the chosen backend server
    # into the cookie to keep the session persistent. If that backend server goes down,
    # the client's cookie is not refreshed; with this option the request is redirected
    # to another backend server so that service continues.
    option redispatch
    # Maximum time to wait in the queue. When a server's maxconn is reached, connections
    # are left pending in a queue which may be server-specific or global to the backend.
    timeout queue 1m
    # Maximum time to wait for a connection to a server to succeed; the default unit is milliseconds
    timeout connect 1m
    # Client inactivity timeout. The inactivity timeout applies when the client is
    # expected to acknowledge or send data.
    timeout client 15m
    # Set the maximum inactivity time on the server side. The inactivity timeout applies
    # when the server is expected to acknowledge or send data.
    timeout server 15m
    timeout check 30s
    maxconn 5120
#---------------------------------------------------------------------
# haproxy web monitoring: statistics page
listen status
    bind 0.0.0.0:1080
    mode http
    log global
    stats enable
    # Refresh the statistics page every 30s
    stats refresh 30s
    stats uri /haproxy-stats
    # Prompt text shown when the statistics page asks for authentication
    stats realm Private lands
    # User and password for the statistics page; add one line per additional account
    stats auth admin:Gsld1234!
    # Hide the haproxy version on the statistics page
    # stats hide-version
#---------------------------------------------------------------------
listen master
    bind *:5000
    mode tcp
    option tcplog
    balance roundrobin
    option httpchk OPTIONS /master
    http-check expect status 200
    default-server inter 3s fall 3 rise 2 on-marked-down shutdown-sessions
    server node1 10.10.0.1:5432 maxconn 1500 check port 8008 inter 5000 rise 2 fall 2
    server node2 10.10.0.2:5432 maxconn 1500 check port 8008 inter 5000 rise 2 fall 2
    server node3 10.10.0.3:5432 maxconn 1500 check port 8008 inter 5000 rise 2 fall 2
listen replicas
    bind *:5001
    mode tcp
    option tcplog
    balance roundrobin
    option httpchk OPTIONS /replica
    http-check expect status 200
    default-server inter 3s fall 3 rise 2 on-marked-down shutdown-sessions
    server node1 10.10.0.1:5432 maxconn 1500 check port 8008 inter 5000 rise 2 fall 2
    server node2 10.10.0.2:5432 maxconn 1500 check port 8008 inter 5000 rise 2 fall 2
    server node3 10.10.0.3:5432 maxconn 1500 check port 8008 inter 5000 rise 2 fall 2
EOF

5.3、啓動

systemctl start haproxy && systemctl enable haproxy && systemctl status haproxy

5.4、頁面訪問

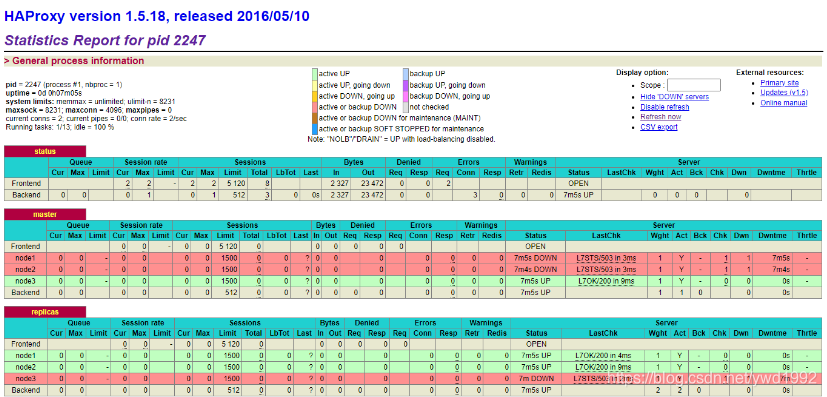

瀏覽器訪問http://10.10.0.4:1080/haproxy-stats,輸入前面配置文件中設置的用戶名密碼即可進入,這裏是admin/Gsld1234!

- 頁面中我們可以看到當前的主節點及從節點

- 我們通過5000端口和5001端口分別來提供寫服務和讀服務,如果需要對數據庫寫入數,只需要對外提供10.10.0.4:5000即可,可以模擬主庫故障,即關閉其中的master節點來驗證是否會進行自動主從切換
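HAProxy decides where to route by sending `OPTIONS /master` and `OPTIONS /replica` to the Patroni REST API on port 8008: a node answers 200 only for the role it currently holds and 503 otherwise. The sketch below reproduces that decision from the command line so a failover can be watched while testing. The helper names `http_code`, `role_from_codes`, and `node_role` are hypothetical; only `curl` is required.

```shell
#!/bin/bash
# http_code URL
# Prints the HTTP status of an OPTIONS request (000 if the node is unreachable).
http_code() {
    curl -s -o /dev/null -m 3 -X OPTIONS -w '%{http_code}' "$1"
}

# role_from_codes MASTER_CODE REPLICA_CODE
# Applies the same rule as the two "http-check expect status 200" lines.
role_from_codes() {
    if [ "$1" = "200" ]; then
        echo master
    elif [ "$2" = "200" ]; then
        echo replica
    else
        echo down
    fi
}

# node_role IP: probes the Patroni REST API on IP:8008 and prints the role.
node_role() {
    role_from_codes "$(http_code "http://$1:8008/master")" \
                    "$(http_code "http://$1:8008/replica")"
}

# Usage: for ip in 10.10.0.1 10.10.0.2 10.10.0.3; do echo "$ip $(node_role "$ip")"; done
```

Running the loop before and after stopping the master should show the `master` label move to one of the former replicas.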

6. Keepalived (Keepalived nodes)

6.1 Install dependencies

yum -y install openssl-devel

6.2 Download Keepalived

https://www.keepalived.org/download.html

6.3 Install

tar -xvf keepalived-2.0.20.tar.gz && rm -rf keepalived-2.0.20.tar.gz && cd keepalived-2.0.20

./configure --prefix=/usr/local/keepalived

make && make install

6.4 Check the version

/usr/local/keepalived/sbin/keepalived -v

6.5 Configuration file

- Detailed explanation of the configuration parameters

https://www.cnblogs.com/arjenlee/p/9258188.html

- Create the configuration directory and configuration file

mkdir -p /etc/keepalived

The bundled configuration file, /usr/local/keepalived/etc/keepalived/keepalived.conf, can serve as a reference; here we simply create a new configuration file.

- Master server (haproxy1)

vim /etc/keepalived/keepalived.conf

global_defs {

router_id haproxy1

}

vrrp_script haproxy_check {

script "/usr/local/keepalived/check.sh"

interval 2

weight -20

}

vrrp_instance VI_1 {

state MASTER

interface eth0

virtual_router_id 51

priority 80

advert_int 1

track_script {

haproxy_check

}

authentication {

auth_type PASS

auth_pass 123456

}

virtual_ipaddress {

10.10.0.8

}

}

- Backup server (haproxy2)

vim /etc/keepalived/keepalived.conf

global_defs {

router_id haproxy2

}

vrrp_script haproxy_check {

script "/usr/local/keepalived/check.sh"

interval 2

weight -20

}

vrrp_instance VI_1 {

state BACKUP

interface eth0

virtual_router_id 51

priority 70

advert_int 1

track_script {

haproxy_check

}

authentication {

auth_type PASS

auth_pass 123456

}

virtual_ipaddress {

10.10.0.8

}

}

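- vrrp_script: the check script; it checks the haproxy status, and if haproxy is down the VIP floats to the other node

- script: the script command

- interval: check interval, in seconds

- weight: adjustment applied to the priority; with a negative value the priority drops by that amount while the check fails

- state: marks the node as master or backup

- interface: the network interface the VIP is bound to; here, the interface used for external traffic

- virtual_router_id: a value from 1 to 255; master and backup must use the same value to form one group

- priority: the node with the higher value becomes master, so the master's value must be greater than the backup's; this is what decides master versus backup

- advert_int: interval in seconds between advertisements from master to backup, used to determine whether the master is alive

- auth_type: authentication method, PASS or AH; PASS is recommended

- auth_pass: the password for PASS

- virtual_ipaddress: the VIP addresses, up to 20 can be listed; keepalived configures the VIP automatically after it starts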
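The interaction between `priority` and `weight` decides when the VIP moves. With the values in this article, a failed check on haproxy1 lowers its priority from 80 to 60, which is below haproxy2's 70, so haproxy2 takes over. The sketch below works through that arithmetic; `effective_priority` is an illustrative helper, not a keepalived command, and it ignores the `rise`/`fall` counters.

```shell
#!/bin/bash
# effective_priority BASE WEIGHT CHECK_EXIT
# Negative weight: priority is reduced by |WEIGHT| while the check fails.
# Positive weight: priority is raised by WEIGHT while the check succeeds.
effective_priority() {
    local base=$1 weight=$2 check_exit=$3
    if [ "$weight" -lt 0 ] && [ "$check_exit" -ne 0 ]; then
        echo $(( base + weight ))
    elif [ "$weight" -gt 0 ] && [ "$check_exit" -eq 0 ]; then
        echo $(( base + weight ))
    else
        echo "$base"
    fi
}

# Usage: effective_priority 80 -20 1   # haproxy1 with a failing check
```

The gap between the two priorities (here 10) must stay smaller than the absolute weight (here 20), otherwise a failed check would not change which node is master.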

6.6 Check script

Place the script at the path named in the configuration file and make it executable.

vim /usr/local/keepalived/check.sh

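#!/bin/bash
# Exit 0 while a process named exactly "haproxy" is running, 1 otherwise.
# keepalived applies the vrrp_script weight while this check fails.
# pgrep -x avoids false matches such as an editor that has haproxy.cfg open.
if pgrep -x haproxy > /dev/null; then
    exit 0
else
    exit 1
fi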

chmod +x /usr/local/keepalived/check.sh

6.7 Start

- Create the service file

cat > /etc/systemd/system/keepalived.service <<EOF

[Unit]

Description=LVS and VRRP High Availability Monitor

After=syslog.target network-online.target

[Service]

Type=forking

KillMode=process

ExecStart=/usr/local/keepalived/sbin/keepalived

[Install]

WantedBy=multi-user.target

EOF

systemctl daemon-reload

systemctl enable keepalived && systemctl start keepalived && systemctl status keepalived

6.8 Check master and backup

Check the network information on the master node to see the VIP we configured. If the master node, haproxy, or keepalived goes down, the VIP automatically floats to the backup node, which provides high availability.

![VIP bound on the master node](https://pic1.xuehuaimg.com/proxy/csdn/https://img-blog.csdnimg.cn/20200309155154329.png?x-oss-process=image/watermark,type_ZmFuZ3poZW5naGVpdGk,shadow_10,text_aHR0cHM6Ly9ibG9nLmNzZG4ubmV0L3l3ZDE5OTI=,size_16,color_FFFFFF,t_70)
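To check from the command line which node currently holds the VIP, the address list can be filtered for 10.10.0.8. The sketch below assumes the `eth0` interface from the keepalived configuration; `holds_vip` is a hypothetical helper that reads `ip -o -4 addr show` output on standard input.

```shell
#!/bin/bash
# holds_vip VIP
# Succeeds if the address list on stdin contains VIP as an IPv4 address.
# Matching the fixed string "inet <VIP>/" keeps 10.10.0.8 from matching 10.10.0.80.
holds_vip() {
    grep -qF -- "inet $1/"
}

# Usage on haproxy1 or haproxy2:
#   ip -o -4 addr show dev eth0 | holds_vip 10.10.0.8 && echo "this node holds the VIP"
```

Running the check on both nodes, then stopping haproxy on the master, should show the VIP move to the backup within a few seconds.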